On the Relativity of Recognizing the Products of Emergence and the Nature of the Hierarchy of Physical Matter

Kurt A. Richardson

Introduction

On the matter of privileging a so-called 'scientific method' over all other methods in the discovery of 'truth' the philosopher Paul Feyerabend said:

It is thus possible to create a tradition that is held together by strict rules, and that is also successful to some extent. But is it desirable to support such a tradition to the exclusion of everything else? Should we transfer to it the sole rights for dealing in knowledge, so that any result that has been obtained by other methods is at once ruled out of court?8

Like Feyerabend my answer to the question is "a firm and resounding NO." In this chapter I would like to explore my reasons for this answer by exploring the ontological status of emergent products. I am particularly interested in demonstrating the relativity of recognising the products of complexity, i.e., that emergent-product recognition is problematic and not 'solved' by the application of one particular method over all others. I would also like to explore the consequences of this in regard to the hierarchy of physical matter and the hierarchy of human explanations.

A Theory of Everything

My starting point for such an exploration is the assumption that the Universe, at some level, can be well-described as a cellular automaton (CA).

Of course, by selecting this starting point I am making the assertion not only that some form of cellular automaton represents a plausible theory of everything, but also that a theory of everything does indeed exist. This would seem to be a radically reductionist starting point, but I am claiming a lot less than may at first appear. Rather than defend this starting point in the main part of the chapter, I have added a short appendix that discusses the reasonableness of the CA assumption. One thing is clear: a theory of everything doesn’t even come close to offering explanations for everything, so we needn’t get too concerned about the 'end of science' or human thinking just because such a theory might exist and might be discoverable.

Whether one accepts my starting point or not, it should be recognized that this is a philosophical exercise: to explore the ontological and epistemological implications of assuming that the Universe is well-described as a CA at some level. It doesn’t necessarily follow that the Universe actually is and only is a CA. The details of the CA, however, do not change the philosophical conclusions. Richardson15 has explored these issues at length and argued that, whether or not a CA model is a plausible theory of everything, a philosophy of complexity based on this assumption does seem to have the capacity to contain all philosophies, arguing that each of them is a limited case of a general, yet empty, philosophy (akin to the Buddhist 'emptiness'). This seems to be a fantastically audacious claim, particularly given the heated debate nowadays over global (intellectual) imperialism. However, one should realise that the resulting general philosophy is quite empty - which I suppose is what we would expect if looking for a philosophy that was valid in all contexts - and explicitly avoids pushing to the fore one perspective over any other. Quoting Feyerabend8 again, "there is only one principle that can be defended under all circumstances and in all stages of human development. It is the principle: anything goes." In a sense, all philosophies are a special case of nothing!

So, What is Emergence?

In the founding issue of the journal Emergence, Goldstein10 offers the following description of 'emergence':

Emergence ... refers to the arising of novel and coherent structures, patterns, and properties during the process of self-organization in complex systems. Emergent phenomena are conceptualized as occurring on the macro level, in contrast to the micro-level components and processes out of which they arise.

For the rest of this paper I would like to consider how patterns and properties emerge in simple CA, and how the macro is distinguished from the micro. I will argue that the recognition of emergent products, which is often how the macro is distinguished from the micro, is method dependent (and therefore relativistic to a degree). The recognition of emergent products requires an abstraction away from what is, and as with all abstractions, information is lost in the process. Of course, without the abstraction process we would have all information available, which is no better than having no information at all. "Only a tiny fraction of [the richness of reality] affects our minds. This is a blessing, not a drawback. A superconscious organism would not be superwise, it would be paralyzed."9 I do not intend to offer a full review of the term 'emergence' here. The interested reader might find Goldstein10 or Corning3,4 useful as additional sources on the evolution of the term itself.

Complex cellular automata and patterns

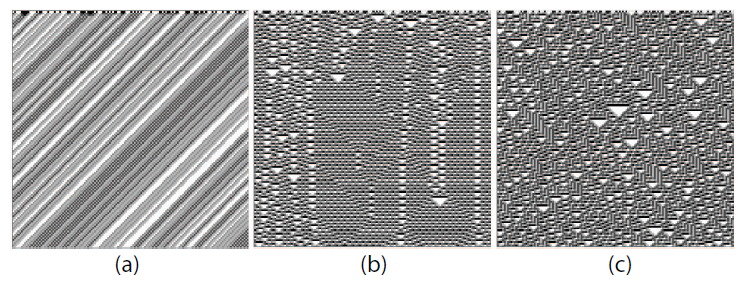

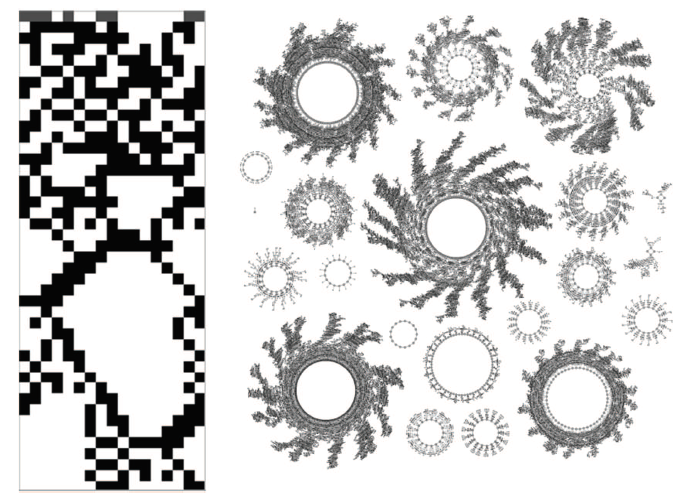

Figure 1b shows part of the space-time diagram for the simple CA &36 (n = 20, k = 3). Even though the initial conditions for this particular instance of the CA were selected randomly, a pattern emerges that is neither totally ordered (an example of which is given in Figure 1a) nor quasi-chaotic, or quasi-random (Figure 1c). CA &36 is an example of a (behaviorally) complex CA as defined by Wuensche19. There isn’t a lot else we can say about the pattern that emerges (although there is a wealth of statistical analyses we might perform to quantify certain aspects of it), except that maybe it is rather aesthetically pleasing and that its appearance from such a simple set of rules, as well as a random initial configuration, is quite a surprise. So even though it is often said that these patterns emerge from the application of the CA rule, it is not particularly clear what is emerging - what are the emergent products here? Is it appropriate to suggest that the space-time pattern is emergent, given that it is a perfect image of the 'micro'-level? What would the macro level be in this example? It is not immediately clear at all.

There is no autonomy in this example, as all cells are connected to all others - the pattern is simply the result of applying the CA rule time step after time step. One thing we can say is that the pattern was not wholly predictable from the CA rules, and so in that sense the pattern is novel given our lack of knowledge of it before the system was run forward. But did it really emerge? Or are our analytical tools just too feeble to characterize the patterns beforehand? In that case, the fact that the patterns are regarded as emergent simply reflects our lack of knowledge about this simple system - our theories are simply not powerful enough. Following Bedau1, Goldstein10 refers to this aspect of emergence as ostensiveness, i.e., the products of emergence are only recognized by showing themselves1. This is also linked to the idea of radical novelty10, meaning that the occurrence of these patterns was not predictable beforehand2.
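The statement that the pattern is "simply the result of applying the CA rule time step after time step" can be made concrete with a minimal sketch. It rests on assumptions not stated in the text: that '&36' is hexadecimal notation for a two-state, nearest-neighbour (k = 3) rule table, i.e. elementary rule 54, and that the n = 20 cells sit on a ring. The names `step` and `space_time` are illustrative only.

```python
import random

RULE = 0x36   # assumption: '&36' read as hexadecimal, i.e. elementary rule 54
N_CELLS = 20  # n = 20 cells; the ring (periodic boundary) is an assumption


def step(cells, rule=RULE):
    """Apply the same k = 3 rule once to every cell of the ring."""
    n = len(cells)
    nxt = []
    for i in range(n):
        left, centre, right = cells[(i - 1) % n], cells[i], cells[(i + 1) % n]
        neighbourhood = (left << 2) | (centre << 1) | right  # value 0..7
        nxt.append((rule >> neighbourhood) & 1)  # read that bit of the rule table
    return nxt


def space_time(initial, steps, rule=RULE):
    """Return the space-time diagram: the initial row plus one row per step."""
    rows = [list(initial)]
    for _ in range(steps):
        rows.append(step(rows[-1], rule))
    return rows


if __name__ == "__main__":
    rng = random.Random(0)  # fixed seed so the run is repeatable
    start = [rng.randint(0, 1) for _ in range(N_CELLS)]
    for row in space_time(start, 30):
        print("".join("#" if c else "." for c in row))
```

Nothing in the sketch refers to triangles, particles, or any other pattern; every row is produced from the previous one by the same local table lookup, which is the sense in which the space-time diagram is a complete image of the micro-level.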

Figure 1. Examples of (a) ordered, (b) complex, and (c) chaotic automatons

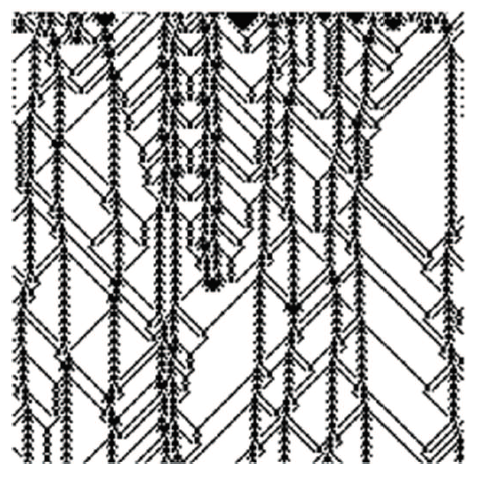

These patterns are of interest in that they illustrate some aspect of the concept of emergence3, but they do not tell us much at all about the dynamics of the space-time plot. If we look very closely, however, we begin to notice that there are patterns within the patterns. The triangles have different sizes and their evolution (shape and movement) 'down' the space-time plot seems to indicate some kind of interaction between local areas of the overall pattern. We might also notice that certain areas of the space-time plot are tiled with a common sub-pattern - a pattern basis. Hansen and Crutchfield11 demonstrated how an emergent dynamics can be uncovered by applying a filter to the space-time plot. Figure 2 shows the result of a 'pattern-basis filter' applied to a particular instance of the space-time plot of CA rule &36. Once the filter is applied an underlying dynamics is displayed for all to see. What can we say about this alternative representation of the micro-detailed space-time plot?

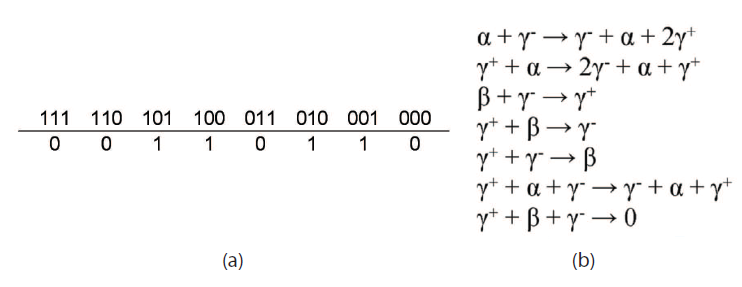

Firstly, we can develop an alternative macro-physics for this apparent dynamical behavior that is entirely different (from a language perspective at least) from the micro-physics of the CA. If we see these two 'physics' side by side (Figure 3) it is plain how different they are. The micro-physics description uses a micro-language16 (which is sometimes referred to as the 'language of design') comprised only of 0s and 1s, whereas the macro-language is comprised of an entirely different alphabet: α, β, γ+, γ-. There is no simple mapping between these two languages/physics, i.e., there is no way (currently) to move between the two languages without some kind of information loss - the translation is lossy (as it is in the translation of human languages). As Standish17 remarks, "An emergent phenomenon is simply one that is described by atomic concepts available in the macrolanguage, but cannot be so described in the micro-language" (p. 4). This imperfect relationship comes about because, although the macro-physics is a good theory, it is not a complete description of the micro-physics; it captures some of the details but not all. A qualitative change in language is equivalent to a change in ontology, but what is the ontological status of the emergent products?

Figure 2. CA &36 after pattern basis filtering

At the micro-level, only cells (that can only be in states '0' or '1') absolutely exist. But can we say with equal confidence that α-particles, for example, exist? Given the filtered version of the space-time diagram showing the apparent evolution of a set of quasi-particles, as well as their compelling macro-physics, it is very tempting indeed to answer in the affirmative.

Figure 3. (a) The micro-physics of level 0 and (b) the macro-physics of level 1

However, we need to bear in mind how the original patterns were created and how we uncovered the quasi-particle dynamics. The patterns were generated by recursively applying a micro-rule to each cell in the system. Each cell is treated exactly the same as the others - none receive any special or unique treatment. The application of the rule does not change as the system configuration evolves. And, most importantly, each cell in the configuration depends on the states of all the other cells - a particular sequence of 0s and 1s representing the evolution of a particular cell, only occurs that way because all the other cells evolve in their particular way. Although the rule is only applied locally, the dynamics that emerge are a result of global interactions. So α-particles only emerge because β-particles emerge (as well as the other particles) - the emergence of α-particles does not occur independently of the emergence of β-particles. As a result it would be a mistake to assume that some absolute level of ontological autonomy should be associated with these particles, though of course the macro-physical description provides evidence that making such an assumption is for many intents and purposes useful. However, as with all assumptions, just because making the assumption leads to useful explanations, it does not follow that the assumption is correct. Even the motion of these particles is only apparent, not real; it is simply the result of reapplying the CA rule for each cell at each time step. Whilst discussing Conway’s Game-of-Life (a form of two-dimensional CA), philosopher Daniel Dennett6 asks:

[S]hould we really say that there is real motion in the Life world, or only apparent motion? The flashing pixels on the computer screen are a paradigm case, after all, of what a psychologist would call apparent motion. Are there really gliders [or, quasi-particles] that move, or are there just patterns of cell state that move? And if we opt for the latter, should we say at least that these moving patterns are real?

Choosing '0' and '1' to represent the two cell states perhaps further suggests the illusion of motion and ontological independence.

The choice of '0' and '1' is a little misleading as it can easily be interpreted as being 'on' and 'off' or 'something' and 'nothing'. Whether a cell is in state '0' or '1', the cell itself still exists even if a 'black square' is absent. We could easily use '×' and '~' to represent the two states and the result would be the same. In uncovering the macro-dynamics we had to, however reasonable it may have seemed, choose a pattern-basis to be 'removed' or filtered-out. By clearing-out the space-time diagram we allowed 'defects' in the space-time diagram to become more clearly apparent. We also chose to set to '0' those areas of the space-time diagram that were tiled evenly with the pattern-basis, and '1' those regions which represented distortions in the pattern-basis; we assumed an even 'background' and then removed it to make visible what was in the 'foreground'4. Again, it is tempting to interpret the '0'-covered (white areas) as 'nothing' and the '1'-covered (black) areas as 'something'. This would be incorrect however, as all the cells that comprise the CA network are still there, we have simply chosen a representation that helps us to 'see' the particles and ignore the rest. However, it must be noted that though '0' and '1' do not map to 'nothing' and 'something' at the CA-substrate level, this is exactly the mapping made at the abstracted macro-level.
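The filtering step described above (set evenly tiled regions to '0' and distortions to '1') can be sketched as follows. This is a deliberately simplified stand-in, not Hansen and Crutchfield's actual filter. It assumes, beyond what the text states, that the background of the rule is tiled by the four-cell words 0001 and 1110, and it treats a cell as background whenever it lies inside an eight-cell window that repeats one such tile exactly. The names `BASIS`, `TILES`, and `filter_row` are hypothetical.

```python
BASIS = ("0001", "1110")  # assumption: background tiles of the pattern basis


def rotations(word):
    """All cyclic shifts of a tile, so any phase of the tiling is accepted."""
    return {word[i:] + word[:i] for i in range(len(word))}


TILES = set().union(*(rotations(b) for b in BASIS))


def filter_row(row, window=8):
    """Return a row of 0s (background) and 1s (distortion) for a ring of cells.

    A cell is set to 0 if some window of `window` consecutive cells containing
    it is an exact repetition of one background tile; otherwise it stays 1.
    The row must be at least `window` cells long.
    """
    n = len(row)
    out = [1] * n  # start by assuming every cell is 'foreground'
    for start in range(n):
        idx = [(start + j) % n for j in range(window)]
        word = "".join(str(row[i]) for i in idx)
        if word[:4] in TILES and word == word[:4] * (window // 4):
            for i in idx:
                out[i] = 0  # evenly tiled region: remove it as 'background'
    return out


if __name__ == "__main__":
    # Two stretches of clean tiling separated by two extra '1' cells.
    row = [0, 0, 0, 1] * 2 + [1, 1] + [0, 0, 0, 1] * 2
    print(filter_row(row))
```

In the usage row only one of the eighteen cells survives the filter; the other seventeen, including one of the two inserted cells, are absorbed into the 'background' even though every one of them is still a cell of the CA. The '0' in the output means "matches the chosen tiling", not "nothing is there".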

So in any absolute sense, the emergent products do not exist as such. They do however exist in the alternative (although deeply - complexly - related) macro-level description of the CA. The change in perspective is equivalent to an ontological shift, where the existence of the emergent products is a 'feature' of the new perspective though not of the absolute CA perspective; what existed at the micro-level does not exist at the macro-level and vice versa. This new perspective is an incomplete description, yet the fact that the new description is substantially complete would suggest the substantial realism of the particles on which the new description is based. For this reason we say that though the particles do not absolutely exist, they do indeed exhibit substantial realism and as such, for many intents and purposes, can be treated as if they were real (for this reason they are often referred to as 'quasi-particles' rather than just 'particles').

Quoting Feyerabend again,

Abstractions remove the particulars that distinguish an object from another, together with some general properties... Experiments further remove or try to remove the links that tie every process to its surroundings - they create an artificial and somewhat impoverished environment and explore its peculiarities.9

The process of abstraction is synonymous with the filtering process described above. Feyerabend goes on to say (p. 5):

In both cases, things are being taken away or 'blocked off' from the totality that surrounds us. Interestingly enough, the remains are called 'real', which means they are regarded as more important than the totality itself. (italics added)

So, what is considered real is what still remains after the filtering process is complete. What has been removed is often called background 'noise', when really what we have accomplished is the removal of 'details' not 'noise', for the sake of obtaining a representation that we can do 'science' with.

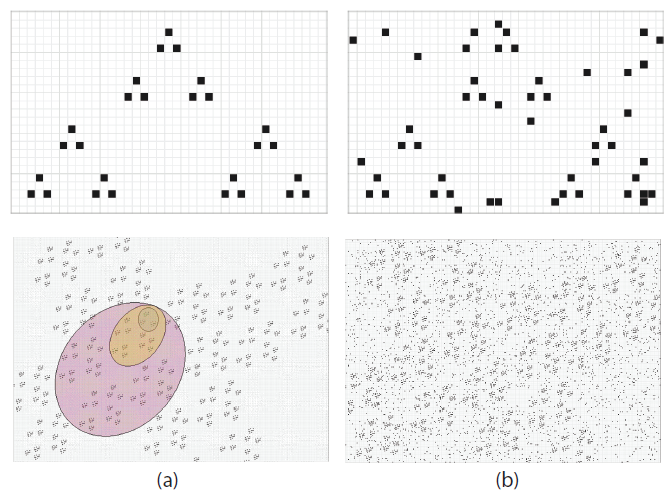

The Hierarchy of Reality: Nested versus Convoluted

This view of existence changes the popular image regarding the hierarchy of physical matter considerably. The popular image of the hierarchy of matter (illustrated in Figure 4a5) is that starting with atoms (for argument’s sake - of course more fundamental (or, more real) entities are known to the physical sciences) molecules are formed which are little more than agglomerations of atoms. Here, atoms are regarded as the 'micro' and molecules are regarded as the 'macro', and the filter applied is some mysterious process known as the 'scientific method' (although any competent and experienced scientist would rightly question the existence of such a universal method). From molecules, molecular complexes are formed, from which cells are formed, from which multi-cellular organisms are formed, and so on right up to galactic clusters and beyond. In such a neat nested hierarchy every 'entity' has a place which distinguishes it from other entities as well as determining which 'science' should be employed to understand it. Furthermore, every 'thing' at one particular 'level' in the hierarchy is made up of those 'things' said to exist at the 'level' below. This would seem to suggest that molecular physics/chemistry, say, should in principle be derivable from atomic physics. However, even though such a trick is supposedly possible (assuming such a neat hierarchical organization), in reality scientists are very far indeed from pulling off such a stunt. Nowadays the reason offered for this is simply the intractability of emergence, which is well known to nonlinear researchers. Rather than derive biology from chemistry, scientists are forced to assume that the 'entities' of interest to their particular domain (which are the result of a particular filtering, or coarse-graining) do exist as such. Once such a step is taken, a science can be developed independently of the science that deals with the supposedly 'simpler' 'lower-level' 'entities'. Making such an assumption (which is often made implicitly rather than explicitly) has worked out rather well, and an enormous amount of practical understanding has been accumulated that allows us to manipulate these 'entities' in semi-predictable ways, even if they don’t happen to truly exist! Technology is the obvious example of such success.

Non-composite secondary structures

As well as the background 'pattern basis' and the fundamental particles listed in Figure 3, CA &36 "supports a large number of secondary structures, some having the domain and particles as building blocks, some not describable at the domain/particle level"11. Though it has been acknowledged above that a complete 'physics' of one level cannot be derived from the 'physics' of the layer below, it is often assumed that the lower-level contains all that is necessary to allow the higher level to emerge. This assumption needs to be explored further, but just because we don’t have access to a perfect mapping from the lower level to the next level up, it doesn’t mean that some day such a bootstrapping method will not be uncovered.

In the CA model the fundamental 'lowest' level - level 0, say - does indeed contain 'everything' there is to know about the CA universe; the space-time diagram is indeed a complete description. However, the move to the particle level description - level 1 - is an imperfect ontological shift. As such, the set of quasi-particles making up the particle-level description is not sufficient to describe all the structures that are observed at the lower level (as a result of 'background' filtering), i.e., one cannot reverse the filtering process and perfectly reconstruct the micro-level. Hansen and Crutchfield’s discovery that secondary structures also exist indicates that another level of 'existence' - level 2 - can be uncovered. If all of these secondary structures were made up of the particles already described (α, β, etc.) then we could argue that a 'composite' particle level (2) exists 'above' the quasi-particle level (1). In the natural sciences this would suggest that chemistry is indeed derivable from physics, or that all of chemistry emerges from all of physics. However, the existence of "secondary structures" that are not "describable at the domain/particle level" suggests that there are entities existing at the higher composite level (2) that can’t possibly be explained in terms of the particle level (1) description, even if intractability was not an issue, i.e., there exist higher-level entities that are not combinations of α, β, or γ quasi-particles. Another way of saying this is that matter is not conserved between these ascending levels. Assuming these observations have merit in the 'real' world, this would suggest for example that chemistry cannot possibly be derived from physics in its entirety. This perhaps is no great surprise; physics is an idealization and so a chemistry derived directly from such a physics could only represent an idealistic subset of all chemistry even if we could somehow overcome the computational irreducibility of predicting the macro from the micro.
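The claim that the filtering process cannot be reversed to reconstruct the micro-level can be illustrated by counting. The sketch below uses a deliberately crude, hypothetical filter (`crude_filter`, which marks a cell '1' when it differs from the cell four sites to its left, i.e. when it breaks an assumed period-4 background). It is not the pattern-basis filter of Figure 2; it only shows that any filter which discards a background is many-to-one.

```python
from collections import Counter
from itertools import product

PERIOD = 4  # assumption: the discarded background repeats every four cells


def crude_filter(row):
    """Mark with 1 every cell that breaks period-4 repetition on a ring."""
    n = len(row)
    return tuple(row[i] ^ row[(i - PERIOD) % n] for i in range(n))


def preimage_counts(n):
    """Count how many of the 2**n micro-rows map to each filtered row."""
    return Counter(crude_filter(row) for row in product((0, 1), repeat=n))


if __name__ == "__main__":
    counts = preimage_counts(8)
    print(len(counts), "distinct filtered rows from", 2 ** 8, "micro-rows")
    # Two different backgrounds give the same empty macro-description.
    print(crude_filter((0, 0, 0, 1, 0, 0, 0, 1)))
    print(crude_filter((1, 1, 1, 0, 1, 1, 1, 0)))
```

For eight cells the 256 possible micro-rows collapse onto 16 filtered rows, each with 16 distinct pre-images, so a filtered row alone cannot say which micro-configuration produced it.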

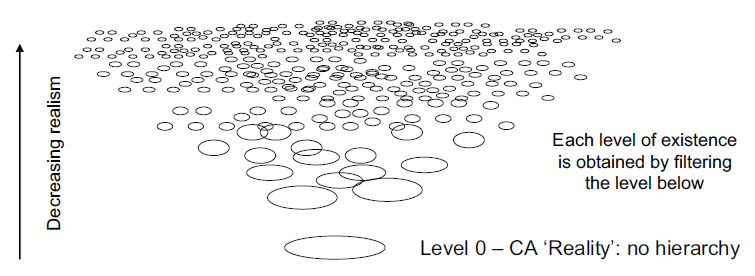

Figure 4. (a) Abstracted 'reality' versus (b) reality?

This observation indicates a different meaning to the aphorism "the whole is greater than the sum of the parts." Traditionally this has only really meant that, because of networked causality, the properties of the whole cannot be directly derived from an analysis of the interacting parts; basically, even though our ontology is (assumed to be) correct, our analytical tools aren’t quite up to the challenge of reconstructing the whole from its parts. Novelty in this particular interpretation simply comes from the fact that our 'science' is unable to predict the macroscopic behavior from the microscopic. The existence of 'non-composite secondary structures' suggests that, even if we could bootstrap from the micro to the macro, the 'parts' do not offer a complete basis from which to derive the 'whole'6; the 'whole' is not greater than the sum of its 'parts' because the 'whole' is not even constructed from those 'parts' (except in idealized computer models). In this context we would expect to observe chemical phenomena that could never be explained in terms of physics (however complete physics might get - in this view of the Universe physics can never be complete), and that might even contradict predictions made at the level of physics. There are entities that 'exist' within a chemical description of 'reality' that could never be explained in terms of atoms, say.

The fact that each science has facilitated such enormous strides in our ability to rationally affect our environment suggests that, though they may be based on incomplete ontologies, they are in fact very good partial reflections of 'reality'. The hierarchy of sciences is sustainable and justifiable, but is not an accurate representation of what is. However, the assumption that the physical matter the sciences attempt to explain organizes into a nested hierarchy would seem to be questionable. Regarding our hierarchy of 'explanations' as convoluted rather than nested would be more appropriate in light of the CA model.

Does level of abstraction correspond to degree of realism?

Given that information is lost as we move away from the absolute CA reality, it would be tempting to suggest that the 'entities' that 'exist' at subsequently higher levels of description, though substantially real, are increasingly less real. If our descriptions at one level were always tied to descriptions at lower levels then this argument would be supportable. But given that particular scientific communities will often operate with little regard to the ontological and epistemological standards of another scientific community, it is quite possible that a macro-explanation contains entities that are more real than those comprising what seems to be a micro-explanation. If this were not the case and we did indeed tie ourselves to purely quantum physical explanations, say, it is quite possible that, as a result of the multiple abstractions that would be necessary to get from quantum to social reality, we would learn next to nothing about that particular 'reality' (as we would have a partial description of a partial description of a partial description…).

The fact that we are comfortable with ignoring quantum descriptions and approaching 'social reality' in terms that seem more familiar to us (after all our human perceptions have been honed to give us rather good tools for dealing with this particular level of reality) enables us to make rather more progress in regard to the development of practical understanding for manipulating social reality.

In short, a hierarchy of vertical ontologies can be inferred that, though they may actually be inconsistent (or even incommensurable) with each other, can still be incredibly useful in providing practical understanding. The hierarchy of physical reality is not a nested hierarchy of the kind the hierarchy of the sciences has traditionally been taken to be.

Multiple Filters and Horizontal Ontologies

Given the fact that the process of filtering-out pattern bases to reveal 'macroscopic' dynamics works so well in the example above, it would be tempting to assume that even though higher-level descriptions are incomplete, there is in fact a 'best' way to obtain these descriptions. So although the discussion thus far supports the assertion that the recognition of emergent products is tied to our choice of methodology, it also supports (or at least it does not deny) the possibility that one particular methodology is better than all others. I would like to briefly challenge this possibility next. This section of the chapter represents a move away from the more scientifically-based philosophy presented thus far to a more speculative philosophy.

As we move up our constructed hierarchy of explanations, the reality that is 'explained' by our explanations seems to be more complex. By complex here I mean that we seem to have increasing difficulty in locating the boundaries, or the 'quasi-particles' that describe our systems of interest. This issue is perhaps exemplified at the 'social level', and more clearly illustrated by the methodological diversity of the social sciences. Whereas recognizing the 'atoms' of the physical sciences is seemingly a rather straightforward affair (though still only approximately representative of what is), the recognition of the 'atoms' of social science seems rather more problematic. There are many more ways to 'atomize' social reality in an effort to explain a particular phenomenon (of course our choice of 'atomization scheme' determines the phenomena that then take our interest, and to some extent, vice versa - although there are potentially many different (possibly contradictory) 'atomizations' that may lead to seemingly good explanations for the same phenomena). This is probably why social problems are often described as 'messy' problems18; the articulation of the system of interest itself is problematic, let alone the development of explanations. The fact that explanations of social phenomena often have low 'fitting coefficients', whereas explanations of physical phenomena often correlate very well indeed with perceived (measured) reality, may further suggest the legitimacy of multi-methodological approaches in the social sciences7.

I don’t want to spend much time at all defending the view that multi-methodological approaches for the social sciences are a must. For now I am content to point the reader towards the book Multimethodology13, which argues rather well for a pluralist attitude in the social sciences. The failed attempts in the past to develop a 'Theory of Society' satisfy me, for now at least, that pluralism is a feature of the social sciences rather than a failure of social scientists to construct such an all-embracing theory8.

Figure 5. The convoluted hierarchy of existence. Each ellipse represents the set of 'entities' (a theory’s alphabet) that supposedly account for certain phenomena at a certain 'level'

The different explanations of the same phenomena offered in the social sciences often rely on different ontological assumptions. Given our historical tendency to rank theories in terms of hierarchies of physical matter, we might ask ourselves how we might go about ranking social theories. In other words, are the entities used to construct explanation A more real than those entities used to construct explanation B? I very much doubt that a consistent answer exists for this particular question. The reason this issue is raised here is simply to support the suggestion that as we abstract away from the fundamental substrate of existence (level 0) towards the more familiar social level we experience a kind of ontological explosion (see Figure 5). This explosion occurs in explanation-space and represents the theoretical diversity inherent in our attempts to explain phenomena in our terms rather than the Universe’s terms (whatever they might be). As the explosion grows in intensity we find that there are many more legitimate ways to approach our explanation building. Each approach relies on different ways to recognize the 'atoms' of what is and how they interact with each other, i.e., each approach recognizes the products of emergence in a different way. Each approach is only capable of explaining certain aspects of the phenomenon of interest, encouraging the increased need for pluralist approaches. The notion of a 'level' becomes rather vague, as does the notion of a hierarchy; even a hierarchy of explanations is challenged.

Taking this need for pluralism to its extreme leads us into the realms of relativistic philosophies, as opposed to the realist philosophies that tend to reign in the physical sciences. So how do we decide which are the 'best' approaches that lead to the 'best' theories? How do we prevent the choice of 'filter' from being completely arbitrary? In the physical sciences consistency with currently accepted theories is often used as an additional filter. Equally important is partial validation through experimentation, and limited empirical observation. Physical scientists are rather fortunate in that many of their systems of interest can be isolated to such a degree that the scientists can actually get away with the assumption that the systems are indeed isolated. This helps a great deal. In the social sciences there is a more 'try it and see' attitude, as the application of reductionist methodologies is highly problematic because of the considerable difficulties in reproducing the same experiment time and time again. Often new situations, or new phenomena, force the social scientist back to square one and a new 'explanation' is constructed from scratch with minimal consideration of what theories went before (although what went before may prove inspirational).

The fact that pattern-basis filtering of complex CA leads to alternative representations of the CA reality that account for a good percentage of the system’s behavior would seem to suggest that an 'anything goes' relativism in particular contexts is not supportable. The success of physics itself at least supports the notion that some theories are considerably better (in a practical sense at least) than others. However, the fact that these alternative representations are incomplete would suggest that an 'anything goes' relativism might be valid for all contexts taken together at once. Once a pluralist position is adopted, the key role of critical thinking in taking us from a universal 'anything' to a particular 'something' becomes apparent.

I do not want to explore any further the epistemological consequences of CA research, as this paper is primarily concerned with the ontological status of emergent products (which, as has been indicated, is impossible to explore without concerning oneself with epistemology). If our commitment to a CA model of the Universe is to persist then we need to find CA systems, and the tools for analyzing them, that when abstracted multiple times (to 'higher levels' of existence) lead to representations that can be usefully 'atomized' and analyzed in a variety of different ways (analogous to the social sciences).

A note on intrinsic emergence

Before closing this chapter with some concluding statements I’d like to mention the notion of intrinsic emergence introduced by Crutchfield5, as it offers a computation-based explanation as to why some theories / perspectives / abstractions / etc. are better than others and why we should resist an "anything goes" relativism. According to Crutchfield5, "The problem is that the 'newness' [which is referred to as 'novelty' herein] in the emergence of pattern is always referred outside the system to some observer that anticipates the structure via a fixed palette of possible regularities." This leads to the relativistic situation mentioned above. Although there is clearly a relativistic dimension to pattern recognition (and therefore theory development), how might we resist a radical relativism (that would suggest that anything goes in any context all the time)? Crutchfield5 goes on to say, "What is distinctive about intrinsic emergence is that the patterns formed confer additional functionality which supports global information processing... [T]he hypothesis ... is that during intrinsic emergence there is an increase in intrinsic computational capability, which can be capitalized on and so can lend additional functionality." Another way of saying this is that, if we privilege a computational view of the Universe, the structures that emerge support information processing in some way - they increase the computing ability of the Universe. This would suggest that 'real' patterns are those patterns which can be linked to some computational task. For example, considering Figure 2 again, if a filter had been chosen that was not based upon the pattern basis then a pattern would still have been uncovered, but it is unlikely that the pattern would have any relevance to the computational capacity of the system - the odds are the pattern would also appear quite disordered.
This of course opens up the possibility that the best 'filters' are those that lead to regular patterns, but given that assessments of regularity (or disorder) lie on a spectrum how do we decide which patterns are sufficiently regular to allow us to associate with them some functional importance? Even though certain patterns play a functional role within the Universe (which suggests in absolute terms that not all patterns are 'real'), it does not follow that we, as participants in the Universe, can unambiguously determine which patterns are significant and which are not. It is not even clear if an 'outsider' (God, perhaps) could achieve absolute clarity either.

The notion of intrinsic emergence shows that the patterns that we might consider 'real' allow the system of interest to process information in some way, suggesting that certain patterns are internally meaningful and not arbitrary. However, it does not follow that just because some patterns are more 'real' than others we can determine which are which. In a universal and absolute sense, not all filters and patterns are made equal and certain filters (and their consequent patterns) are more meaningful than others. In the absence of direct access to absolute and complete descriptions of reality, however, an 'anything goes' relativism (such as Feyerabend’s) cannot be dismissed on rational grounds alone and offers the possibility of a more genuine engagement with the (unseen) 'requirements' of certain contexts.

Summary and Conclusions

In summary I’d like to 'atomize' (and therefore abstract in an incomplete way) the assertions made herein concerning the nature of emergents:

- Emergent products appear as the result of a well-chosen filter - rather than the products of emergence 'showing' themselves, we learn to 'see' them. The distinction between macro and micro is therefore linked to our choice of filter.

- Emergent products are not real though "their salience as real things is considerable..."6. Following Emmeche et al.7, we say that they are substantially real. In a limited sense, degree of realism is a measure of completeness relative to level 0.

- Emergent products are novel in terms of the micro-description, i.e., they are newly recognized. There is no such thing as absolute novelty. Even an 'idea' has first to be recognized as such. There will be novel configurations of cell states, but no new real objects will emerge, though new substantially real objects can emerge.

- In absolute terms, what remains after filtering (the 'foreground') is not ontologically different from what was filtered-out (the 'background'); what is labelled as 'not-real' is ontologically no different from what is labelled 'real'.

- Product autonomy is an impression resulting from the filtering process.

- The products of emergence, and their intrinsic characteristics, occurring at a particular level of abstraction do not occur independently - rather than individual quasi-particles emerging independently the whole set of quasi-particles emerges together. The hierarchy of explanations correlates only approximately with degree of realism.

- The emergent entities at level n+1 are not derived purely from the entities comprising level n, even if a perfect bootstrapping method that could overcome the problem of intractability was invented.

- Emergent products are non-real yet substantially real, incomplete yet representative.

- Determining what 'macro' is depends upon choice of perspective (which is often driven by purpose) and of course what one considers as 'micro' (which is also chosen via the application of a filter).

- All filters, at the universal level, are equally valid, although certain filters may dominate in particular contexts.

One last remark. Much philosophical debate circles around what the most appropriate filters are for understanding reality. For example, radical scientism argues that the scientific methods are the most appropriate, whereas humanism argues that the filters resulting from culture, personal experience, values, etc. are the most appropriate. An analysis of a CA Universe suggests that it is impossible to justify the privileging of any filter over all others on rational grounds (though there is a way to go before we can say that this conclusion follows naturally from the CA view). Whereas political anarchism is not a particularly effective organizing principle for society at large, a complexity-informed theory of knowledge does tend towards an epistemological and ontological anarchism, at least as a Universal default position.

Appendix A: On the Reasonableness of Assuming that the Universe is, at Some Level, Well-Described as a Cellular Automaton

Given that the strength of one’s claims is related (but not necessarily so9) to one’s starting point, I would like to discuss the reasonableness of making the CA-assumption. I would also like to explore briefly what does not follow from assuming the existence of a theory of everything.

Firstly, although some form of CA could possibly turn out to be the theory of everything, I do not believe that inhabitants of the Universe will ever (knowingly) pin down the details of such a model - the reason for this being that, due to the nature of nonlinearity (assuming the Universe to be inherently nonlinear), there simultaneously exist an infinity of nonlinear (potentially non-overlapping) models that will provide (potentially contradictory) 'explanations' for all observed 'facts'. Laplacian determinism may well operate, but it certainly does not follow that we can observe this exquisite determinism in any complete sense (though clearly we can to a very limited degree).

Secondly, even if we serendipitously stumbled upon THE theory of everything (though we would never know that we had10), just because we have a theory of everything, it by no means follows that we can derive explanations for every thing. All we would have is a theory of how the fundamental units of existence interact. Bootstrapping from that primary CA substrate to 'higher levels' of existence is considerably more challenging than discovering such a fundamental theory in the first place. As Hofstadter12 remarks:

a bootstrap from simple molecules to entire cells is almost beyond one’s power to imagine. (p. 548)

I argue herein that not only is information lost as we 'abstract' to more familiar 'objects' (which is the very nature of abstraction), but that there is no single foolproof method to select the 'best' abstraction (even if there is such a 'best' abstraction).

So, claiming that the Universe might be well-described at some level as a CA is not as big a claim as it might first seem. In the argument I develop above, the details of such a CA are not really important for the validity of the conclusions I draw. But why start with a CA, even if the details are not particularly essential? My reason is simply parsimony; a CA model is possibly the simplest construction currently known to us that comes close to having the capacity to contain everything - this possibility arises because of the model’s recursive nature. With his Life game Conway has shown "that the Life universe ... is not fundamentally less rich than our own."14

There are a couple of things we can additionally suggest about such a CA model of the Universe. All CA universes are finite universes and as such certain configurations will eventually repeat. When this happens the Universe would fall into a particular attractor in which a sequence of configurations would be repeated for eternity. The idea that our Universe is currently racing around some high-period attractor cycle seems unlikely (given the novelty we observe11), or at least at odds with our perspective on things. The phase spaces of CA Universes contain many such attractors, highlighting the potential import of initial conditions12.

If our CA Universe is not living some endlessly repetitive nightmare (claimed by big bang / big crunch models), what might be going on instead? Many of the attractors of CA systems exist at the end of fibrous tentacles of configurations that do not occur on any attractor cycle. Figure 6 illustrates this. These transients are the 'gaps' between the system’s initial conditions and its final attractor. These transients can have very complex structures and can even support life-like behavior. So here is the CA Universe; from a range of initial conditions (all of which could lead to a statistically identical evolution) the Universe would pass through an inordinate number of unique configurations which would never be repeated again, before it finally settled into a fixed pattern of cycling configurations in which no such novelty would ever exist again (unless of course the rules of the game were changed or some external force pushed the system back onto one of the transient branches - which would of course simply result in a partial rerun). We are quasi-entities living on a transient branch of a CA attractor!
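The transient-then-cycle structure described above holds for any deterministic update rule on a finite set of configurations, and it can be measured directly on small systems. The following is a minimal sketch under stated assumptions, not the procedure used to produce the figures in this chapter: the function names are illustrative, and elementary rule 110 on a small ring is used purely as a stand-in system.

```python
from typing import Callable, Dict, Hashable, Tuple


def transient_and_period(step: Callable, state: Hashable) -> Tuple[int, int]:
    """Iterate `step` from `state` until a configuration repeats.

    Returns (transient_length, attractor_period). The loop terminates for
    any deterministic map on a finite state space, because some
    configuration must eventually recur.
    """
    first_seen: Dict[Hashable, int] = {}
    t = 0
    while state not in first_seen:
        first_seen[state] = t
        state = step(state)
        t += 1
    transient = first_seen[state]   # steps taken before entering the cycle
    period = t - first_seen[state]  # number of configurations on the cycle
    return transient, period


def make_elementary_rule(rule_number: int) -> Callable:
    """Build a 2-state, 3-neighbour CA update on a ring (stand-in system)."""
    def step(cells: tuple) -> tuple:
        n = len(cells)
        return tuple(
            (rule_number >> (4 * cells[(i - 1) % n]
                             + 2 * cells[i]
                             + cells[(i + 1) % n])) & 1
            for i in range(n)
        )
    return step


if __name__ == "__main__":
    rule = make_elementary_rule(110)
    start = (0,) * 9 + (1,)  # a single live cell on a ring of 10 cells
    transient, period = transient_and_period(rule, start)
    print("transient length:", transient, "attractor period:", period)
```

Because every visited configuration is stored, this approach is only practical for small rings; the number of possible configurations doubles with each added cell.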

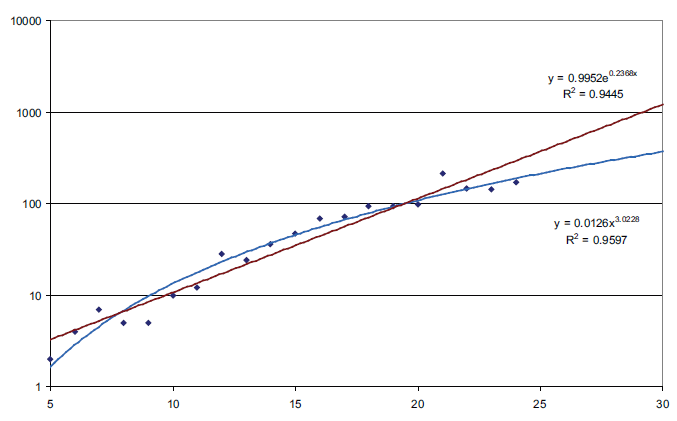

It would seem that in addition to long transients such a CA would also have to display complex transients, as opposed to ordered or chaotic. Wuensche19 defines complex CAs as those with medium input-entropy and high variance. An example of a CA displaying complex transient dynamics is rule &6c1e53a8 (k=5). The space-time diagram and the phase space for this rule are shown in Figure 7.
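To make the classification criterion more tangible, the sketch below runs the rule quoted above and tracks a per-step input-entropy, taken here to be the Shannon entropy of how often each rule-table entry is looked up. It is a hedged illustration rather than a reproduction of Wuensche's procedure: the bit-ordering used to decode the hex rule, the left-to-right neighbourhood encoding, the base-2 logarithm, the ring size of 150 cells and all function names are assumptions.

```python
import math
import random
from statistics import mean, pvariance

RULE_HEX = "6c1e53a8"  # rule quoted in the text
K = 5                  # neighbourhood size quoted in the text


def rule_table(rule_hex: str, k: int) -> list:
    """Expand a hex rule number into 2**k output bits.

    Assumed convention: bit i of the rule number is the output for the
    neighbourhood whose cells, read left to right, spell i in binary.
    """
    number = int(rule_hex, 16)
    return [(number >> i) & 1 for i in range(2 ** k)]


def neighbourhood_indices(cells: tuple, k: int) -> list:
    """Rule-table index looked up by each cell on a ring."""
    n, r = len(cells), k // 2
    indices = []
    for i in range(n):
        value = 0
        for offset in range(-r, r + 1):
            value = (value << 1) | cells[(i + offset) % n]
        indices.append(value)
    return indices


def step(cells: tuple, table: list, k: int) -> tuple:
    """Apply the rule table once to every cell simultaneously."""
    return tuple(table[v] for v in neighbourhood_indices(cells, k))


def input_entropy(cells: tuple, k: int) -> float:
    """Shannon entropy (bits) of the rule-table lookup frequencies."""
    n = len(cells)
    counts = {}
    for v in neighbourhood_indices(cells, k):
        counts[v] = counts.get(v, 0) + 1
    return -sum((c / n) * math.log2(c / n) for c in counts.values())


def entropy_profile(cells: tuple, table: list, k: int, steps: int) -> list:
    """Input-entropy at each of `steps` successive time steps."""
    profile = []
    for _ in range(steps):
        profile.append(input_entropy(cells, k))
        cells = step(cells, table, k)
    return profile


if __name__ == "__main__":
    rng = random.Random(0)
    start = tuple(rng.randint(0, 1) for _ in range(150))
    profile = entropy_profile(start, rule_table(RULE_HEX, K), K, 200)
    print("mean input-entropy:", mean(profile))
    print("variance of input-entropy:", pvariance(profile))
```

On this reading, ordered dynamics would show low entropy with little variation, chaotic dynamics high entropy with little variation, and complex dynamics an intermediate mean with a comparatively large variance.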

Figure 6. The largest attractor for CA rule &6c1e53a8, n=17, k=5 showing complex transient trees of length 72 steps. The period of the attractor cycle is 221 steps

Figure 7. A section of the space-time diagram for CA rule &6c1e53a8, n=17, k=5, and the complete attractor field which contains 54 attractors (21 are qualitatively distinct - only these are shown)

Though it by no means demonstrates the validity of the CA-hypothesis in itself, an interesting calculation might be to determine the size of CA needed that would have transients at least as long as the time the universe has existed thus far. We’ll consider the rule above for this little exercise. Let’s assume that one time-step in our CA Universe is equivalent to the small measure of time known as Planck Time13. Figure 8 plots the maximum transient length for rule &6c1e53a8 for increasing CA size (increasing n). If we assume that the Universe is approximately 15 billion years old (or 4.7 × 10^17 s) then we require transients at least 8.77 × 10^60 steps long. If we approximate the relationship between maximum transient length and network size shown in Figure 8 by a power law and extrapolate to 8.77 × 10^60 (which is a rather extreme extrapolation, I know) then we obtain a colossal value of e^11050 for n, the network size, whereas we get a value for n of 600 if we assume an exponential relationship. This number is either outrageously large or outrageously small compared to what we might expect. Chown2,14 estimated the number of distinct 'regions' that might exist to be 10^93, so we’re way off that mark! However, it does serve to illustrate that CA may be 'complex' enough to support the 'existence' of complex entities and maybe even 'Everything'. A simple 2-state CA of only 600 cells can have transients as long as 10^61 steps containing no repeated configurations. This does not confirm that such a system could contain exotic quasi-entities such as humans, but Hansen and Crutchfield (1997) have at least confirmed that simple hierarchies of interacting quasi-particles can be uncovered using appropriate filters. Chou and Reggia (1997) have even found that constructing CAs that support self-replicating structures is a surprisingly straightforward exercise. It would be interesting to perform the same calculation for CAs with cells that can take on more than 2 states to see if network sizes of 10^93 can be obtained, although there is no reason to assume this estimate to be correct in the first place.
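The arithmetic behind this estimate can be checked with a few lines of code. The sketch below is illustrative only: it uses an approximate value for the Planck time and a Julian year, and because the fitted coefficients behind Figure 8 are not given in the text, the parameters `a` and `b` of the two inversion helpers are placeholders that a reader would have to supply from their own fit. The helper `min_cells_for_transient` is an addition for context: it gives the hard lower bound on ring size that follows from counting configurations.

```python
import math

SECONDS_PER_YEAR = 365.25 * 24 * 3600  # Julian year, an assumption
PLANCK_TIME_S = 5.391e-44              # approximate Planck time in seconds
AGE_YEARS = 15e9                       # age of the Universe assumed in the text


def planck_steps(age_years: float = AGE_YEARS) -> float:
    """Number of Planck-time steps elapsed in `age_years`."""
    return age_years * SECONDS_PER_YEAR / PLANCK_TIME_S


def min_cells_for_transient(steps: float, states: int = 2) -> int:
    """Smallest n with states**n >= steps.

    A trajectory without repeats cannot be longer than the number of
    distinct configurations, so this is a lower bound on network size
    whatever the rule.
    """
    return math.ceil(math.log(steps) / math.log(states))


def invert_power_law(steps: float, a: float, b: float) -> float:
    """Solve steps = a * n**b for ln(n); log space avoids overflow."""
    return (math.log(steps) - math.log(a)) / b


def invert_exponential(steps: float, a: float, b: float) -> float:
    """Solve steps = a * exp(b * n) for n."""
    return (math.log(steps) - math.log(a)) / b


if __name__ == "__main__":
    steps = planck_steps()
    print(f"required transient length: {steps:.3g} steps")
    print("minimum 2-state cells:", min_cells_for_transient(steps))
```

Under these assumptions the required transient length comes out close to the figure quoted above, and the counting bound shows that a 2-state CA needs at least a couple of hundred cells before transients of that length are possible at all, so the exponential-fit estimate of 600 cells is at least not ruled out by state-space size.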

Figure 8. Maximum transient length versus network size for CA rule &6c1e53a8, n=5-25, k=5

Footnotes

if a power law is assumed, or:

if an exponential law is assumed.

References

1. Bedau, M. (1997) "Weak emergence," Philosophical Perspectives, 11: 375-399.

2. Chown, M. (2000) "Before the Big Bang," New Scientist, 166(2241): 24.

3. Corning, P. (2002) "The re-emergence of 'emergence': A venerable concept in search of a theory," Complexity, 7(6): 18-30.

4. Corning, P. (2002) "The emergence of 'emergence': Now what?" Emergence, 4(3): 54-71.

5. Crutchfield, J. P. (1994) "Is anything ever new? Considering emergence," in G. A. Cowan, D. Pines, and D. Meltzer (eds.), Complexity: Metaphors, Models, and Reality (pp. 515-537). Cambridge, MA: Perseus Books.

6. Dennett, D. C. (1991) "Real patterns," The Journal of Philosophy, 88: 27-51.

7. Emmeche, C., Koppe, S. and Stjernfelt, F. (2000) "Levels, emergence, and three versions of downward causation," in P. B. Andersen, C. Emmeche, N. O. Finnemann, and P. V. Christiansen (eds.), Downward Causation (pp. 13-34). Aarhus, Denmark: Aarhus University Press.

8. Feyerabend, P. (1975) Against Method, London, UK: NLB.

9. Feyerabend, P. (1999) Conquest of Abundance: A Tale of Abstraction Versus the Richness of Being, edited by Bert Terpstra, The University of Chicago Press: Chicago.

10. Goldstein, J. (1999) "Emergence as a construct: History and issues," Emergence, 1(1): 49-72.

11. Hansen, J. E. and Crutchfield, J. P. (1997) "Computational mechanics of cellular automata: An example," Physica D, 103(1-4): 169-189.

12. Hofstadter, D. R. (1979) Gödel, Escher, Bach: An Eternal Golden Braid, New York, NY: Basic Books.

13. Mingers, J., and Gill, A. (eds.) (1997) Multimethodology: The Theory and Practice of Combining Management Science Methodologies, Chichester, UK: John Wiley & Sons.

14. Poundstone, W. (1985) The Recursive Universe: Cosmic Complexity and the Limits of Scientific Knowledge, New York, NY: William Morrow.

15. Richardson, K. A. (2004) "The problematization of existence: Towards a philosophy of complexity," Nonlinear Dynamics, Psychology and the Life Sciences, 8(1): 17-40.

16. Ronald, E. M. A., Sipper, M., and Capcarrère, M. S. (1999) "Testing for emergence in artificial life," in Advances in Artificial Life: 5th European Conference, ECAL 99, volume 1674 of Lecture Notes in Computer Science, Floreano, D., Nicoud, J.-D., and Mondada, F. (eds.), pp. 13-20, Springer: Berlin.

17. Standish, R. K. (2001) "On complexity and emergence," Complexity International, 9, available at http://journal-ci.csse.monash.edu.au//vol09/standi09/

18. Vennix, J. A. M. (1996) Group Model Building: Facilitating Team Learning Using System Dynamics, Chichester, UK: John Wiley & Sons.

19. Wuensche, A. (1999) "Classifying cellular automata automatically: Finding gliders, filtering, and relating space-time patterns, attractor basins, and the Z parameter," Complexity, 4(3): 47-66.