The hegemony of the physical sciences:

An exploration in complexity thinking

Kurt Richardson1

Institute for the Study of Coherence and Emergence, 395 Central Street, Mansfield, MA 02048, USA

Abstract: Traditionally the natural sciences, particularly physics, have been regarded as the Gatekeepers of Truth. As such the legitimacy of other forms of knowledge has been called into question, particularly those methods that characterize the ‘softer’ sciences, and even the arts. This paper begins with an extended discussion concerning the main features of a complex system, and the nature of the boundaries that emerge within such systems. Subsequent to this discussion, and by assuming that the Universe at some level can be well-described as a complex system, the paper explores the notion of ontology, or existence, from a complex systems perspective. It is argued that none of the traditional objects of science, or any objects from any discipline, formal or not, can be said to be real in any absolute sense although a substantial realism may be temporarily associated with them. The limitations of the natural sciences are discussed as well as the deep connection between the ‘hard’ and the ‘soft’ sciences. As a result of this complex systems analysis, an evolutionary philosophy referred to as quasi-‘critical pluralism’ is outlined, which is more sensitive to the demands of complexity than contemporary reductionistic approaches.

“No human being will ever know the Truth, for even if they happened to say it by chance, they would not know they had done so.” Xenophanes

“If you see things as they are here and now, you have seen everything that has happened from all eternity. All things are an interrelated Oneness.” Marcus Aurelius

Introduction

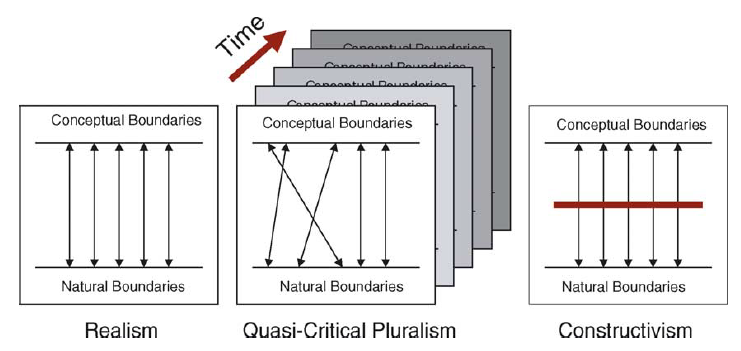

Realism versus constructivism

There are at least two broad perspectives from which the status of our scientific knowledge claims can be understood. The first is a purely realist view of scientific knowledge, referred to as scientific realism. According to this view the “theoretical entities that are characterized by a true theory actually exist even though they cannot be directly observed. Alternatively, that the evidence that confirms a theory also serves to confirm the existence of any theoretical or ‘hypothetical’ entities characterized by that theory” (Fetzer and Almeder [17: 118]). This definition suggests that scientific knowledge gives us direct knowledge of entities that exist independent of the existence of any observer, i.e., rigorous application of scientific methods yields theories of certain entities that exist mind-independently (independently of what we believe or feel about those entities). In this view an objective reality does exist, and it is through the application of method that we can have objective scientific knowledge of ‘reality’. In complete opposition to the realist position is idealism. This position argues that, though there does exist an objective reality, we can never have direct objective knowledge concerning that reality. Accordingly, knowledge is manufactured rather than discovered. The manufacturing process is inherently biased by our methods of production and is incapable of delivering objective knowledge of some external reality: objectivity becomes no more than a myth. Social constructivism, which is a form of idealism, in its extreme form regards scientific knowledge as merely a socially-constructed discourse that is inherently subjective in nature. As there can be no objective knowledge, there can be no dominant discourse because there can be no test or argument that could conclusively support the dominance of one discourse over another.
As such, science is just another approach ‘out there’ to making sense and should be treated with no more reverence than any other approach. As Masani [30] laments, “constructivism is anti-scientific to the bone.”

The relationship between language and objective reality

An alternative way to distinguish between realism and idealism is to consider the relationship between the language we use to describe reality and reality itself. Realists argue that there is a one-to-one correspondence between our language and reality. This leads to a number of interesting consequences like, for example, the belief that there is a best, or universal, language for describing reality and that that language happens to be the language of science, namely mathematics and logic. Idealists, specifically relativists, on the other hand argue that there is no relationship whatsoever between our language and reality. The terms or labels we use are no more than useful sense-making tools that, though convenient, have no intrinsic basis in some notional objective reality. Though I do not believe that anyone who supports either of these positions is naïve enough to believe in them wholeheartedly, this is generally how the debate between realism and idealism is set up. Physical scientists are criticized for their intellectual arrogance/imperialism, which is justified through strongly realist beliefs, and idealist critics are ridiculed for their apparently wild and poorly argued descriptions of what they think science actually is as well as their omission of reality in their theories.

The hegemony of the physical sciences

Primarily because of the success of science-driven technology, there is an enormous wealth of evidence that supports the privileging of scientific discourse over every other. This success has perhaps blinded us to the shortcomings of the scientific process and has led to an unquestioned belief that because science has successfully explained so much it can probably explain everything. Every facet of human life can supposedly be productively examined through the eyes of science. This position is commonly referred to as scientism (though practical science—as opposed to some popularized caricature of science—is not synonymous with scientism). And, though indirect evidence of these shortcomings is becoming more widespread, putting the brakes on the train of scientism is no trivial undertaking. Often the failures of science, which are considerable when we consider social planning or environmental policy, are put down to the bad application of reductionist methods rather than seeing these failures as the result of applying reductionist methods to inappropriate subject matter.

Contrary to popular belief science is not capable of considering all phenomena. In fact, it is quite inflexible in its requirements. The principal requirement that will be considered herein is that scientific methods require that the object of interest is stable, i.e., the boundaries (or, patterns) that delimit the object from the ‘background’ (the object’s complement) must be stable and assumed to be real. This stability allows repetitive examinations to be undertaken that allow the knowledge concerning that object to be refined and tested so much so that our confidence in our knowledge of that object becomes so great that we might begin to unquestionably assume that we have an accurate description to hand. In a more generic way, what I am saying is that scientific knowledge can only be obtained for contexts which are incredibly stable. This approach yields a tremendous amount of understanding that can be turned to the development of cars, computers, building methods, etc.—just about anything that can be constructed from parts that behave qualitatively in much the same way whatever context they are placed within. What about objects of interest that have far less rigid boundaries? Social systems, for example, change and evolve. The boundaries, or patterns, that describe such systems continuously change and emerge such that the extraction of uniformities is far from a trivial matter. By their very nature, the context changes and repetitive examinations are at worst impossible, and at best highly problematic. To apply reductionist science to such systems we have to fake stability. We are forced to reduce the system of interest to an idealized caricature that remains steady over time.
Of course this is what we really do when we look at any system, be it an atom or an ecology, but for some reason our reductions seem to be more harmful when considering ecologies (i.e., complex systems) as the relationship between the description that would allow a scientific analysis and a notional ‘real’ description is gaping.

These cracks in the scientific façade have been made more apparent with our ability, supported through incredible growth in computer power (and, ironically, through the dogmatic application of reductionist science) to construct models of simple2 complex systems. The emerging science of complexity forces us to revisit the nature of scientific knowledge and at the same time presents us with an alternative approach to understanding the limits of scientific methods. The interest for me personally is that, though many criticisms of science have been made by those whom the scientific community has regarded as outsiders and non-scientists, complexity science leads to a critique of science couched in the language of science itself. In a sense, the scientific language contains within it the seeds of its own limitations.

The aims and scope of this paper

The starting point for my discussion is to define what an abstract complex system is and then to assume that the Universe is well-described as a complex system at some arbitrarily deep level. From this starting point I will argue that no one discourse should unquestionably dominate all questions of knowledge, even though a purely realist foundation can still be sustained. The resulting philosophy may be called quasi-‘critical pluralism’. This position lies somewhere between the realist and idealist positions already discussed (despite its realist foundations), neither denying the potential of any particular perspective to yield useful understanding nor unquestionably privileging one select perspective over all others for all contexts. In some ways the resulting philosophy is quite empty. My hope is that quasi-critical pluralism will be seen as a natural conclusion from the realist assumptions of complexity. If, for example, postmodernist type arguments might be ‘derived’ scientifically then perhaps the current stalemate in the philosophy of science, fueled by a stubborn polarization between extremes, might be alleviated allowing an honest and humble exchange of ideas to occur.

In addition to this I would like to revisit arguments made in Richardson et al. [42] concerning the role incompressibility and system history play in the examination of complex systems, as my understanding of these topics has changed slightly. In some ways this could be seen as an unusual starting point as it assumes, like many of the writings on complexity, that the complex systems perspective, along with its associated set of tools, is legitimate. For example, it is often assumed that the complex adaptive system (CAS) view of organizations is the best. Often the justification for this assertion is the superficial similarities between the appearance of a real organization and an idealized CAS. So the paper begins with an exploration of complex systems in terms of their more common notions. However, the bulk of the second half of the paper discusses the legitimacy—or not—of utilizing any paradigm, let alone a complexity-based view (interestingly though, a complex systems view is employed to make this point). The paper could quite easily have begun in the middle with the discussion concerning the legitimacy of ontological shifts, and then moved on to discuss the details of the prevailing complex systems perspective.

What is a complex system?

A complex system is comprised of a large number of non-linearly interacting non-decomposable elements.

This is the simple definition of a complex system that is often given and is very similar to Langefors’ [28: 55] definition of a general system:

“A system is a set of entities with relations between them”

or, Van Gigch’s [50: 30] definition:

“A system is an assembly or set of related elements.”

If ‘non-linearly’ is inserted before ‘related elements’ then we end up with a very similar definition to the one given at the beginning of this section. As Backlund [3] has pointed out, these sorts of definitions are incomplete. To ensure that the system we are interested in cannot simply be reduced to two weakly interacting systems, we must also add that the system’s components are connected in a way that prevents our system of interest being reduced to two or more distinct systems.

In addition to this particular shortcoming, the connectivity of the system must be such that the system displays behaviors associated with complex systems before it can be labelled as such. For example, as we shall discuss in the next section, complex systems display emergent properties, as there exists a non-trivial relationship between the system components and the system’s macroscopic properties. In my past endeavors to understand complex systems I have tried to develop, on a number of occasions, a typology that would clearly distinguish between complex and complicated systems; one that would hold up to scrutiny. One particular way of doing this is to regard complicated systems as much the same as complex systems except not having appropriate connectivity to display complex behaviors, such as emergence. However, when trying to conceive of a boundary between these two categories one quickly finds that it is very hard indeed, if not impossible, to develop a sound division that could be applied in all cases. What I quickly came to conclude was that the division between complicated and complex depended critically upon how the system was connected. Depending upon how the complicated system was put together, it may be that only a few new connections would be sufficient to transform it into a complex system, or, maybe a relatively large number of connections would be required to make the complicated system display emergence, for example. It should be noted that the complicated/complex distinction is not equivalent to the commonly used linear/non-linear distinction. Complicated systems may contain many non-linear interactions (e.g., computers are commonly regarded as examples of linear systems despite the fact that they comprise a vast number of non-linear responsive components such as transistors); they may even display limited non-linear behaviors. The key difference between the two is the absence of ‘novelty’ in complicated systems3.
Complex systems can emerge into states that are not apparent from their constitution; in a sense new states are created4.

The principal difference between complicated and complex systems is the presence of causal loops. For a system to be complex it must be connected in such a way that multiple causal loops are present that themselves interact with each other. So it is the qualitative design of the connectivity that allows, or not, complex behaviors. But again, the determination of a qualitative universal design process is problematic, and to my mind, an impossible undertaking in any complete sense. I believe that the best we can hope for is a method that would allow investigators to identify the causal loops that are primarily responsible for enabling complex behavior, for a particular system only, during a particular time period. From this, investigators could identify ways in which the system could be manipulated to be complicated or complex. But, it is important to bear in mind that such tests would only work for idealized and well-described systems. The benefits that such a test would bring to our understanding of real life systems are not at all clear cut. The difficulties confronting the design of such a testing apparatus will become clear as the paper progresses.
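For an idealized and fully specified system, the identification of causal loops described in this paragraph can at least be sketched. The Python fragment below is an illustration only and is not drawn from the original discussion: the influence graph, its node names, and the function `find_causal_loops` are hypothetical, and a depth-first enumeration of simple directed cycles is just one technique that could serve. It assumes that the components and their influences are completely known, which is exactly the condition that real-life systems rarely meet.

```python
def find_causal_loops(influences):
    """Enumerate the simple directed cycles of a small influence graph.

    `influences` maps each component to the components it directly affects.
    Each loop is reported once, starting from its smallest node label.
    This is a hypothetical helper for illustration, not an established API.
    """
    loops = []

    def extend(start, node, path):
        for nxt in influences.get(node, ()):
            if nxt == start:
                # The path has returned to its origin: a causal loop.
                loops.append(path[:])
            elif nxt > start and nxt not in path:
                # Only visit larger labels so each loop is found exactly once.
                path.append(nxt)
                extend(start, nxt, path)
                path.pop()

    for start in sorted(influences):
        extend(start, start, [start])
    return loops


# A toy 'complex' wiring: two loops that share the components a and b.
looped = {"a": ["b"], "b": ["c", "a"], "c": ["a", "d"], "d": []}

# A toy 'complicated' wiring: the same components arranged as a chain.
chain = {"a": ["b"], "b": ["c"], "c": ["d"], "d": []}

print(find_causal_loops(looped))
print(find_causal_loops(chain))
```

In this toy case the two loops overlap, which is a crude stand-in for loops that "interact with each other", and removing the two feedback connections leaves a wiring with no loops at all. Nothing in the sketch says whether a given set of loops is sufficient for complex behavior; that determination remains problematic, as argued above.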

From this brief discussion of the possible definitions of a complex system, it is clear that they are insufficient (after all, the notion of incompressibility explicitly denies the completeness of any definition—Richardson et al. [42]). In an attempt to address these shortfalls we might rewrite the initial definition of a complex system as:

A complex system is comprised of a large number of non-linearly interacting non-decomposable elements. The interactivity must be such that the system cannot be reducible to two or more distinct systems, and must contain a sufficiently complex interactive mixture of causal loops to allow the system to display the behaviors characteristic of such systems (where the determination of ‘sufficiently’ is problematic).5

A rather circular definition possibly? So a complex system is a (topologically complex) system that displays complex behavior! Despite its circularity, which highlights the problematic nature of defining complex systems, this will be the definition assumed from here on, albeit in a loose way.

The relationship between systems and complex systems is not easily unraveled. If we take the above definitions we could argue that a complex system is a special kind of system as by definition the relationships between its parts are non-linear, whereas the nature of the relationships in the definition of a system is unspecified. However, it could also be argued that a system is a special kind of complex system because one cannot get non-linear behavior from a linear system, but linearity from non-linearity is possible as a special case. It would seem that a general complex system is very broad, as all types of systems are potentially accounted for beneath its definitional scope. However, it could also be argued that non-linear and linear are not the only types of relationship (though possibly in a mathematical sense they are) and the general system definition is more general. I think that it is difficult, if not futile, to attempt to decide which is the more generic definition. The reason I would like to be able to distinguish between the two, though, is to get a feel for the differences between the general systems community and the complex systems community. Increasingly I feel that these are irrelevant concerns, and that the two communities have almost identical aims though they use rather different tools to fulfil those aims.

One way in which I personally distinguish between the two is that general systems theorists traditionally search for homologies in nature, whereas the complex systems community (in my opinion) fully acknowledges the problematic nature of such a goal (because of the potential of chaos to make two similar contexts evolve utterly differently). Another way to express the difference between the two communities would be to say that general systems theorists celebrate similarity, whereas complex systems theorists, or complexologists, celebrate difference; a distinction, incidentally, which is often used to differentiate in broad terms between modernists and postmodernists. The position developed herein begins with the assumption that the Universe is the only complex system (as defined above), the coherent whole, and so everything else is a manifestation of nature’s inherent underlying complexity: everything, without exception, is complex.

Complex system’s behavior

Complex systems as defined above display some very interesting well-popularised behaviours. The two most dominating forces within a complex system are the forces that push the systems towards chaotic behaviour and those that encourage self-organisation; a fight between disorder and order, if you like, much akin to Anaximander’s notion of elements in conflict (“They ‘pay penalty and retribution to each other for their injustice according to the assessment of Time’”, Gottlieb [19: 9]). I tend to argue that despite this apparent tension chaos is actually a result of self-organisation, i.e., a complex system can self-organise into a structure that leads to a chaotic mode of behaviour (hinting perhaps at the possibility of higher-order parameters); it does not follow that self-organisation necessarily leads to order.

In previous articles (see Richardson et al. [41] or Cilliers [10], for example), it has been suggested that complex systems display the following characteristics:

- Their current behavior depends upon their history;

- They display a wide range of qualitatively different behaviors;

- As already mentioned, the system’s evolution can be incredibly sensitive to small changes as well as being incredibly resilient to large change (and all possibilities in between);

- Complex systems are incompressible, i.e., it is impossible to have an account of a complex system which will predict all possible system behaviours6.

I would like to revisit these observations herein as my position has subtly changed since Richardson et al. [41].
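The third characteristic listed above, sensitivity to small changes alongside resilience to large ones, can be illustrated with a deliberately simple stand-in. The sketch below uses the logistic map, a one-variable textbook model that is not a complex system in the sense defined in this paper and is not discussed in the original text; the function names, parameter values, and starting points are all assumptions chosen for illustration.

```python
def logistic_orbit(x0, r, steps):
    """Iterate the logistic map x -> r * x * (1 - x) and return the orbit."""
    x = x0
    orbit = [x]
    for _ in range(steps):
        x = r * x * (1.0 - x)
        orbit.append(x)
    return orbit


def max_gap(first, second):
    """Largest pointwise distance between two orbits of equal length."""
    return max(abs(p - q) for p, q in zip(first, second))


# Sensitive regime (r = 4): two starts that differ by one part in a billion.
sensitive_a = logistic_orbit(0.2, 4.0, 100)
sensitive_b = logistic_orbit(0.2 + 1e-9, 4.0, 100)

# Resilient regime (r = 2.5): two very different starts settle on 1 - 1/r = 0.6.
resilient_a = logistic_orbit(0.1, 2.5, 100)
resilient_b = logistic_orbit(0.7, 2.5, 100)

print("largest gap in the sensitive regime:", max_gap(sensitive_a, sensitive_b))
print("final values in the resilient regime:", resilient_a[-1], resilient_b[-1])
```

The same rule therefore amplifies a tiny difference in one regime and erases a large one in another. In a complex system both tendencies may be present at once, and which of them dominates is not generally known in advance.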

System history7

In Richardson et al. [41], it was argued that a key distinction between complex and complicated systems was that history was of more importance when considering complex systems than it is for complicated systems. The example given as a complicated system was a silicon-based computer, and a social system was given as an example of a complex system. I feel that some comment concerning the role of history as an analytical tool is necessary. The main difference between these two examples is that, for a computer, it is more or less a straightforward issue to determine what its current state is. History as an analytical tool is less important in this case because an analyst could easily account for history’s effect on the computer by directly examining its current state, or configuration (e.g., what software it is currently running).

However, if we take a social system it is nigh on impossible to get such an accurate appreciation of its current state. If we could view its current state directly its future evolution would be quite easy to ascertain (although difficulties associated with non-linearity would still place significant limits on our predictive powers). However, we cannot ever know the current state of each comprising individual (which would require a complete representation of their current epistemic state that would allow us to predict how each person would respond to particular stimuli) and the detailed form of the interrelationships. So, to obtain a clearer picture, or even a very basic picture, of the current state we can examine the system’s history to indirectly develop an idea of what the current state of the system is, thus allowing an informed, though very approximate, prediction to be made about its future. History as an analytical tool is important simply because complex systems are often too opaque to the eyes of the observer; whereas direct observation yields a very accurate picture of a complicated system, such approaches yield little when considering complex systems and so indirect historical methods can be very useful indeed. However, the interpretation of historical data or texts is always open to question, so an appreciation of the current state of a social system based upon its past behavior is always incomplete and biased. It follows that any predictions made from a historical ‘model’ will also be incomplete and biased.

seem inappropriate to talk about it. However, history can be introduced in at least three ways. Firstly, we could mention it explicitly in our definition by suggesting that each non-decomposable entity retains some memory of its past. Secondly, we can recognize that the currently observed state (i.e., component states and overall structure) of any complex system is a direct result of its past and so in some sense the past is reflected in the current—this introduces the notion of a system level memory that is not explicitly coded into the system’s components. Lastly, we can recognize that we can never actually have a direct and complete representation of any complex system and that it is our abstractions of a particular system that introduce the idea of local memory. In this case, and as strange as it may seem, memory is not a real feature of any complex system at the level of description offered by the definition above, but a useful concept to help us create useful partial representations. As we shall see later in the paper, quasi-entities can emerge that can exhibit quite different properties from those exhibited by the system’s fundamental components. These quasi-entities can behave in such a way as to appear as if they indeed have explicit memory. So, in short, even if memory is not included in our baseline definition of a complex system, a variety of memories can emerge from within the system in the form of sophisticated quasi-entities.

The role of indirect historical evidence in the development of a limited understanding of the current state of a complex system does not wholly replace the role of direct observational evidence. The argument is simply that history, as an analytical tool, offers another route to the understanding of complex systems over and above that offered by direct methods, whereas for complicated systems a historical analysis would offer little (unless of course you are interested in the overall evolution of computer design (which would be a complex system) rather than the functioning of one particular computer)8.

Qualitatively different behaviors and scale independence

As already suggested herein and elsewhere (see Allen [2], for example), complex systems display a variety of qualitatively different behavioral regimes. However, it should be noted that this phenomenon is scale-dependent, i.e., the possible qualitatively different behaviors that one might observe depend upon the scale, or level, one is observing. For example, and it is an extreme example, if one could view a social system down at the level of individual quarks (almost at the ‘bitmap’ level of reality) it would be hard, if not impossible, to recognize the qualitatively different behaviors that manifest at the human scale. So the emergence of (quasi-) hierarchical levels helps considerably when trying to understand complex systems (a topic that will recur throughout this paper).

If one attempted to draw a (snapshot of a) phase space portrait of a complex system, it would only be a representation of the possibilities that might occur at one particular level of aggregation/abstraction. Not only will different phase portraits exist at different levels of aggregation/abstraction, but as the different levels interact with each other (which again is scale-dependent) the phase variables (also called order parameters) that are relevant for the construction of a particular phase portrait at a particular level will change, i.e., different phase variables might best reflect the current state and future possibilities of the system than ones that might have previously characterised the system. Not only does the quantitative nature of the state variables change, but the qualitative nature changes also.

The existence of (quasi-) levels, which we shall return to, certainly facilitates the development of understanding for a complex system. It has been argued that each level displays a substantial realism [16] meaning that there exist solid representations of aspects of complex systems that need not include the whole system; an argument for some kind of soft reductionism maybe? (But, I do not want to give the whole story away too early!). I think that this is a very important point as many of the complexity writings I have come across trivialize the process by which it is decided whether or not a particular system is complex, or can be legitimately treated as such. It is also rarely acknowledged that even a complex systems description is still a bounded description—an idealization—and therefore still very much reductionist in nature.

Chaos versus anti-chaos

The perturbations that might cause a reaction within a complex system of interest come from outside that system. This is an important point. When analysts build models of organisations, for example, the key implicit assumption that is made is that the decision-maker is outside of the organisation of interest, i.e., a subject–object dualism is implicitly maintained. Analysts, consultants, decision-makers, etc. look at the system of interest, attempt to understand its current state, and use the resulting model to make predictions that support a particular course of action. In this approach, the analyst, decision-maker, etc. is assumed to be outside the system looking in, trying to push it in a particular direction. Change can come from two general quarters, from within and from without. Change from within is emergent and often inevitable if perturbations from the outside do not act to affect (via changing component relationships) the emergent process. Change from without comes as a reaction to these external perturbations. The two are not independent of each other.

Whether change comes from within or from without, the overall change to the system is problematic to determine. The overall system’s behavior might be radically affected, or the system might absorb any attempt to change and continue relatively unaffected. However, it is important to remember that attempts to change the system (failed or not) may result in delayed changes despite no apparent immediate reaction. Who knows what chain of events might have been triggered; the seeds for a new possibility might have unintentionally been sown.

This distinction of being ‘inside’ or ‘outside’ the system will be revisited later. It certainly raises some interesting issues regarding how useful one can be when outside the system, and the relationship between ‘outsider’ and ‘insider’ knowledge.

Furthermore, given that the fundamental assumption of this paper is that there is only one coherent complex system (the Universe), how can we even justify the seemingly innocent assumption that other systems exist that can be treated as such? The belief that systems do in fact exist (such as an organization) in interaction with an environment that can be analyzed as such appears on the surface to be such a plainly obvious assumption to make; so obvious in fact that it is rarely considered to be anything more than common sense.

Incompressibility

Bottom-up limitations

In Richardson et al. [42], it was argued that incompressibility is the ‘showstopper’ for a theory of everything, or a comprehensive theory of complexity, and is the key reason in support of a quasi-‘critical pluralist’ philosophy. However, in presenting this work at recent seminars it was argued that if we consider a cellular-automata-type experiment, for example, then it is a trivial matter to have a complete description of such a complex system. How can the importance of the incompressibility of complex systems be maintained if in fact completely describable complex systems do exist? In taking the example of the cellular automata experiment in which everything about the composition of the complex system is readily known, we can say that complex systems are incompressible in behavioral terms but not necessarily in compositional terms. So what makes such an idealized complex system otherwise incompressible? The showstopper is (computational) intractability, i.e., the inability to predict all future states of the system, despite complete compositional knowledge, without running the system itself. There is no algorithmic shortcut to a complete description of the future. Wolfram [52: 735] suggests that “[c]omputational reducibility may well be the exception rather than the rule,” and that for irreducible (incompressible) systems “their own evolution is effectively the most efficient procedure for determining their future” [52: 737]. This is very similar to Chaitin’s definition for a random number series: “A series of numbers is random if the smallest algorithm capable of specifying it to a computer has about the same number of bits of information as the series itself” [7: 48]. This seems to imply that incompressibility is very closely related to Chaitin’s notion of randomness. Does this mean that if a complex system is incompressible then it is random? In a sense the answer is yes.
But, whether a complex system is random or not depends strongly on one’s tolerance for noise. If one demands complete understanding, then the system of interest needs to be expressed in its entirety and knowledge could only be obtained by running the system itself. However, if one was less stringent in one’s toleration of ‘noise’9 then maybe patterns could be found that in a rough and incomplete way would allow for a description to be extracted that would indeed be less than the total description and yet still contain useful understanding. Again, we will return to this issue later.
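The link between incompressibility and Chaitin’s notion of randomness can be illustrated with a rough sketch. The example uses a general-purpose compressor (`zlib`) as a crude, computable stand-in for “the smallest algorithm capable of specifying” a series. This substitution is an assumption made purely for illustration: a compressor only gives an upper bound on the length of the shortest description. The function name `compression_ratio` and the two test series are likewise hypothetical.

```python
import random
import zlib


def compression_ratio(data: bytes) -> float:
    """Compressed size divided by original size (a crude proxy, not Chaitin's measure)."""
    return len(zlib.compress(data, 9)) / len(data)


# A highly patterned series: the same two values repeated 5000 times.
periodic = bytes([0, 1] * 5000)

# A noisy-looking series drawn from a seeded pseudo-random generator.
rng = random.Random(0)
noisy = bytes(rng.getrandbits(8) for _ in range(10000))

print("periodic:", round(compression_ratio(periodic), 3))
print("noisy:   ", round(compression_ratio(noisy), 3))
```

Note that the noisy series comes from a seeded generator, so a very short description of it does exist (the generator plus its seed) even though the compressor cannot find it. This mirrors the distinction drawn above: a system may be compressible in compositional terms while its behavior resists any shortcut.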

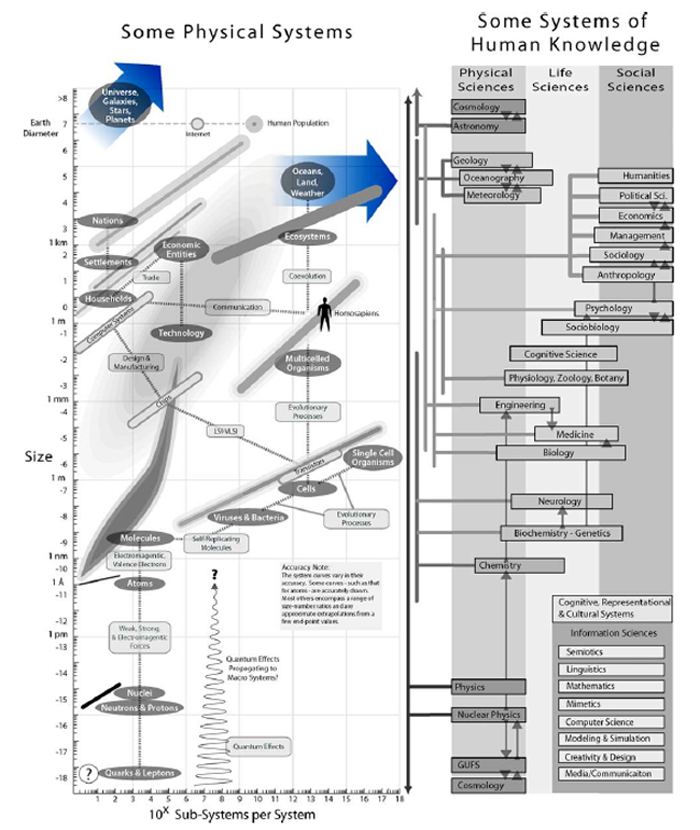

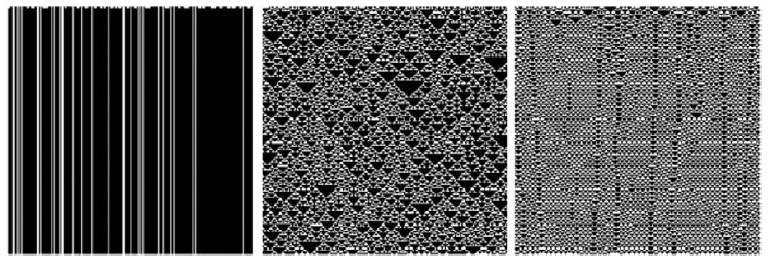

Fig. 1. The reality of hierarchies versus the hierarchy of the natural sciences. (© 2005 Marshall Clemens, www.idiagram.com—used by kind permission.)

An example of the type of incompressibility10, or intractability, described above is the hierarchy of the sciences (Fig. 1). Chemists, for example, provide a description of reality at the molecular and molecular-complex level. Physics traditionally sits below chemistry as being the more fundamental science considering the constituents of molecules, namely, atoms, quarks and maybe superstrings; chemistry supposedly emerges from physics. However, there is a lot more physics in chemistry than physicists actually know about. The ability to bootstrap from physics to chemistry is well beyond current science. The problem is compounded further if we try to bootstrap from chemistry to biology, which deals with cells and multi-cellular entities. As Douglas Hofstadter [21] remarks in Gödel, Escher, Bach “a bootstrap from simple molecules to entire cells is almost beyond one’s power to imagine” [21: 548]. As we move up the hierarchy, more and more of the noise of absolute reality is omitted. In the absence of a complete bootstrapping method, it is simply assumed that the ontology that each science is based upon is legitimate in itself and that the lower levels can be approximately ignored without too much loss of noise. Bottom-up computer simulations approach their subject matter in more or less the same way. An ontological position is assumed, i.e., the nature and form of the basic building blocks are determined, and the macroscopic behaviour that emerges is deemed more life-like because it emerges from a lower-level ontological commitment; on the surface the models look more like reality, so the conclusions must therefore be more robust. But, how secure are their ontological foundations? As with those of the traditional sciences, the strength and basis of these ontological commitments will be explored later. An extended discussion of the nature of hierarchies from a complex systems perspective is offered in Ref. [40].

Top-down limitations

Even if we can have complete compositional knowledge of a complex system (observable at the human level of existence), intractability places some insurmountable limitations on our possible behavioral knowledge. What limitations are placed on us if we do not even have good compositional knowledge? We might begin by viewing the system at a higher level. At higher levels we can recognize alternative sets of interacting entities that ‘exist’ (or are recognizable as such) only at higher levels. For example, we would be insane to investigate organizations at the level of quarks, but we can make good progress by considering employees to be the fundamental building block. It must be remembered, however, that these higher-level entities are emergent aggregates of those ‘existing’ at a lower level. As such, our higher-level abstractions inherently cannot help but miss something out. These abstractions approximate the complex system by assuming the absolute existence of higher levels and therefore higher entities despite the fact that these higher ‘beings’ emerge from the lower levels; the higher-level existence is assumed to be the emergent properties of the level beneath, which is in turn an emergent property of the level beneath that and so on until the fundamental components (superstrings?) are reached.

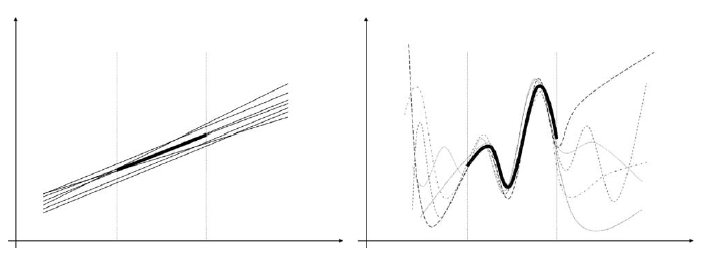

Let us assume for a moment that we could speculate as to the nature of the entities and interactions at the level beneath the level we might be interested in (e.g., we might be interested in how an organization functions, so we build an agent-based model that assumes the employees represent the level beneath the organizational level). If we could achieve this then we could construct a model (a computer-based agent model, for example) of the lower (employee) level, which would have as its emergent properties the next higher (organizational) level. We could then speculate as to the composition of the layer below (multi-cellular?) the lower (employee) level, which would again have as its emergent properties the (employee) layer above. In principle we could continue this process all the way down to the bottom level comprised of the absolutely fundamental components (superstrings?) and relationships. However, how could we be sure that at each stage we had selected the correct abstraction? The only supporting evidence we would have would be limited empirical evidence. But, because of the nature of non-linearity there is a huge number of ways to abstract a problem in such a way that will easily be confirmed by our limited empirical evidence, i.e., there is one way to ‘curve-fit’ a linear problem (assuming a fixed number of dimensions) but there is an infinite number of ways to curve-fit a non-linear problem (see Fig. 2). So, the idea that agent-based modelers have better models (and therefore more knowledge) than other modelers is severely flawed, as in principle there is an infinite number of microscopic sets (i.e., members of the lower level) that would lead to, for a certain range of contexts, the desired macroscopic properties (i.e., the level above). As such we still cannot be sure that our knowledge is transferable to any other contexts except those already observed. 
There is no doubt that agent-based models (which for most intents and purposes are simply advanced versions of simple cellular automata experiments) are an advanced method of analysis, and the analyst’s toolset is significantly enhanced by their inclusion, but we should not get too carried away with the suggestion that they are better models of reality. Their similarities with ‘perceived’ reality might run very shallow indeed.11

Fig. 2. Linear versus non-linear. In both illustrations the thick line/curve within the two vertical lines represents some set of empirical data for an observed range of contexts. The other lines/curves represent predictive data based on different possible theoretical explanations for those empirical data, and extend beyond contexts for which such empirical data exists. The figures illustrate the difficulties in extending current explanations, or theories, of known contexts to new, as yet unobserved, contexts.
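The curve-fitting point can be made concrete with a small sketch. The two models here (`model_a` and `model_b`, with an arbitrary coefficient `c`) are hypothetical and are not the curves drawn in Fig. 2. They agree exactly on every observed context yet diverge sharply outside the observed range, and every other value of `c` yields yet another model that fits the observations equally well.

```python
# Contexts for which we have (hypothetical) empirical data.
observed_x = [0.0, 1.0, 2.0, 3.0]


def model_a(x):
    """A simple linear explanation of the data."""
    return 2.0 * x + 1.0


def model_b(x, c=0.5):
    """A non-linear rival: identical to model_a at every observed context,
    because the added term vanishes at x = 0, 1, 2 and 3."""
    bump = c * x * (x - 1.0) * (x - 2.0) * (x - 3.0)
    return model_a(x) + bump


observed_y = [model_a(x) for x in observed_x]

# Both explanations are perfectly confirmed by the observations...
for x, y in zip(observed_x, observed_y):
    print(x, y, model_a(x) == y, model_b(x) == y)

# ...but they disagree as soon as we leave the observed range.
print("gap at x = 5:", model_b(5.0) - model_a(5.0))
```

The empirical evidence alone cannot select between the two, which is why confidence in a model is limited to the contexts already observed.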

So back to our basic question: “how do we know we’ve chosen the right abstraction?” The short answer is that “we can’t.” But this is not a showstopper by any means for analysis, science, or any intellectual endeavor.

In short, the limits to understanding complex systems come not only from our inability to bootstrap from one level to another in either direction, but also from the fact that the only complete description (if that is what we demand) must be constructed from absolutely the bottom-up (i.e., from universal superstrings upwards) rather than from the top, or middle, down. This does not deny the possibility of developing useful and relatively robust knowledge from starting points other than the consideration of everything. In a simple cellular automata experiment, for which perfect compositional knowledge is known, the future development can only be determined fully if the model itself is run. If we start with limited knowledge of some future development we can never be sure that a model obtained by working backwards will be accurate; we must have complete knowledge to build a *complete* model, which is a theoretical as well as a practical absurdity.

We will revisit this topic when we come to consider the Universe as such a cellular automata experiment and the limits of scientific knowledge, as well as all other forms of knowledge.

In the argument presented thus far, there has been an undercurrent emerging concerning the nature of boundaries, i.e., the ontological status of boundaries, structures or patterns. Further exploration of the nature of boundaries, and therefore the ontological status of ‘objects’ or ‘entities’, will be the foundation from which an evolutionary philosophy will be constructed and legitimized.

The ontological status of boundaries

This section will discuss the nature of boundaries in a complex system, or the ontological status of objects/entities. Initially, the nature of boundaries from a spatio-temporal perspective will be discussed, followed by an exploration of the boundary concept from a phase space perspective. These two perspectives will then be used to argue for a position in which no boundaries really exist in a complex system (except those defining its comprising components), but in which a distribution of boundary (structure) stabilities exists that legitimates a wide range of paradigmatically different analytical approaches (without the rejection of natural science methods). However, in this part of the analysis assumptions are made about the efficacy of variables and their non-linear interrelationships that are not necessarily justified. In what seems to be a back-to-front way, the second part of this section will briefly consider how it is that we can even make such claims about the relationship between modelled boundaries and natural boundaries. The discussion here will be brief, as it will be expanded upon in the section that considers the Universe as a vast cellular automata experiment. I hope that the reason for presenting these ideas in a seemingly back-to-front manner will also become clear.

Emerging domains

If one were to view the spatio-temporal evolution of an idealized complex system, one would observe that different structures, or patterns, wax and wane. In complex systems different domains can emerge that might even display qualitatively different behaviors from their neighboring domains. A domain is simply defined herein as an apparently autonomous (possibly critically-organized) structure that differentiates itself from the whole (i.e., it stands out from the noise). The apparent autonomy is illusory though. All domains (patterns) are emergent structures that persist for undecidably different durations. A particular domain, or structure or subsystem, may seem to appear spontaneously, persist for a long period and then fade away. Particular organizations or industries can be seen as emergent domains that are apparently self-sustaining and separate from other organizations or industries.

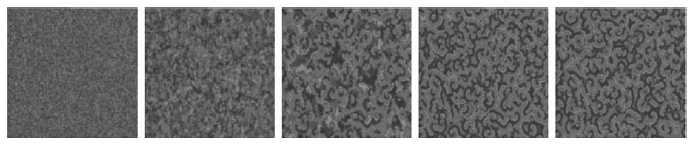

Fig. 3 illustrates the spontaneous emergence of order in a simple complex system (the mathematical details of which are not relevant for this discussion). Different domains emerge whose ‘edges’, or boundaries, change and evolve as the system evolves. Though a snapshot of the system’s evolution would show clear structures, it would be wrong to assume that such structures were a permanent and real feature of the system; the structures are emergent and temporary.

Fig. 3. The emergence of domains (or sub-systems).

Though it is argued here that all boundaries are emergent and temporary, some boundaries may persist for very long periods. For example, the boundaries that delimit a proton (which is arguably an emergent manifestation of the combined interactive behavior of quarks, or superstrings) from its complement persist for periods theorized to be longer than the current age of the Universe (possibly > 10^33 s), after which the boundaries decay (through the emission of an X particle) and a new set of boundaries emerges (a positron and a pion, which then decay into three electromagnetic showers). Not all boundaries are so persistent and predictable in their evolution. The boundaries that describe an eddy current in a turbulent fluid (which could be seen as the emergent property of the liquid’s constituent molecules) are short-lived. Most boundaries of interest in our daily lives exist somewhere in between these two extremes. The boundaries that define the organizations we work within, those (conceptual) boundaries that define the context(s) for meaning, and the boundaries that define ourselves (both physically and mentally) are generally quite stable with low occurrences of qualitative change, although quantitative change is ubiquitous.

It is also important to remember that the observation of domains, and their defining boundaries, depends upon the scale, or level, one is interested in (which is often related to what one wants to do, i.e., one’s purpose).

An example of persistent boundaries and resulting levels again comes from the natural sciences, and has obvious direct connections with the hierarchy of sciences discussed earlier. The hierarchy of quarks→bosons and fermions→atoms→molecules→cells→etc. is very resilient (especially at the more fundamental scales). Choosing which level to base our explanations upon is no easy task, particularly as any selection will be deficient in some way or another (refer back to the discussion on top-down or bottom-up representation).

At the level of quarks (even if we could directly observe that level), say, it would be difficult to distinguish between two people, though at the molecular level this becomes much easier, and at the human level the task is beyond trivial (though we are increasingly at risk of believing what we see is what there is simply out of habit). The level taken to make sense of a system depends upon the accuracy required or the practically achievable. Organizations (economic domains or subsystems) are very difficult, if not impossible, to understand in terms of individuals so they are often described as coherent systems in themselves with the whole only being assumed to exist12.

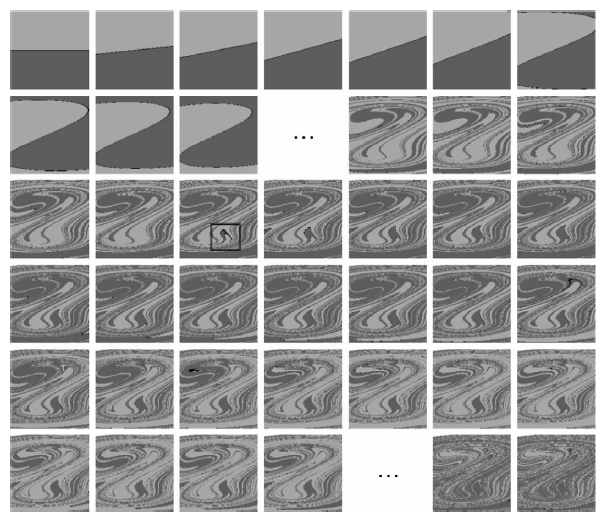

Fig. 4. The emergence of qualitatively stable behaviours.

In short, the recognition of boundaries is problematic and is related to the level of aggregation (scale) we choose to view or are capable of viewing.

Evolutionary phase spaces

The emergent domain aspect of complex systems is complexified further when the behaviors of different domains are included. Let us assume for the moment that we are interested in a particularly stable domain; a particular organization for example. We might perform some kind of analysis, a cluster analysis for example, that allows us to extract or infer, in a rough and incomplete way, a number of order parameters (i.e., parameters that when changed, change the domain’s behavior) and their interrelationships that seem to characterize the observed domain’s behavior. We can then draw a picture of the domain’s phase space, which will provide information regarding the qualitatively different modes of behavior of that domain for varying time. Fig. 4 shows the evolution of such a phase space for a very simple idealized non-linear system. The two main variables are position (y-axis) and velocity (x-axis) and the two dominant shades represent the two main attractors for this system (black represents an unstable equilibrium attractor). So on the first snapshot (taken at time=0), depending on what the initial values of the order parameters are, the system is either attracted to the attractor represented by the light grey or the attractor represented by the dark grey.

The succeeding snapshots show how the phase space evolves, with the two qualitatively different attractor spaces mixing more and more as time wears on. What we find for this particular system is that, though we know that there are two distinct attractors, after a relatively short period the two attractor spaces are mixed at a very low level of detail indeed. In fact the pattern becomes fractal, meaning that we require infinite detail to know what qualitative state the system will be in. Even with qualitatively stable order parameters qualitatively unstable behavior occurs (see, for example, Kan [26]). These are referred to as ‘riddled states’, or ‘riddled basins of attraction’ (Sommerer and Ott [46]).
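The idea of mapping which attractor each starting point leads to can be sketched in a few lines. The system used here, a damped particle in a double-well potential, is a stand-in chosen for illustration and is not the system shown in Fig. 4; the function names, damping value, step size and grid resolution are all assumptions. Its basins are smooth rather than riddled, so the sketch only illustrates what a basin map is, not the fractal mixing described above.

```python
def final_well(x, v, damping=0.2, dt=0.02, steps=5000):
    """Integrate x'' = x - x**3 - damping * x' and report where the state settles:
    +1 for the well near x = +1, -1 for the well near x = -1,
    0 if it remains on the unstable equilibrium at x = 0."""
    for _ in range(steps):
        accel = x - x**3 - damping * v
        v += accel * dt  # semi-implicit Euler: update velocity first,
        x += v * dt      # then position using the new velocity
    if abs(x) < 1e-6:
        return 0
    return 1 if x > 0 else -1


def basin_map(n=15, extent=2.0):
    """Classify an n-by-n grid of initial conditions.
    Rows are initial positions, columns are initial velocities."""
    axis = [-extent + 2.0 * extent * i / (n - 1) for i in range(n)]
    return [[final_well(x0, v0) for v0 in axis] for x0 in axis]


symbols = {1: "+", -1: "-", 0: "0"}
for row in basin_map():
    print("".join(symbols[cell] for cell in row))
```

Each printed character records the qualitative fate of one initial condition, which is the information that the shading in a phase portrait conveys.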

Despite this continuous mixing of states, stable areas of phase space do emerge and persist. Fig. 4 shows an example of this by highlighting the emergence of a stable region that persists to the end of the modelled evolution. This is of interest because it demonstrates that not only is quantitative prediction problematic but that qualitative prediction is also problematic (as opposed to being impossible, which is what a number of naïve chaoticians claim). But remember that the example given is for a stable domain with qualitatively stable order parameters. For a domain that is an emergent property of a complex system having other emergent neighbors, the order parameters will not necessarily be qualitatively stable. The defining order parameters might be qualitatively unstable. (This demonstrates that the order parameters are simply trends that offer a superficial (though often useful) understanding of any real system of interest.) The evolution of these phase variables will depend upon the interaction between the neighboring domains, which is a manifestation of causal processes at the lower levels (an argument for meta-order parameters perhaps). This introduces non-trivial difficulties for any observer’s attempts to make sense, i.e., derive robust knowledge. The fact that such change is not random, with the existence of stable structures as well as behaviors, means that the possibility of deriving useful understanding is not wholly undermined.

Before moving on to briefly consider simple cellular automata and Conway’s Game of Life, a terminological link must be made between the above discussion on boundaries/domains and objects/entities if it is not already apparent. Domains are objects or entities. In this analysis a proton, a tree, a car, a nation state, are legitimate objects that are identified as being persistent and apparently autonomous phase space (as well as spatio-temporal, which is how they are ordinarily recognized) domains or patterns.

But, and it is an important but, how can we justify not only this leap from persistent structures to everyday objects but also that phase variables or order parameters should have any basis in the real world at all? This directly addresses the issue of whether mathematics, or any structured (not necessarily formal) language, has any rights at all in claiming that it can be used to represent all of reality. To explore this concern we turn to cellular automata—the simplest form of all possible complex systems.

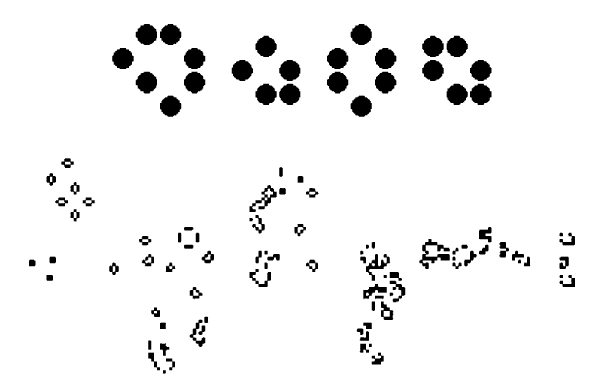

Cellular automata

It is not my aim to provide a course in cellular automata (CA) herein, but a brief introduction may prove useful. In a cellular automata world every ‘pixel’ is described and accounted for. No shortcuts are taken to approximate the CA’s overall behavior; everything is described and modelled in exact detail. Fig. 5 depicts the evolution of a selection of simple 1-dimensional CA worlds. The first line in each image depicts the starting point for the CA world; every point in that world is described completely. In these examples each world consists of 200 entities that can each take on a black or white state and are each related to their neighborhood through a very simple (non-linear) rule, the fundamental ‘Law of Physics’ for that world; there are no hidden variables or suchlike. Each subsequent line shows how each world evolves as the interaction rule is applied. What is represented in each image is an exact history of each world (up to an arbitrary point). We might refer to these images as bitmap (BMP) images of these worlds, as they contain all there is to know about each world; they are complete descriptions. Whereas a JPEG description would employ an algorithm to compress the images (i.e., extract trends) via some mathematical shorthand, a bitmap image contains complete and perfect information for each and every element of the CA world13.

Fig. 5. Examples of 1-d cellular automata worlds.
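A minimal sketch of such a 1-d CA world follows. The text does not say which rules or starting rows produced Fig. 5, so several choices here are assumptions: the standard Wolfram numbering for two-state, nearest-neighbor rules, rule 30 as the example ‘Law of Physics’, a wrap-around boundary, and a single black cell as the starting row. The function names `eca_step` and `eca_history` are hypothetical. Only the world size of 200 entities comes from the description above.

```python
def eca_step(cells, rule):
    """Apply one tick of a two-state, nearest-neighbor rule (Wolfram number 0-255).
    The row wraps around at its edges (an assumed boundary condition)."""
    n = len(cells)
    nxt = []
    for i in range(n):
        left, centre, right = cells[(i - 1) % n], cells[i], cells[(i + 1) % n]
        index = 4 * left + 2 * centre + right  # neighborhood read as a 3-bit number
        nxt.append((rule >> index) & 1)        # look up that bit of the rule number
    return nxt


def eca_history(start, rule, steps):
    """Return the exact history of the world: the 'bitmap' description."""
    history = [list(start)]
    for _ in range(steps):
        history.append(eca_step(history[-1], rule))
    return history


WIDTH = 200
start = [0] * WIDTH
start[WIDTH // 2] = 1  # a single black cell in an otherwise white world

history = eca_history(start, rule=30, steps=100)
for row in history[:20]:
    print("".join("#" if cell else "." for cell in row))
```

Every row of `history` is stored in full, so nothing is approximated; the only way to obtain row 100 in this sketch is to compute rows 1 to 99 first.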

There are two very important points to be noted regarding such CA worlds. The first is that changes in the initial distribution of ‘blacks’ and ‘whites’ have little effect on the qualitative evolution of the world’s history. The rule of interaction almost completely determines the structure that emerges. Even if the initial conditions were random, the qualitative evolution would be completely unaffected, i.e., the long-term evolution of these CA worlds is quite independent of their starting conditions.14

Secondly, and possibly more importantly, the reader may notice that for a particular world, different groupings, or entities, emerge; entities that contain 10 black pixels in a row, for example. Not that it is easily appreciated from the examples given, but it is possible to extract some sketchy properties for these entities allowing a rough appreciation of how they might interact15. How can this be? How can we assign properties, however rough, to groupings of pixels that are simply the result of the application of a universal rule? In a sense, this means that they have an existence independent of the bitmap description that the world is based upon. Here, we see the beginnings of an ontological shift that is simpler than the bitmap ontology, although not as complete. This really is quite a stupendous leap, and well worth exploring further. It is difficult to visualize the ontological shift (shift in what exists) using 1-d CA worlds, so to help illustrate this point further we next consider John Conway’s Game of Life.

Conway’s game of life

As with CA I am not going to include a full description of the Game of Life. The interested reader is strongly encouraged to refer to William Poundstone’s excellent text, The Recursive Universe [34] and to explore Paul Callahan’s [6] interactive website16 (though other examples are easily found). For the purposes herein, I will simply regurgitate Dennett’s [14: 37] brief introduction to the Game of Life, or Life.

Fig. 6. Objects in the Life universe.

“Life is played on a two-dimensional grid [as opposed to the 1-d examples already given], such as a checkerboard or a computer screen; it is not a game one plays to win. The grid divides space into square cells, and each cell is either ON or OFF at each moment. Each cell has eight neighbors: the four adjacent cells north, south, east, and west, and the four diagonals: northeast, southeast, southwest, and northwest. Time in the Life world is also discrete, not continuous; it advances in ticks, and the state of the world changes between each tick according to the following rule. Each cell, in order to determine what to do in the next instant, counts how many of its eight neighbors is ON at the present instant. If the answer is exactly two, the cell stays in its present state (ON or OFF) in the next instant. If the answer is exactly three, the cell is ON in the next instant whatever its current state. Under all other conditions the cell is OFF.”17

The entire physics of the Life world is captured in that single unexceptional law.
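The rule quoted from Dennett can be written down almost word for word. In the sketch that follows, the world is represented as the set of coordinates of its ON cells on an unbounded grid; that representation and the function name `life_step` are choices made here for illustration and are not taken from Dennett or Poundstone.

```python
from collections import Counter


def life_step(live):
    """Advance the Life world by one tick.
    `live` is a set of (row, column) pairs for the cells that are ON."""
    # Count, for every cell next to at least one ON cell, how many of its
    # eight neighbors are ON at the present instant.
    counts = Counter(
        (r + dr, c + dc)
        for (r, c) in live
        for dr in (-1, 0, 1)
        for dc in (-1, 0, 1)
        if (dr, dc) != (0, 0)
    )
    # Exactly three: ON whatever the current state.
    # Exactly two: the cell keeps its present state.
    # Anything else (including cells with no ON neighbors): OFF.
    return {
        cell
        for cell, n in counts.items()
        if n == 3 or (n == 2 and cell in live)
    }


# Three cells in a row flip between horizontal and vertical.
blinker = {(1, 0), (1, 1), (1, 2)}
print(sorted(life_step(blinker)))

# A two-by-two square is left unchanged by the rule.
block = {(0, 0), (0, 1), (1, 0), (1, 1)}
print(life_step(block) == block)
```

The whole ‘physics’ is the single return expression; everything else is bookkeeping for counting neighbors.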

What one finds in exploring the Life world is that some structures emerge that seem to be entities unto themselves. Despite Life being a simple recursive system, these entities seem to maintain themselves and move around the checkerboard in quasi-determinable ways, as well as ‘interact’ with other entities (see, for example, Poundstone, [34, Chapter 2]). Fig. 6 illustrates this to a very limited degree. Along the top of the figure from left to right are four such entities that have been named ‘loaf’, ‘boat’, ‘beehive’, and ‘ship’, respectively. There are many others such as ‘blinkers’, ‘period-2 oscillators’, ‘gliders’, etc. The main image in Fig. 6 is a snapshot in the history of a Life configuration known as ‘Puf Train’ [6]. Now we can get to the heart of the Life matter. Again, in Dennett’s [14: 39] words:

“... should we really say that there is real motion in the Life world, or only apparent motion? The flashing pixels on the computer screen are a paradigm case, after all, of what a psychologist would call apparent motion. Are there really gliders that move, or are there just patterns of cell state that move? And if we opt for the latter, should we say at least that these moving patterns are real?”

Whichever way one chooses to go with the answer to this question, one must bear in mind that Life researchers have discovered rules of interaction for these entities which implies that “their salience as real things is considerable, but not guaranteed” [14]. So even though we can be sure that these entities do not really exist, the fact that they can be treated as having some degree of existence is a staggering breakthrough as it allows us to work with a higher-level (JPEG), albeit approximate, ontology other than the BMP one. These entities, or parts, can be used to construct a high-level system that would be nigh on impossible to do if we were restricted to the BMP domain. In short, Life shows that we can legitimately invoke alternative higher-level quasi-ontologies that are reasonable approximations of the absolutely correct BMP ontology, in which only ON/OFF cells exist. Complex systems are therefore tractable, or compressible, to a degree.
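The shift from the BMP ontology to a higher-level one can be seen in a short sketch. At the cell level the code only ever switches cells ON and OFF; at the higher level we can state a one-line ‘rule of interaction’ for the glider, namely that it reappears displaced by one cell diagonally every four ticks. The set-of-cells representation, the function names and the particular glider orientation are assumptions made for this illustration.

```python
from collections import Counter


def life_step(live):
    """One tick of Life on an unbounded grid; `live` is the set of ON cells."""
    counts = Counter(
        (r + dr, c + dc)
        for (r, c) in live
        for dr in (-1, 0, 1)
        for dc in (-1, 0, 1)
        if (dr, dc) != (0, 0)
    )
    return {cell for cell, n in counts.items() if n == 3 or (n == 2 and cell in live)}


def shifted(cells, d_row, d_col):
    """The higher-level description: the same 'object', moved."""
    return {(r + d_row, c + d_col) for (r, c) in cells}


# A glider, written out in the BMP ontology as five ON cells.
glider = {(0, 1), (1, 2), (2, 0), (2, 1), (2, 2)}

# BMP-level prediction: apply the cell rule four times.
world = glider
for _ in range(4):
    world = life_step(world)

# Higher-level prediction: the glider has moved one cell down and one to the right.
print(world == shifted(glider, 1, 1))
```

The higher-level statement is far shorter than four applications of the cell rule, but it holds only while nothing else interferes with the glider, which is the sense in which such quasi-ontologies are approximate.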

Again in Dennett’s words [14: 39], what is so incredible with Life is “that there has been a distinct ontological shift as we move between levels; whereas at the physical [BMP] level there is no motion, and only individuals, cells, are defined by their fixed spatial location, at this design level we have the motion of persisting objects.” This observation will be central when we try to paint a picture of a CA Universe in the next section, and justify the legitimacy of not only science but other types of knowledge. Before we move on, I will summarize the discussion thus far presented concerning the ontological status of boundaries from a complex systems perspective by simply saying that there exists a continuum of quasi-boundary stabilities which both facilitates and hinders the development of knowledge of any kind. One other point to remember is that Life is perfectly deterministic; if the game is rerun with the same rules and configuration, exactly the same history will be produced; “[e]verything that happens in Life is predestined” [34: 25]. To be exact, what we should say is that Life is forward-deterministic but not backward-deterministic as “a [particular] configuration has only one future but (usually) many possible pasts” [34: 48].
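The asymmetry between one future and many pasts can be checked directly on a small scale. The sketch enumerates every configuration whose ON cells fit inside a 3-by-3 patch (512 in all), advances each by one tick, and groups the configurations by the future they produce. The restriction to a 3-by-3 patch and the names used are assumptions for illustration only.

```python
from collections import Counter, defaultdict
from itertools import product


def life_step(live):
    """One tick of Life on an unbounded grid; `live` is the set of ON cells."""
    counts = Counter(
        (r + dr, c + dc)
        for (r, c) in live
        for dr in (-1, 0, 1)
        for dc in (-1, 0, 1)
        if (dr, dc) != (0, 0)
    )
    return {cell for cell, n in counts.items() if n == 3 or (n == 2 and cell in live)}


patch = [(r, c) for r in range(3) for c in range(3)]

# Map each future onto the list of configurations that lead to it.
pasts = defaultdict(list)
for mask in product((0, 1), repeat=9):
    config = frozenset(cell for cell, on in zip(patch, mask) if on)
    pasts[frozenset(life_step(config))].append(config)

print("configurations examined:", sum(len(v) for v in pasts.values()))
print("distinct futures:       ", len(pasts))
print("pasts of the empty world:", len(pasts[frozenset()]))
```

Each configuration appears under exactly one future, yet a single future (the empty world, for example) is shared by many configurations, so the history cannot be run backwards uniquely.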

Exploring the universe as a complex system

Though the difficulties in fully understanding complex systems are considerable, they are not insurmountable, particularly if one can assume that well-defined isolated complex systems actually do exist and can be easily identified. However, I will argue in this section (following on from the previous argument) that no systems actually exist in a strict sense. This observation may seem to be rather trivial, but the methodological differences that arise if the notion of a complex system is problematized are considerable. In this section I will explore the implications of assuming that there is only one true system and that is the Universe itself—an indivisible whole. Though it is impossible to prove in any scientific sense, I begin by making the assumption that the Universe is a CA experiment, in that it comprises an unimaginably large number of non-linearly interacting elements. Why would we want to consider this to be the case? To realize Laplace’s dream of having absolutely Truthful knowledge then, “[t]he state of everything—everywhere—at every time—must be defined. The most economical way to specify such information is through a complexity-generating recursion of physical law” [34: 231] like in Life. According to the latest physical theories these interacting elements might be incredibly minute superstrings that oscillate in 11-dimensional ‘space’, where the oscillation frequency of a string corresponds to a particular fundamental particle, such as a top-quark for example. This view of the Universe is incredibly simple, yet it has the capacity (because of the recursive application of a simple non-linear rule) to account for everything we observe in our view of reality and a lot more besides. It is the capacity of non-linearity to create an infinitude of different structures (and sub-structures) and behaviors that lead to this possibility. Conway, the inventor of Life, “showed that the Life universe...is not fundamentally less rich than our own” [34: 24].

A cellular automata Universe and the status of scientific knowledge

Imagine, if you will, an 11-dimensional (a little hard to imagine, I know) CA model comprising a vast number of ‘superstring’ automatons whose evolution is determined by a single simple rule: the fundamental Law of Physics. Each step in the Universe’s evolution is simply the result of this rule being applied to each automaton. This view is impossible to prove of course (like other Theory of Everything (ToE) endeavors, it is more ironic science [22: 3], or pseudo-science, than science), but its explanatory powers are surprisingly impressive18. Though the CA perspective cannot be conclusively proved, an exploration of the consequences that follow from this picture is very revealing. These consequences bring us no closer to a proof of the model, but they do demonstrate the utility of this approach; its capacity to provide a common context for many different discourses is quite impressive indeed.

A deterministic Universe

The first observation is that such a Universe would be completely 100% deterministic:

“... in the Life world ... there is no noise, no uncertainty, no probability less than one. Moreover, it follows ... that nothing is hidden from view. There is no backstage; there are no hidden variables.” Dennett [14: 38].

The entire evolution of the Universe would be totally predetermined by the characteristics of the comprising superstrings and the one (non-linear) rule of interaction. This would please our scientific forefathers, who viewed the Universe as a perfectly tuned machine. For such a construction it is quite possible that there is not even any need for particular initial conditions; the initial conditions could be completely random and the consequent Universe would still be the same. (The initial conditions of the Universe have been a hot topic of discussion for some time. It is only recently that it has been suggested that maybe there are models of the Universe that do not require initial conditions in the traditional sense at all (Chown [8]; and Tegmark [48]); as with the 1-d CA experiments earlier, the initial configuration might be random with qualitatively the same consequences.) This suggested model of the Universe is based upon a radically realist ontology; what truly exists are ‘11-d superstrings’ (or whatever turns out to be the viable candidate, although scientists may never know), no more, no less. All other objects, entities, ‘boundaries’, whatever, are no more than different combinations of ‘cell states’ that manifest themselves as the ‘loaves’, ‘ships’ and ‘beehives’ of Life. In this Universe a fermion is a type of ‘boat’, say, having no absolute existence, but having a substantial realism, so substantial in fact as to often allow its absolute existence to be taken for granted. An atom is no more than a ‘fleet of ships’ in this Life Universe. Even we humans are not as we appear to ourselves. We are not sentient beings with free will and learning capacities. A human is just a collection of interacting ‘boats’, ‘ships’, ‘beehives’, ‘super-beehives’, i.e., a very intricate ‘cell state’ whose occurrence is inevitable in a CA Universe.

A CA Universe would allow other ontologies to be assumed without having to deal directly with the BMP ontology of an unimaginable number of interacting ‘superstrings’. It is of the utmost importance to acknowledge that such ontological shifts are imperfect; the complexity of a CA Universe is indeed tractable, but at a cost. But it is this very ability to profit from alternative quasi-ontologies that enables science to function at all. Without this characteristic, mathematics could not exist; mathematics deals with ‘loaves’ and ‘ships’, not with Life ‘cell states’. Indeed, it is this very point that even allows the existence of a being capable of making such ontological leaps in the first place.

Another fascinating outcome of assuming a CA Universe is that there is no adaptation in any absolute sense of the term. At the BMP level, the ultimate objective reality, ‘superstrings’ do not learn new tricks; they do not become ‘superduperstrings’ (unless it is through an ontological shift on our part). Adaptation is a feature of an ontology that scientists have chosen to take for granted; it is a way of usefully understanding the changes in the Life ‘cell states’ without having to deal directly with those real ‘cell states’. So when complexologists are heard speaking of complex adaptive systems (CASs), they are taking some enormous strides away from what absolutely exists. A great number of assumptions have to be made before one can even infer the (quasi-) existence of CASs. So even complexologists, who hail the CAS ontology as the best lens through which to view certain ‘parts’ of the Universe, are kidding themselves to some extent. Even the CAS ontology is a poor to reasonable JPEG approximation of the absolute BMP reality.

And what of causality? Causation as a necessary connection between two events in a CA Universe cannot be inferred from correlation or association in any real sense. Causality, like Life’s ‘boats’ and ‘ships’, is an emergent ‘cell state’ pattern that can only be recognized as such by making an ontological shift, away from the BMP view, that assumes its existence. Causality as experienced by us mere mortals is an abstraction rather than a real operating process. The psychotherapist Carl Jung wrote (with the cooperation of the eminent physicist Wolfgang Pauli) an interesting treatise on this exact point: what “if the connection between cause and effect turns out to be only statistically valid and only relatively true”? [25: 5]. Though, as Hume noted, “causation is a notion fundamental to human cognition, so fundamental that it is unlikely to ever be eradicated” (Wagner [51: 83]), and nor should it be. Whether causation is real or not, it has proven to be a very productive concept.

A theistic observer

In the CA view of the Universe there is no room for free will and choice; that would go against the whole notion of determinism. Consciousness does not exist as such. All the decisions, actions and utterances that we each make were predetermined from the moment of creation (assuming that the idea of a beginning, and therefore time, actually makes any sense in this context)19. Even the fact that I am sitting in my home office in Norwood, MA at 10:30 in the morning, composing this specific paragraph was inevitable (once the initial conditions had been selected20)21. This all sounds a little absurd, though there are devout religionists who would be quite prepared to believe it. But assuming that the Universe is deterministic, who or what would this apparent exquisite determinism be visible to? Cilliers [10: 4–5] has argued that no thing that exists within a complex system can have complete knowledge of that system. As mere mortals participating within the Universe, we would have to construct a model that represented all 10^93 [9], or whatever the colossal number is, of the superstrings that make up the Universe and step through the overall evolution. Now, given that we’re inside the Universe and only have limited resources available to us from within that same Universe, how could we possibly represent the entire Universe with only part of the Universe available to us? Again the simple answer is that we cannot22.

From this simple argument it is clear that, if the Universe is indeed deterministic, this determinism is (thankfully) beyond us; we will never conceive of an experiment that would persuade us otherwise about our own sentience. The Universe’s determinism could only possibly be visible to an external entity; a theistic (meaning outside) being. If you like, this is God. However, such a God would have to have sufficient resources available to model the entire created Universe. To It, the determinism of the Universe would be plainly apparent (assuming the Model ran faster than the Universe itself), just as the determinism of the CA experiments in previous sections is plainly apparent to a human observer. Furthermore, given that a model of more degrees of freedom, i.e., greater complexity, than the Universe itself would have a richer set of behaviors than the Universe, this model would have to be the same as the Universe; the Universe itself is its best model. And, assuming that intractability also restricts a theistic being, then It would have to run Its deterministic universal model before the Universe was created to ensure that it turned out as desired (because there would be no algorithmic shortcut to the future, unless It was happy to rely upon a scrappy JPEG version, which would give unreliable results)23. Of course, some would argue that such an entity would not be bound by such human limitations.

However, we are not totally blind to the Universe’s inherent determinism. The fact that ‘we’ even exist and that ‘we’ have constructed Laws (from the mathematical representation of ‘loaves’ and ‘boats’) that allow us to make quite accurate predictions, accurate enough to build technology, for example, shows that we do indeed obtain glimpses of the clockwork Universe.

Theism versus atheism

From within the Universe it is impossible to have an absolute representation of anything. There is only one true system; all other systems are temporary and contingent structures whose boundaries are, in a strict sense, illusory. In the sense that boundaries are hard resilient objects that demarcate the part from the whole, no boundaries actually exist (except those that define the Universal Cellular Automaton). Despite this no-boundary hypothesis, which naturally demands an unachievable radically holist approach to knowledge creation if one wants True knowledge, models that do indeed assume boundaries do have considerable practical use.

Though it is interesting that such a complex systems view does lead to differences in knowing dependent upon a theistic (without) and atheistic (within) position, it is not of much help to us as members (however unreal) of the Universe. However, it is interesting that such a vision of the Universe provides a common context that allows the Universe to be both deterministic and non-deterministic, depending upon the position of an observer. Such a vision has the capacity to allow for the co-existence of apparently opposing positions. However, though it may seem to provide little value when considering the Universe as a whole, what if we could approximate parts of the Universe that appear to be real systems in their own right as complex systems, or even CASs? Again, it is the ability to associate substantial realism with the various Life entities that facilitates (or even allows) this activity. Our own existence as such can only be realized by making a shift from The Universal Ontology of Life to an irrealist (albeit substantially real) ontology; human existence is an arbitrary paradigm rather than a given absolute fact.

The complex systems view differentiates between the knowledge we can obtain when we regard ourselves as outside a (particular) system (of ‘loaves’) and the knowledge we can obtain when we regard ourselves as a member of the system. This view demonstrates that the subject–object distinction often made does limit the knowledge we can have. How much can an outside consultant know about a particular organization and how valuable is his/her knowledge? What is more important, the opinions of members of society, or the view of politicians often seen as disengaged from society? How much can an earthbound science know about the Universe in which it is supported? We will not investigate this aspect of complexity much further, but it is interesting to note that complexity thinking does legitimize subjective knowledge as well as objective knowledge, albeit in an imperfect way. We need to ask ourselves: if science claims to extract real patterns (which the discussion on Life has shown not to be the case, since science considers ‘loaves’ and ‘blinkers’ that do not ultimately exist), to what extent are those patterns more real than the patterns we each extract from our surroundings in the process of sense-making? Are our personal opinions based upon patterns less real than those found in science? Why should science be allowed to claim that the objects it considers are more real than the objects we each ‘see’ in our daily lives? In what sense are the boundaries of an ‘electron’ more real than my own personal boundary that defines ‘friend’ given that neither is absolutely real? (Often, the ability to make accurate predictions is the only differentiating factor, which is quite unreasonable given the different nature of these entities.)

Boundary (or, pattern) distributions

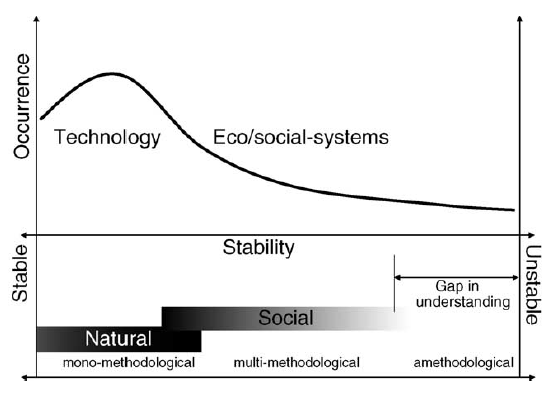

The basic conclusion that the complexity-based argument given thus far leads to is that there are no boundaries in the Universe except those that define its fundamental components (superstrings?). How, then, are we to derive knowledge of particular systems (particularly if no systems really exist)? As mentioned above, the situation is not as dire as it might immediately seem. There is no need to follow the radical holists and model the world, the Universe and everything (which we could not do even if we wanted to). In the field of complexity there is strong evidence that, though there may be no boundaries, there are resilient and relatively stable emergent structures, or patterns, that can be treated with a reasonable degree of accuracy as having limited existence. In fact, there is a distribution of boundary, or entity, stabilities. No evidence is given herein for what this distribution may actually be; it is simply argued that there is a distribution. Fig. 7 illustrates a possible stability distribution (which has no theoretical or empirical basis).

Fig. 7. A possible distribution of natural quasi-boundary (pattern) stabilities.

At one end of the stability spectrum there are boundaries/structures that are so persistent and stable that, for most intents and purposes, it can safely be assumed that they are in fact real and absolute. Boundaries that describe the objects of science-based technology exist toward this end of the spectrum. Such long-term stability allows a ‘community of enquirers’, e.g., the scientific community, to inter-subjectively converge on some agreed principles that might actually be tested through experiment. Under such conditions it is quite possible to develop quasi-objective knowledge, which for most intents and purposes (but not ultimately) is absolute. The existence of such persistent boundaries, or patterns, allows for something other than a radically holistic analysis—this explains why the scientific program has been in many ways so successful when it comes to technological matters—it has hit upon a very powerful quasi-ontology. In many circumstances reductionism (the assumption that ‘beehives’ actually do exist, and act as the ‘parts’ for more complex ‘wholes’) is a perfectly valid, though still approximate, route to understanding. In short, what is suggested here is that scientific study depends upon the assumption that natural boundaries are static in a sense, and that if one can ‘prove’ that the boundaries of interest are in fact stable and persistent, then scientific method is more than adequate.

It is exactly this stability, this apparent ‘movement’ of persistently stable entities (as is observed in Life), that can be attributed some substantial level of realism that allows us as modelers/scientists/observers to “proceed to predict—sketchily and riskily—the behavior of larger configurations or systems of configurations, without bothering to compute the physical [BMP] level” [14: 40]; an enormous computational saving indeed. It is exactly this substantial realism of levels, or quasi-entities, that supports the efficacy of the hierarchy of sciences without having to know everything there is to know about each ascending level away from the fundamental physical superstring reality (in an absolute sense).
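The computational saving described here can be sketched with Life’s ‘glider’, a five-cell pattern that reappears displaced by one cell diagonally every four generations. The sketch below compares a cell-level computation with a pattern-level prediction that treats the glider as a quasi-entity that simply moves. The names `life_step`, `cell_level` and `pattern_level`, and the chosen glider orientation, are assumptions made for illustration only.

```python
from collections import Counter


def life_step(live):
    """Apply Conway's rule once to a set of live (row, col) cells."""
    counts = Counter(
        (r + dr, c + dc)
        for (r, c) in live
        for dr in (-1, 0, 1)
        for dc in (-1, 0, 1)
        if (dr, dc) != (0, 0)
    )
    return {cell for cell, n in counts.items()
            if n == 3 or (n == 2 and cell in live)}


# One orientation of the glider, written as a set of live cells.
GLIDER = {(0, 1), (1, 2), (2, 0), (2, 1), (2, 2)}


def cell_level(live, generations):
    """'Physical' level: apply the rule to every cell, step by step."""
    for _ in range(generations):
        live = life_step(live)
    return live


def pattern_level(glider, generations):
    """Quasi-ontology: treat the glider as an object moving one cell
    diagonally per four generations (valid only for multiples of four,
    and only while nothing else interferes with it)."""
    shift = generations // 4
    return {(r + shift, c + shift) for (r, c) in glider}


# Both routes agree after 40 generations, but the second performs no
# rule applications at all.
print(cell_level(GLIDER, 40) == pattern_level(GLIDER, 40))
```

The pattern-level shortcut is sketchy and risky in exactly the sense quoted above: it fails as soon as the glider meets another structure, at which point only the cell-level computation remains reliable.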

At the other end of the stability spectrum we have essentially noise, in which the lifetime of apparent boundaries might be so fleeting as to render them unrecognizable as such and therefore unanalyzable. Under such circumstances attempts to develop knowledge are strongly determined by the whims of the individual, with observed boundaries being more a function of our thirst to make sense, rather than an actual feature of reality. To maintain a purely positivistic position, one would have to accept radical holism and consider the entire Universe—a practical absurdity and a theoretical impossibility, as has already been stated. This is the only method by which truly robust knowledge could possibly be derived.