Complexity science as order-creation science: New theory, new method

Bill McKelvey

UCLA Anderson School of Management, USA

Abstract

Traditional ‘normal’ science has long been defined by classical physics and most obviously carried over into social science by neoclassical economics. Especially because of the increasingly rapid change dynamics at the dawn of the 21st century, different kinds of foundational assumptions are needed for an effective scientific epistemology. Complexity science - really ‘order-creation science’ - is particularly relevant because it is founded on theories explicitly aimed at explaining order creation rather than accounting for classical physicists’ traditional concerns about explaining equilibrium. This article sets up the rapid change problem, and shows why evolutionary theory is not the best approach for explaining entrepreneurship and organizational change dynamics. New theories from order-creation science are briefly presented. The continuing centrality of models in scientific realist definitions of modern science is brought to center stage. Agent-based computational models are shown to be better than math models in playing the role of forcing theoretical elegance and continuing the essential experimental tradition of effective science.

Introduction

Isabelle Stengers (2004) reminds us that the founding idea of complexity science was Prigogine’s juxtapositioning of the 1st and 2nd Laws of Thermodynamics so as to explain the emergence of dissipative structures. Implicit in this was his questioning of the reversibility of time and the centrality of equilibrium in “normal” science (Prigogine & Stengers, 1984). There can be no greater foundational challenge to normal science, the origin of which was classical physics. Sandra Mitchell (2004) reminds us of the centrality of idealized, abstract models, one of the enduring legacies of logical positivism (McKelvey, 2002). Ironically, if mathematics is taken as the model-technology of choice, these two foundational statements can’t be joined. Why? Math as the core modeling method of modern science originated in Newton’s studies of orbital mechanics and was greatly reinforced by the Vienna Circle’s founding of logical positivism in 1907 (Suppe, 1977). Given that the 1st Law is about conservation of energy, and that classical physical dynamics is about the translation of matter from one form to another, math became the only means of rigorously accounting for the accuracy of the translation, and thereby the proving of theories about what causes what, given equilibrium. Simply put, math models can’t handle order creation. The methodological invention that does allow the joining of the two foregoing foundational statements is the agent-based1 computational model. Casti (1997) states that in fifty years’ time computational experiments will be seen as the primary contribution of the Santa Fe Institute.

The tendency in organization studies so far is to focus on explicating the term complexity, seemingly in every way possible, and to rush toward offering practical wisdom, again, seemingly in every way possible. Could it be that the science of it all is being ignored in the rush toward practical application? My intent is to emphasize the science part in two ways. First, calling ‘it’ complexity science is like calling thermodynamics ‘hot’ science. Complexity and hot are the outcomes at one end of the dynamic scale. More aptly, complexity science is order-creation science. This puts focus on the fundamental change in the nature of the dynamics involved - from equilibrium dynamics to order-creation dynamics. Second, there is the changing role of models. Math is good for equilibrium modeling. Agent-based computational models are essential for modeling order creation.

My objective is to explain why order-creation science offers significant new lessons for how scholars doing management and organizational studies can better understand the modern dynamics of their phenomena. I begin with a review of why ‘New Age’ economies and organizations in the digital information era call for organizational designs in which the collective intelligences of many employees may be brought to bear, quickly, on New Age organization problems and strategies. I then discuss why order-creation science offers better ways of understanding and researching emergent collective phenomena. Foremost among these new methods is the use of agent-based computational models. I also outline the epistemological reasons why models remain a cornerstone of effective science. A short overview of how one might use agent models in conducting one’s research on organizations and managerial processes follows.

Organizational dynamics in the 21st century

'New age' economics and increased external complexity

Over past centuries, economic life has been marked by three revolutions: agricultural, industrial, and service. The 21st century brings with it a fourth - the digital information age. Nowhere are the dynamics characterizing the knowledge era more vividly and succinctly portrayed than in a recent book edited by Halal and Taylor (1999), 21st Century Economics: Perspectives of Socioeconomics for a Changing World. Their conclusions (paraphrased) are far reaching (pp. 398-402):

- Economies of the 21st century will be dominated by globalization and integrated by sophisticated information networks;

- Increasingly deregulated economies will mirror the textbook ideal of perfect competition (and marginal profits);

- Creative destruction from the transition will create social disorder worldwide;

- Nearly autonomous entrepreneurial cellular networks and fundamentally different ways of corporate governance will replace top-down hierarchical control.

Part Two of their book, titled “Emerging Models of the Firm,” focuses on the magnitude of the problem managers face as they cope with increasing competitiveness world-wide while at the same time trying to shift from top-down control to the management of complex new organizational forms using radically new approaches of managerial leadership for purposes of knowledge creation and the creation of intra-organizational market dynamics. As if this weren’t difficult enough, Larry Prusak (1996: 6) points to speed as the driving element2:

“The only thing that gives an organization a competitive edge - the only thing that is sustainable - is what it knows, how it uses what it knows, and how fast it can know something new!”

Halal and Taylor say life will be different on the other side of the millennium - the New Age:

“Communism has collapsed, new corporate structures are emerging constantly, government is being ‘reinvented’, entirely new industries are being born, and the world is unifying into a global market governed by the imperatives of knowledge” (1999: xvii)

The four conclusions by Halal and Taylor, mentioned above, predict economic revolution over the next two decades. Given this, what should organization theorists and managers worry about? Significant clues come from Part II of their book, boiled down in their Table 1, which focuses on “Emerging Models of the Firm.” Abstracting from this Table, what do the various authors in Part II see going on?

- New technology, dynamic markets, accelerating change, shorter product life cycles, digital information revolution, decentralization, globalization, environmental decay;

- Corporate restructuring focusing on responding to hypergrowth, building cellular networks, product-cycle management, use of internal markets;

- Disequilibria rather than optimization, with efficiency and enhanced competitiveness stemming from employee empowerment.

The authors in Part II emphasize decentralization, cellular networks, internal markets, and employee empowerment as defining elements of New Age economies - all in response to disequilibria and new economic trends. Key questions we face are: How should we research organizational and/or managerial dynamics? How should managers manage? (Drucker, 1999)

Also from Halal and Taylor (1999) we learn that New Age trends call for dramatically new organizational strategies and designs. Strategy scholars have seen this coming. Recent writers about competitive strategy and sustained rent generation parallel Prusak’s emphasis on how fast a firm can develop new knowledge. Competitive advantage is seen to stem from keeping pace with high-velocity environments (Eisenhardt, 1989), seeing industry trends (Hamel & Prahalad, 1994) and value migration (Slywotzky, 1996), and staying ahead of the efficiency curve (Porter, 1996). Because of increases in the need for dynamic capabilities, faster learning, and knowledge creation, there is an increased level of causal ambiguity (Lippman & Rumelt, 1982; Mosakowski, 1997). Learning and innovation are not only more essential (Ambrose, 1995), but also more difficult (Auerswald, et al., 1996; ogilvie, 1998). Dynamic ill-structured environments and learning opportunities become the basis of competitive advantage if firms can be early in their industry to unravel the evolving conditions (Stacey, 1995). Drawing on Weick (1985), Udwadia (1990), and Anthony, et al. (1993), ogilvie (1998: 12) argues that strategic advantage lies in developing new useful knowledge from the continuous stream of “unstructured, diverse, random, and contradictory data” swirling around firms.

Matching internal to external complexity via bottom-up emergence

The foregoing trends appear on a CEO’s horizon as uncertainties. Uncertainty in organizational environments is a function of (1) degrees of freedom (generally taken as the most basic definition of complexity - Gell-Mann, 1994); (2) the possible nonlinearity of each variable comprising each degree of freedom; and (3) the possibility that each may change. These three environmental ingredients give rise to seemingly countless strategic options. Long ago, and seemingly in simpler times, Ashby characterized environments and possible adaptive options using the term “variety.” His classic Law of Requisite Variety (1956: 207) holds that:

“only variety can destroy variety”

What does Ashby mean by “destroy”? His insight was that a system has to have internal variety, also defined as degrees of freedom, that matches its external variety so that it can self-organize to deal with and thereby “destroy” or overcome the negative effects on adaptation of imposing environmental constraints and complexity. In biology, this is to say that a species has to have enough internal genetic variance to successfully adapt to whatever resource and competitor tensions are imposed by its environment. I update and extend Ashby’s Law as follows:

- Only internal variety can destroy external variety - updates to:

- Only internal degrees of freedom can destroy external degrees of freedom - updates to:

- Only internal complexity can destroy external complexity - updates to:

- Only interactive heterogeneous agents can destroy external complexity - updates to:

- Only distributed intelligence can destroy external complexity.
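The variety-matching logic behind this chain can be sketched with a toy regulation game in the spirit of Ashby's treatment. Everything specific here is an assumption made for illustration and is not taken from Ashby or from this article: disturbances and responses are small integers, the outcome is their difference, and the function name `min_outcome_variety` is hypothetical. An exhaustive search over every possible response strategy suggests that the smallest achievable number of distinct outcomes shrinks only as the number of available responses grows.

```python
from itertools import product


def min_outcome_variety(n_disturbances, n_responses):
    """Smallest number of distinct outcomes any regulator strategy can reach.

    Toy assumption: outcome = disturbance - response, so for a fixed response
    two different disturbances always lead to two different outcomes.
    """
    best = n_disturbances
    # A strategy assigns one response to every possible disturbance.
    for strategy in product(range(n_responses), repeat=n_disturbances):
        outcomes = {d - r for d, r in enumerate(strategy)}
        best = min(best, len(outcomes))
    return best


if __name__ == "__main__":
    # Six external disturbances, increasing internal variety of responses.
    for n_responses in (1, 2, 3, 6):
        print(n_responses, min_outcome_variety(6, n_responses))
```

In this toy setting the minimum equals the number of disturbances divided by the number of responses, rounded up, so a regulator with as many responses as there are disturbances can hold the outcome to a single value.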

But how is external complexity destroyed and internal complexity created? Thompson (1967), reflecting the era of contingency theory, took the view that variety was reduced from the top. Thus, at each level starting with the CEO, some variety is taken out of the system so that at the bottom, workers do their jobs in a machine-like setting of total certainty. This is a top-down approach to uncertainty reduction. Nearly a quarter century later, Mélèse (1991) takes the opposite view, arguing that variety reduction happens best from the bottom up. Simon (1999) observes that it is not just variety that is out there to be destroyed but also high change-rate effects. Lower level units, therefore, must absorb variety, leaving upper managers with less frequent, less noisy, less complex, but weightier decisions. By way of expanding on how organizations might go about developing internal complexity, I briefly describe four approaches to the bottom-up variety destruction problem.

Knowledge creation3. Knowledge theory explores strategies for effectively utilizing worker intelligence (Grant, 1996). According to current literature, the wellsprings of knowledge (Leonard-Barton, 1995) derive from the connected intelligences of individuals (Nonaka & Takeuchi, 1995; Nonaka & Nishiguchi, 2001; Stacey, 2001). As firms increasingly depend upon individual intelligences in their production enterprise (Burton-Jones, 1999; Davenport & Prusak, 1998; Gryskiewicz, 1999), they must develop strategies for acquiring, interpreting, distributing, and storing the information that individuals possess (Huber, 1996). Nonaka and Nishiguchi (2001) describe this knowledge management process as the expansion of “individual knowledge” into higher-level, “organizational knowledge.”

In social systems, the learning dynamics described above occur simultaneously and interactively - it is a connectionist social capital development problem (Burt, 1992). Transactive memory (Moreland & Myaskovsky, 2000; Wegner, 1987) and situated learning studies (Glynn, et al., 1994; Lave & Wenger, 1991), for example, all show learning as a nonlinear, interactive, and coevolving (Lewin & Volberda, 1999) process. Even individual cognitive processes are seen as socially distributed (Taylor, 1999), with employees in social networks influencing and learning from each other (Argote, 1999). Learning, thus, is a recursive connectionist process rather than a linear agent-independence one.

Recent work now stresses the importance of the “collective mind” (Lave & Wenger, 1991; Weick & Roberts, 1993). According to Glynn, et al. (1994), learning is “best modeled in terms of the organizational connections that constitute a learning network” (p. 56). Wenger (1998) focuses on individual learning in “communities of practice,” observing that individual learning is inseparable from collective learning. Lant and Phelps (1999: 233) hold that learning should be understood primarily as evolving within “an interactive context... embedded in the context and the process of organizing.” McKelvey (2001a, 2005) refers to recursive vertical and horizontal individual / group learning processes in organizations as “distributed intelligence.”

Distributed Intelligence. Henry Ford’s quote represents thinking in the Industrial Age:

“Why is it that whenever I ask for a pair of hands, a brain comes attached?” (Ford)4

“My work is in a building that houses three thousand people who are essentially the individual ‘particles’ of the ‘brain’ of an organization that consists of sixty thousand people worldwide.” (Zohar, 1997: xv)

Zohar (1997) starts her book by quoting the director of retailing giant Marks & Spencer. Each particle (employee) has some intellectual capability - Becker’s (1975) human capital. And some of them talk to each other - Burt’s (1992) social capital. Together they comprise distributed intelligence. Human capital is a property of individual employees. Taken to the extreme, even geniuses offer a firm only minimal adaptive capability if they are isolated from everyone else. A firm’s core competencies, dynamic capabilities, and knowledge requisite for competitive advantage increasingly appear as networks of human capital holders. These knowledge networks also increasingly appear throughout firms rather than being narrowly confined to upper management (Norling, 1996). Employees are now responsible for adaptive capability rather than just being bodies to carry out orders. Here is where networks become critical. Much of the effectiveness and economic value of human capital held by individuals has been shown to be subject to the nature of the social networks in which the human agents are embedded (Granovetter, 1973, 1985, 1992).

Intelligence in brains rests entirely on the production of emergent networks among neurons - “intelligence is the network” (Fuster, 1995: 11). Neurons behave as simple “threshold gates” that have one behavioral option - fire or not fire (p. 29). As intelligence increases, it is represented in the brain as emergent connections (synaptic links) among neurons. Human intelligence is ‘distributed’ across really dumb agents! In computer parallel processing systems, computers play the role of neurons. These systems are more ‘node-based’ than ‘network-based’. Artificial intelligence resides in the intelligence capability of the computers as agents, with emergent network-based intelligence still at a very primitive stage (Garzon, 1995). My focus on distributed intelligence places most of the emphasis on the emergence of constructive networks. The lesson from brains and computers is that organizational intelligence or learning capability is best seen as ‘distributed’ and that increasing it depends on fostering network development along with increasing agents’ human capital.
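The point that capability can reside in connections among very simple agents can be illustrated with a minimal sketch of threshold gates. The weights, thresholds, and function names below are assumptions chosen for illustration, not Fuster's model of neurons. Each gate can only fire or not fire, and no single gate of this kind can compute exclusive-or, yet three of them wired together can.

```python
def threshold_gate(inputs, weights, threshold):
    """Fire (1) if the weighted sum of inputs reaches the threshold, else 0."""
    total = sum(w * x for w, x in zip(weights, inputs))
    return 1 if total >= threshold else 0


def xor_network(a, b):
    """Three connected gates compute exclusive-or of two binary inputs."""
    either = threshold_gate((a, b), (1, 1), 1)       # fires if a or b fires
    not_both = threshold_gate((a, b), (-1, -1), -1)  # fires unless both fire
    # The output gate fires only when both intermediate gates fire.
    return threshold_gate((either, not_both), (1, 1), 2)


if __name__ == "__main__":
    for a in (0, 1):
        for b in (0, 1):
            print(a, b, xor_network(a, b))
```

The gates themselves never change; what the network can do depends on how they are linked, which is the sense in which the capability is distributed.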

Cellular networks. Miles, et al. (1999) offer a second approach to variety destruction. They refer to the 21st century as the era of innovation. They see self-organizing employee learning networks as essential to effective performance in the knowledge economy. It takes continuously evolving networks to keep up with rapidly evolving elements of the knowledge economy, particularly technology, market tastes, and industry competitors. Miles, et al. (1999) see entrepreneurship, self-organization, and member ownership as the essential ingredients of effective cellular networks. Cells consist of self-managing teams of employees - the heterogeneous agents governed by what complexity scientists term ‘simple rules’. The cells have “...an entrepreneurial responsibility to the larger organization” (p. 163). Miles, et al. (1999) say that if the cells are strategic business units they may be set up as profit centers. They emphasize the instability of the cells, noting that each cell must reorganize constantly. It needs appropriate governance skills to do this.

For Miles, et al. (1999), the CEO’s approach to managing the cells is based on viewing the cells as entrepreneurial firms. They offer two examples. In one firm (Technical and Computer Graphics), the cells / firms are joint venture partners within the enveloping organization. In the other (The Acer Group), the cells / firms are jointly owned via an internal stock market. Details remain vague as to how the cells maintain their autonomy in the face of top-down control, or how the CEOs assure shareholder value from the cells. Miles, et al. (1999) talk about self-organization, learning networks, and emergent cells, but, again, how all this works is vague.

Managing appropriate network autonomy. In a recent paper Thomas, et al. (2005) remind us that Roethlisberger and Dickson discovered the classic control-autonomy duality (formal vs. informal organization) back in 1939. By now, several dualities have been observed: control vs. autonomy, innovation, variety, and self-organization, and change rate, among others. Thomas, et al.’s (2005) review shows that the ‘English’ literature tends to persist in looking for bipolar duality solutions in which the opposing forces are balanced or adjusted to achieve an “optimum mix” (March, 1999: 5). In contrast, they observe that the ‘French’ literature holds that control and autonomy - and other dualities - are “entangled.” Even though control might dominate (the “englobing” force), for example, circumstances are recognized when autonomy can dominate (“inversion”). Further, the French see the rate of “inversion / reversion” between which pole of the duality dominates as unstable. The tangled poles - really forces - of dualities have to be appropriately “managed” if a CEO wishes to create and maintain the “combination of independence and interdependence” characterizing Miles, et al.’s “cellular network” design. Some 60 years of organizational research shows this is much easier said than done!

Based on a twelve-year analysis of a global cosmetics firm, Thomas, et al. (2005) conclude that:

- Any attempt to focus only on the autonomy end of a duality likely will fail.

- Effective “leading” of a cellular network requires setting in motion the dynamic inversion / reversion of control and autonomy, such that they are “entangled” as opposed to “balanced” or “optimized” (March, 1991, 1999). Further, they evolve in their interactive dynamics over time.

- The ‘rate’ at which the bipoles irregularly oscillate is critical. The zero-oscillation periods, whether autonomy or control dominated, did not resolve the overproduction and no-profit situations.

- The control-pole dominating (englobing), but with frequent reversions to autonomy-dominance, appears as the most successful organizational form, since it is both stable and produces profits.

In the foregoing section I have built from Ashby’s classic Law of Requisite Variety toward the idea that adaptive capability in human organizations, under conditions of New Age external complexity, stems from distributed intelligence built up and held collectively by heterogeneous agents (employees). It is not just the one brain at the top that counts - it is lots of connected brains. This points to the critical importance of bottom-up emergent dynamics underlying the creation of learning and new knowledge, and distributed intelligence in organizations and cellular networks. Given this, I now turn to the question: Where do we draw lessons for better understanding methods for creating newly emerging structures? The best place right now is from complexity science - which is really the science of new order creation.

New theory: Order-creation (complexity) science

Theoretical background

All of the foregoing calls for bottom-up emergence call for a science of new order creation (McKelvey, 2004b). Much of the scientific method in management and organizations and in business schools, especially strategy studies, is dominated by the epistemology of economics (e.g., Besanko, et al., 2000). In fact, social science in general is dominated by economics. Unfortunately, economics is notorious for drawing its epistemology from classical physics and the latter’s focus on equilibrium dynamics and the mathematical accounting of physical matter transformations governed by the 1st Law of Thermodynamics (Mirowski, 1989, 1994; Ormerod, 1994, 1998; Arthur, et al., 1997; Colander, 2000). How to get from equilibrium-based to order-creation science?

Nelson and Winter (1982) look to Darwinian evolutionary theory for a dynamic perspective useful for explaining the origin of order in economic systems; so, too, does Aldrich (1979, 1999). Leading writers about biology, such as Salthe (1993), Rosenberg (1994), Depew (1998), Weber (1998), and Kauffman (2000), now argue that Darwinian theory is, itself, equilibrium bound and not adequate for explaining the origin of order. Underlying this change in perspective is a shift to the study of how heterogeneous bio-agents create order in the context of geological and atmospheric dynamics (McKelvey, 2004a).

Campbell brought Darwinian selectionist theory into social science (Campbell, 1965; McKelvey & Baum, 1999). Nelson and Winter (1982) offer the most comprehensive treatment in economics; Aldrich (1979) and McKelvey (1982) do so in organization studies. The essentials are: (1) Genes replicate with error; (2) Variants are differentially selected, altering gene frequencies in populations; (3) Populations have differential survival rates, given existing niches; (4) Coevolution of niche emergence and genetic variance; and (5) Struggle for existence. Economic Orthodoxy develops the mathematics of thermodynamics to study the resolution of supply / demand imbalances within a broader equilibrium context. It also takes a static, instantaneous conception of maximization and equilibrium. Nelson and Winter introduce Darwinian selection as a dynamic process over time, substituting routines for genes, search for mutation, and selection via economic competition.
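A minimal sketch of differential selection alone may help show where a pure selection process ends up. It is not Nelson and Winter's model: the fixed fitness values, the absence of any search or new variation, and the function name `select` are all simplifying assumptions made here for illustration. Each variant's population share is rescaled every generation in proportion to its fitness relative to the population average.

```python
def select(shares, fitness, generations):
    """Discrete replicator update: shares grow in proportion to relative fitness."""
    for _ in range(generations):
        mean_fitness = sum(s * f for s, f in zip(shares, fitness))
        shares = [s * f / mean_fitness for s, f in zip(shares, fitness)]
    return shares


if __name__ == "__main__":
    # Three hypothetical routines with equal starting shares.
    start = [1 / 3, 1 / 3, 1 / 3]
    fitness = [1.0, 1.1, 1.3]
    for generations in (0, 10, 50, 200):
        print(generations, [round(s, 4) for s in select(start, fitness, generations)])
```

With nothing generating new variants, the shares settle on the fittest routine and then stop changing, which is one simple way to see selection on its own as a process that runs toward an equilibrium.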

Rosenberg (1994) observes that Nelson and Winter’s book fails because Orthodoxy still holds to energy conservation mathematics (the 1st Law of Thermodynamics), the prediction advantages of thermodynamic equilibrium, and the latter framework’s roots in the axioms of Newton’s orbital mechanics, as Mirowski (1989) discusses at considerable length. Hinterberger (1994) critiques Orthodoxy’s reliance on the equilibrium assumption from a different perspective. In his view, a closer look at both competitive contexts and socioeconomic actors uncovers four forces working to disallow the equilibrium assumption:

- Rapid changes in the competitive context of firms do not allow the kinds of extended equilibria seen in biology and classical physics;

- There is more and more evidence that the future is best characterized by “disorder, instability, diversity, disequilibrium, and nonlinearity” (p. 37);

- Firms are likely to experience changing basins of attraction - that is, the effects of different equilibria;

- Agents coevolve to create higher level structures that become the selection contexts for subsequent agent behaviors.

Hinterberger’s critique comes from the perspective of complexity science. Also from this view, Holland (1988: 117-124) and Arthur, et al. (1997: 3-4) note that the following characteristics of economies counter the equilibrium assumption essential to predictive mathematics:

- Dispersed interaction: dispersed, possibly heterogeneous, agents active in parallel;

- No global controller or cause: coevolution of agent interactions;

- Many levels of organization: agents at lower levels create contexts at higher levels;

- Continual adaptation: agents revise their adaptive behavior continually;

- Perpetual novelty: by changing in ways that allow them to depend on new resources, agents coevolve with resource changes to occupy new habitats; and

- Out-of-equilibrium dynamics: economies operate ‘far from equilibrium’, meaning that economies are induced by the pressure of trade imbalances, individual-to-individual, firm-to-firm, country-to-country, etc.
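Several of the characteristics listed above (dispersed interaction, no global controller, continual local adaptation) can be seen in even a very small agent-based sketch. The model below is an assumption made for illustration and is not drawn from Holland or Arthur, et al.: agents sit on a ring, hold one of two states, and repeatedly copy a randomly chosen neighbor. The names `run_ring` and `count_boundaries` are hypothetical, and all agents follow the same rule, so agent heterogeneity is deliberately left out.

```python
import random


def count_boundaries(states):
    """Number of adjacent pairs on the ring whose states differ."""
    n = len(states)
    return sum(states[i] != states[(i + 1) % n] for i in range(n))


def run_ring(n_agents=60, steps=5000, seed=0):
    """Agents on a ring repeatedly copy the state of one random neighbor."""
    rng = random.Random(seed)
    states = [rng.randint(0, 1) for _ in range(n_agents)]
    before = count_boundaries(states)
    for _ in range(steps):
        i = rng.randrange(n_agents)               # one agent acts at a time
        j = (i + rng.choice((-1, 1))) % n_agents  # it sees only a neighbor
        states[i] = states[j]
    return before, count_boundaries(states)


if __name__ == "__main__":
    before, after = run_ring()
    print("domain boundaries before:", before, "after:", after)
```

No agent sees the whole ring, yet with two states the number of boundaries between unlike neighbors can only stay the same or fall, so larger like-minded domains form from purely local interaction.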

After reviewing all the chapters in their anthology, The Economy as an Evolving Complex System, most of which rely on mathematical modeling, the editors ask, “in what way do equilibrium calculations provide insight into emergence?” (Arthur, et al., 1997: 12) The answer is, of course, they don’t. What is missing? Holland’s elements of complex adaptive systems are what are missing: agents, nonlinearities, hierarchy, coevolution, far-from-equilibrium, and self-organization.

This becomes evident once we use research methods allowing a fast-motion view of socioeconomic phenomena. The fast-paced technology and market changes in the modern knowledge economy - that drive knowledge creation and entrepreneurship - suggest such an analytical time shift for socioeconomic research methods is long overdue. The methods of economics are based on the methods of physics, which in turn are based on very slow motion new order-creation events, i.e., planetary orbits and atomic processes that have remained essentially unchanged for billions of years.

Bar-Yam (1997) divides degrees of freedom into fast, slow, and dynamic time scales. On a human time scale, applications of thermodynamics to the phenomena of classical physics and economics assume that slow processes are fixed and fast processes are in equilibrium, leaving thermodynamic processes as dynamic. Bar-Yam says, “Slow processes establish the [broader] framework in which thermodynamics can be applied” (1997: 90). Now, suppose we speed up slow motion physical processes so that they appear dynamic at the human time scale - say to a rate of roughly one year for every three seconds. Then about a billion years go by per century. It is like looking at a 3.8 billion year movie in fast-motion. At this speed we see the dynamic effects geological changes have on biological order - the processes of Darwinian evolution go by so fast they appear in equilibrium (elaborated in McKelvey, 2004a)!
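The fast-motion arithmetic can be checked in a few lines. The only inputs are the playback rate stated above (three seconds of viewing per simulated year) and the 3.8 billion year span; the variable names and the 365.25-day year are assumptions introduced here.

```python
SECONDS_PER_YEAR = 365.25 * 24 * 60 * 60  # assumed average calendar year
SECONDS_PER_SIMULATED_YEAR = 3            # playback rate given in the text
MOVIE_LENGTH_YEARS = 3.8e9                # span of the "movie" in the text

# Simulated years that pass during one real century of viewing.
simulated_years_per_century = 100 * SECONDS_PER_YEAR / SECONDS_PER_SIMULATED_YEAR

# Real centuries needed to watch the whole movie at that rate.
centuries_for_full_movie = MOVIE_LENGTH_YEARS / simulated_years_per_century

if __name__ == "__main__":
    print(f"{simulated_years_per_century:.3e} simulated years per century")
    print(f"{centuries_for_full_movie:.2f} centuries to watch the full movie")
```

At this rate a little over a billion simulated years pass per century of viewing, so the full movie would run for roughly three and a half centuries.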

If the classical physics, equilibrium-influenced methods of socioeconomic research are viewed through the lens of fast-motion science, evolutionary analysis shifts into Bar-Yam’s fast motion degrees of freedom. Thus, changes attributed to selection “dynamics” slip into equilibrium. By this logic, since evolutionary analysis is equilibrium-bound, it is ill suited for research focusing on far-from-equilibrium change. Following Van de Vijver, et al. (1998), dynamic analysis, therefore, must focus on agents’ self-organization rather than Darwinian selection.

The 1st Law of Thermodynamics has been the defining dynamic of science - but it focuses on order translation, not order-creation. Elsewhere, I review the complexity scientists’ search for the 0th law of thermodynamics, focusing on the root question in complexity science: What causes order before 1st Law equilibria take hold? (McKelvey, 2004a). How and when does order creation occur? Post-equilibrium science studies only time-reversible, post 1st Law energy translations - how, why, and at what rate energy translates from one kind of order to another (Prigogine, 1955, 1997). It invariably assumes equilibrium. Pre-equilibrium science focuses on the order-creation characteristics of complex adaptive systems. Knowledge Era research needs to be based on pre-equilibrium science!

Two implications follow from the foregoing review: (1) If not equilibrium-based science, we need to find another scientific approach that focuses on order creation instead of equilibrium. This is what complexity science does; and (2) We also need a different kind of modeling approach. I will point out later just how essential formal modeling is to good science. It also turns out that the American School of complexity science has developed a New Age modeling approach - agent-based computational models. But first, new theory.

Schools of complexity science: Two new theory bases

The complexity science view of the origin of order in biology is that self-organization - pre 1st Law processes - explains more order in the biosphere than Darwinian selection (Kauffman, 1993, 2000; Salthe, 1993; the many authors in Van de Vijver, et al., 1998). Two independently conceived engines of order creation are apparent in complexity science. I review them briefly below and conclude with a call for their integration.

The European group consists of Prigogine (1955, 1997), Haken (1977/1983), Cramer (1993), Mainzer (1994/2004), among others. The American group consists largely of those associated with the Santa Fe Institute. While one could gloss over the differences, I think it is worth not doing so5. For the Europeans, it is clear that phase transitions, especially at the 1st critical value, are fundamental. Phase transitions are significant events that occur at the 1st critical value of R, the Reynolds number (from fluid flow dynamics, Lagerstrom, 1996). Phase transitions are, thus, dramatic events, far removed from the instigation events the Americans focus on, which are: (1) the almost meaningless random “butterfly” effects that set off “self-organized criticality” and complexity cascades (Gleick, 1987; Bak, 1996; Brunk, 2000), and (2) the kinds of events or ‘things’ that initiate positive feedback mutual causal processes - what Holland (1995) calls “tags.” Though their differences are significant, both are essential to social science. To make the differences really obvious, I boil them down to bare essentials6.

European school. The Europeans emphasize the following key elements:

- Physical phenomena;

- Phase transitions resulting from external “force-based” (energy-based) instigation effects;

- Independent data points - atoms, molecules, etc.;

- Math intensive;

- The region of emergent complexity defined by the 1st and 2nd critical values.

They typically begin with Bénard cells. In a Bénard process (1901), ‘critical values’ in the energy differential (measured as temperature, ΔT) between warmer and cooler surfaces of the container affect the velocity, R, of the air flow, which correlates with ΔT. Suppose the surfaces of the container represent the hot surface of the earth and the cold upper atmosphere. The critical values divide the velocity of air flow in the container into three kinds:

- Below the 1st critical value (the Rayleigh number), heat transfer occurs via conduction - gas molecules transfer energy by vibrating more vigorously against each other while remaining essentially in the same place;

- Between the 1st and 2nd critical values, heat transfer occurs via a bulk movement of air in which the gas molecules move between the surfaces in a circulatory pattern - the emergent Bénard cells. We encounter these in aircraft as up- and downdrafts; and

- Above the 2nd critical value a transition to chaotically moving gas molecules is observed.

| Newtonian complexity exists when the amount of information necessary to describe the system is less complex than the system itself. Thus a rule, such as F = ma = m(d²s/dt²), is much simpler in information terms than trying to describe the myriad states, velocities, and acceleration rates pursuant to understanding the force of a falling object. “Systems exhibiting subcritical [Newtonian] complexity are strictly deterministic and allow for exact prediction” (Cramer, 1993: 213). They are also “reversible” (allowing retrodiction as well as prediction, thus making the “arrow of time” irrelevant; Eddington, 1930; Prigogine & Stengers, 1984). |

| At the opposite extreme is stochastic complexity where the description of a system is as complex as the system itself - the minimum number of information bits necessary to describe the states is equal to the complexity of the system. Cramer lumps chaotic and stochastic systems into this category, although deterministic chaos is recognized as fundamentally different from stochastic complexity (Morrison, 1991), since the former is ‘simple rule’ driven, and stochastic systems are random, though varying in their stochasticity. Thus, three kinds of stochastic complexity are recognized: purely random, probabilistic, and deterministic chaos. For this essay I narrow stochastic complexity to deterministic chaos, at the risk of oversimplification. |

| In between, Cramer puts emergent complexity. The defining aspect of this category is the possibility of emergent simple deterministic structures fitting Newtonian complexity criteria, even though the underlying phenomena remain in the stochastically complex category. It is here that natural forces ease the investigator’s problem by offering intervening objects as ‘simplicity targets’ the behavior of which lends itself to simple rule explanation. Cramer (1993: 215-217) has a long table categorizing all kinds of phenomena according to his scheme. |

Table 1 Definitions of Kinds of Complexity by Cramer (1993). For mnemonic purposes I use ‘Newtonian’ instead of Cramer’s ‘subcritical’, ‘stochastic’ instead of ‘fundamental’, and ‘emergent’ instead of ‘critical’ complexity.

Since Bénard (1901), fluid dynamicists (Lagerstrom, 1996) have focused on the 1st critical value, Rc1 - the Rayleigh number - that separates laminar from turbulent flows. Below the 1st critical value, viscous damping dominates so self-organized emergent (new) order does not occur; above the Rayleigh number inertial fluid motion dynamics occur (Wolfram, 2002: 996). Ashby, in his book, Design for a Brain (1960), described functions that, after a certain critical value is reached, jump into a new family of differential equations, or as Prigogine would put it, jump from one family of “Newtonian” linear differential equations describing a dissipative structure to another family. Lorenz (1963), followed by complexity scientists, added a second critical value, Rc2. This one separates the region of emergent complexity from deterministic chaos - the so-called “edge of chaos.” Together, the 1st and 2nd critical values define three kinds of complexity (Cramer, 1993; see Table 1):

Newtonian → |Rc1| → Emergent → |Rc2| → Chaotic
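The three-way partition above can be written as a small classification rule. The following Python sketch is illustrative only: the numeric critical values are hypothetical, and the treatment of the exact boundary points (a value equal to Rc1 or Rc2 is counted as emergent) is an assumption, since the text speaks only of "below," "between," and "above."

```python
from enum import Enum


class Regime(Enum):
    NEWTONIAN = "Newtonian"
    EMERGENT = "Emergent"
    CHAOTIC = "Chaotic"


def classify_regime(r: float, rc1: float, rc2: float) -> Regime:
    """Place a control-parameter value r relative to the two critical values."""
    if rc1 >= rc2:
        raise ValueError("rc1 must be smaller than rc2")
    if r < rc1:
        return Regime.NEWTONIAN  # below the 1st critical value
    if r <= rc2:
        return Regime.EMERGENT   # between the two critical values (boundaries included by assumption)
    return Regime.CHAOTIC        # above the 2nd critical value


# Hypothetical critical values, chosen only for illustration.
RC1, RC2 = 10.0, 25.0
for r in (5.0, 15.0, 40.0):
    print(r, classify_regime(r, RC1, RC2).value)
```

The only point of the sketch is that the kind of complexity is read off from where the control parameter sits relative to Rc1 and Rc2, not from the underlying agents themselves.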

Elsewhere, I have reviewed a number of theories about causes of emergent order in physics and biology, some of which have been extended into the econosphere (McKelvey, 2001c, 2004a). Kelso7, Ding and Schöner (1992) offer the best synthesis of the European school:

“Control parameters, Ri, externally influenced, create R > Rc1 with the result that a phase transition (instability) approaches, degrees of freedom are enslaved, and order parameters appear, resulting in similar patterns of order emerging even though underlying generative mechanisms show high variance.”

Equilibrium thinking and the 1st Law are endemic in evolutionary theory applications to economics and organization science. Equilibrium thinking, central tendencies, and the use of energy dynamics in independent variables to predict outcome variables are also endemic to organization science empirical methods, whether regression or econometric analyses. However, there now is a shift from the homogeneous agents of physics and mathematics to heterogeneous, self-organizing agents. As Durlauf (1997: 33) says, “A key import of the rise of new classical economics has been to change the primitive constituents of aggregate economic models: while Keynesian models employed aggregate structural relationships as primitives, in new classical models individual agents are the primitives so that all aggregate relationships are emergent.” In this statement the 0th law is brought in more directly.

The application of the 0th law in socioeconomics rests with Haken’s control parameters, the first two words in the Kelso, Ding and Schöner statement. The Ri adaptive tensions (McKelvey, 2004a, 2005) can appear in many different forms, from Jack Welch’s famous phrase, “Be #1 or 2 in your industry in market share or you will be fixed, sold, or closed” (Tichy & Sherman, 1994: 108; somewhat paraphrased), to narrower tension statements aimed at technology, market, cost, or other adaptive tensions. Schumpeter (1942) long ago observed8 that entrepreneurs are particularly adept at uncovering tensions in the marketplace. The applied implication of the 0th law is that new order creation is a function of (1) control parameters, (2) adaptive tension, and (3) phase transitions motivating (4) agents’ self-organization. Take away any of these and order creation stops.

American school. The Americans emphasize the following:

- Life and social phenomena;

- New order resulting from nonlinear positive feedback effects (Young, 1928; Maruyama, 1963; Arthur, 1988) coupled with small initiating events (Holland’s, 1995, “tags”);

- Interdependent, connected, interactive data points, i.e., biological ‘agents’, though physical ones also may be included;

- Agent-based computational models;

- Fractals, scalability, power laws, and scale-free theory (Brock, 2000).

The American complexity literature focuses on positive feedback, power laws, and small instigating effects. Gleick (1987) details chaos theory, its focus on the so-called butterfly effect (the fabled story of a butterfly flapping its wings in Brazil causing a storm in North America), and aperiodic behavior ever since the founding paper by Lorenz (1963). Bak (1996) reports on his discovery of self-organized criticality - a power law event - in which small initial events can lead to complexity cascades of avalanche proportions. Arthur (1990, 2000) focuses on positive feedbacks stemming from initially small instigation events. Casti (1994) and Brock (2000) continue the emphasis on power laws. The rest of the Santa Fe story is told in Lewin (1992/1999). In their vision, positive feedback is the ‘engine’ of complex system adaptation. American complexity scientists tend to focus on Rc2 - the edge of chaos (Lewin, 1992; Kauffman, 1993, 2000; Brown & Eisenhardt, 1998), which defines the upper bound of the region of emergent complexity. What happens at Rc1 is better understood; what happens at Rc2 is more obscure. The ‘edge of chaos’, long a Santa Fe reference point (Lewin, 1992), is now in disrepute, however (Horgan, 1996: 197).
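The butterfly effect can be made concrete with a very small deterministic rule. The sketch below uses the logistic map rather than Lorenz’s own equations; that substitution, the parameter r = 4, and the starting values are all assumptions made for brevity. It follows two trajectories whose starting points differ by one part in a billion and records how far apart they drift.

```python
from __future__ import annotations


def logistic_trajectory(x0: float, r: float = 4.0, steps: int = 60) -> list[float]:
    """Iterate the simple deterministic rule x -> r * x * (1 - x)."""
    xs = [x0]
    for _ in range(steps):
        xs.append(r * xs[-1] * (1.0 - xs[-1]))
    return xs


a = logistic_trajectory(0.2)
b = logistic_trajectory(0.2 + 1e-9)  # the "butterfly": a tiny initial difference
gaps = [abs(x - y) for x, y in zip(a, b)]

print(f"gap at step 0: {gaps[0]:.1e}")
print(f"largest gap over the run: {max(gaps):.3f}")
```

The rule itself stays ‘simple’ and fully deterministic, yet the tiny initiating difference grows until the two trajectories are unrelated, which is the sense in which small instigating events matter in this literature.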

In a truly classic paper, Maruyama (1963) discusses mutual causal processes mostly with respect to biological coevolution. He distinguishes between the “deviation-counteracting” negative feedback most familiar to general systems theorists (Buckley, 1967) and “deviation-amplifying” positive feedback processes (Milsum, 1968). Boulding (1968) and Arthur (1990, 2000) focus on ‘positive feedbacks’ in economies. Negative feedback control systems such as thermostats are most familiar to us. Positive feedback effects emerge when a microphone is placed near a speaker, resulting in a high-pitched squeal. Mutual causal or coevolutionary processes are inherently nonlinear - large-scale effects may be instigated by tiny initiating events, as noted by Maruyama (1963), Gleick (1987), and Ormerod (1998).
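The contrast between deviation-counteracting and deviation-amplifying feedback can be sketched in a few lines. The linear update rule and the gain values below are hypothetical simplifications, not Maruyama’s formulation: at each step the current deviation from a set point is fed back with a fixed gain.

```python
from __future__ import annotations


def simulate_feedback(gain: float, deviation: float = 1.0, steps: int = 10) -> list[float]:
    """Track a deviation from a set point when each step feeds back gain * deviation."""
    history = [deviation]
    for _ in range(steps):
        deviation = deviation + gain * deviation
        history.append(deviation)
    return history


negative = simulate_feedback(gain=-0.5)  # thermostat-like: the deviation is counteracted
positive = simulate_feedback(gain=0.5)   # microphone-near-speaker-like: the deviation is amplified

print("negative feedback, final deviation:", negative[-1])
print("positive feedback, final deviation:", positive[-1])
```

With a negative gain the initial deviation dies away; with a positive gain the same small deviation compounds, so a tiny initiating event ends up dominating the outcome.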

It is not hard to find evidence of positive feedback instigating mutual causal behavior in organizations. The earliest discoveries date back to Roethlisberger and Dixon (1939) and Homans (1950) - both dealing with the mutual influence of agents (members of informal groups), the subsequent development of groups, and the emergence of strong group norms that feed back to sanction agent behavior. Much of the discussion by March and Sutton (1997) focuses on the problems arising from the use of simple linear models for measuring performance - problems all due to mutual causal behavior of firms and agents within them. In a recent study of advanced manufacturing technology (AMT), Lewis and Grimes (1999) use a multiparadigm (postmodernist) approach. They study AMT from all of the four paradigms identified by Burrell and Morgan (1979). No matter which lens they use, they find evidence of mutual causal (positive feedback type coevolutionary)9 behavior within firms. Many of the articles in the Organization Science special issue on coevolution (Lewin & Volberda, 1999) report evidence of microcoevolutionary behavior in organizations. Finally, a number of very recent studies of organization change show much evidence of coevolution between organization and environment and within organizations as well (Erakovic, 2002; Meyer & Gaba, 2002; Kaminska-Labbe & Thomas, 2002; Morlacchi, 2002; Siggelkow, 2002).

Both European and American perspectives are important. Phase transitions are often required to overcome the threshold-gate effects characteristic of most human agents - they don’t interact and react to just anything10. This in turn requires the adaptive tension driver to rise above Rc1 - which defines the threshold gate. Once these stronger than normal instigation effects overcome the threshold gates, then, assuming the other requirements are present (heterogeneous, adaptive learning agents, and so forth), positive feedback may start. Neither perspective seems both necessary and sufficient by itself, especially in social settings. External force effects and internal positive feedback processes are “co-producers,” to use Churchman and Ackoff’s (1950) term.
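A minimal sketch may help show why the two engines act as co-producers. Everything specific here is assumed for illustration: agents have evenly spaced threshold gates starting at Rc1, each agent responds when the external tension plus a feedback term proportional to the share of already-active agents reaches its gate, and the numeric values are arbitrary.

```python
def run_cascade(tension: float, rc1: float = 1.0, feedback: float = 1.5,
                n_agents: int = 100, max_rounds: int = 50) -> float:
    """Return the final fraction of agents that have passed their threshold gate."""
    # Heterogeneous gates: the most responsive agent's gate sits exactly at rc1.
    thresholds = [rc1 + 0.01 * i for i in range(n_agents)]
    active_fraction = 0.0
    for _ in range(max_rounds):
        # Stimulus = external adaptive tension + internal positive feedback.
        stimulus = tension + feedback * active_fraction
        new_fraction = sum(t <= stimulus for t in thresholds) / n_agents
        if new_fraction == active_fraction:
            break  # nothing changed this round, so the process has settled
        active_fraction = new_fraction
    return active_fraction


print("tension below Rc1:              ", run_cascade(tension=0.9))
print("tension above Rc1, no feedback: ", run_cascade(tension=1.05, feedback=0.0))
print("tension above Rc1, with feedback:", run_cascade(tension=1.05))
```

In this toy setting, feedback without sufficient tension never starts, tension without feedback engages only the few most responsive agents, and the two together carry the whole population past its gates.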

New method: Epistemology of computational models

Understanding how and why new structural order emerges in social systems has been at the core of management studies, sociology, and organization science for many decades, as evidenced by the following:

- Adam Smith’s exploration into the development of industrialization through division of labor;

- Early studies of bureaucracy (Weber, 1924/1947) and the evolution of professional management by Barnard, (1938);

- Research on the impact of internal structure on work group behavior (Roethlisberger & Dixon, 1939);

- Studies of bureaucracy (Blau & Scott, 1962; Crozier, 1964; Scott, 1998);

- Studies of the relationship between internal and external order (Lawrence & Lorsch, 1967);

- Analyses of strategic partnerships (Powell, 1990; Yoshino & Rangan, 1995);

- Ongoing management studies into the emergence of order and structure within entrepreneurial executive teams and firms (Bygrave & Hofer, 1991);

- Many studies of organizational network dynamics (Nohria & Eccles, 1992);

- Studies of organizations experiencing rapid change (Brown & Eisenhardt, 1997);

- Studies of Italian industrial districts (Curzio & Fortis, 2002).

These “thick-description” studies (Geertz, 1973) exemplify the enduring importance of emergence in the study of organizations.

In contrast, various kinds of organizational order creation have also been studied via agent models to explain such phenomena as organizational learning (Carley, 1992; Carley & Harrald, 1997; Carley & Hill, 2001), organization design (Carley, et al., 1998; Levinthal & Warglien, 1999), network structuring (Carley, 1999b), organizational evolution (Carley & Svoboda, 1996; Morel & Ramanujam, 1999) and strategic adaptation (Carley, 1996; Gavetti & Levinthal, 2000; Rivkin, 2001), to name just a few.

A fundamental difference between virtually all of the order-creation studies mentioned in the foregoing bullets and the studies mentioned in the previous paragraph is that most of the latter are based on computational experimental results whereas the former are not. This is just by way of illustrating that most of what our field has traditionally claimed to be true about organizational management and design is based on site visits (interviews and observations) and narrative studies, or data collected from the field after the fact and then analyzed via some kind of correlational method. Truth claims based on nonexperimental methods are notoriously suspect and generally fall outside the realm of long-accepted bases for asserting truth (Hooker, 1995; McKim & Turner, 1997; Curd & Cover, 1998), despite attempts to the contrary (Pearl, 2000).

In social and organizational science there is now a long list of people complaining about the “thin” (Geertz, 1973) ontological view of most experiments and statistical analyses and the wrong or inappropriate ontological view of organizational phenomena, an argument advocated by organizational post-positivists (Berger & Luckmann, 1966; Silverman, 1970; Lincoln, 1985) and more recently postmodernists (Reed & Hughes, 1992; Hassard & Parker, 1993; Alvesson & Deetz, 1996; Burrell, 1996; Chia, 1996; Marsden & Townley, 1996). Postmodernism, however, is now criticized as being anti-science (Holton, 1993; Norris, 1997; Gross & Levitt, 1998; Koertge, 1998; Sokal & Bricmont, 1998; McKelvey, 2003). Much of the fuel feeding the anti-experimental perspectives in organization science lies with the difficulty of setting up ontologically correct organizational experiments. This said, there is no escaping the centrality of models in effective science. Computational agent-based models have the threefold advantage that they are models, they are experiments, and they allow the study of order creation.

Model-centered science

Much has changed since the ceremonial death of logical positivism and logical empiricism at the Illinois Symposium in 1969, adroitly described in Suppe’s second edition of The Structure of Scientific Theories (1977) - the epitaph on positivism. Parallel to the fall of logical positivism and logical empiricism, we see the emergence of the Semantic Conception of Theories (Suppe, 1977). Suppe (1989: 3) says, “The Semantic Conception of Theories today probably is the philosophical analysis of the nature of theories most widely held among philosophers of science.” Semantic Conception epistemologists observe that scientific theories never represent or explain the full complexity of some phenomenon. A theory (1) “does not attempt to describe all aspects of the phenomena in its intended scope; rather it abstracts certain parameters from the phenomena and attempts to describe the phenomena in terms of just these abstracted parameters” (Suppe, 1977: 223); (2) assumes that the phenomena behave according to the selected parameters included in the theory; and (3) is typically specified in terms of its several parameters with the full knowledge that no empirical study or experiment could successfully and completely control all the complexities that might affect the designated parameters (see also Mitchell, 2004). Models comprise the core of the Semantic Conception. Its view of the theory-model-phenomena relationship is: (1) Theory, model, and phenomena are viewed as independent entities; (2) Science is bifurcated into two related activities, analytical and ontological, where theory is indirectly linked to phenomena via the mediation of models. The view presented here - with models as centered between theory and phenomena - that sets them up as autonomous agents, follows from Morgan and Morrison’s (2000) thesis. The course of science is as much governed by its choice of modeling technology as it is by theory and data.

Analytical Adequacy focuses on the theory-model link. It is important to emphasize that in the Semantic Conception ‘theory’ is always expressed via a model. ‘Theory’ does not attempt to use its ‘If A, then B’ epistemology to explain ‘real-world’ behavior. It only explains ‘model’ behavior. It does its testing in the isolated idealized world of the model (Mitchell, 2004). A mathematical or computational model is used to structure up aspects of interest within the full complexity of the real-world phenomena and defined as ‘within the scope’ of the theory. Then the model is used to elaborate the ‘If A, then B’ propositions of the theory to consider how a social system - as modeled - might behave under various conditions.

Ontological Adequacy focuses on the model-phenomena link. Developing a model’s ontological adequacy runs parallel with improving the theory-model relationship. How well does the model represent real-world phenomena? How well does an idealized wind-tunnel model of an airplane wing represent the behavior of a full sized wing in a storm? How well might a computational model from biology, such as Kauffman’s (1993) NK model, that has been applied to firms, actually represent coevolutionary competition in, say, the laptop computer industry? It therefore involves identifying various coevolutionary structures, that is, behaviors that exist in some domain and building these effects into the model as dimensions of the phase-space. If each dimension in the model adequately represents an equivalent behavioral dimension in the real world, the model is deemed ontologically adequate.

These kinds of coevolution, therefore, result in credible, i.e., more probable, science-based truth claims: (1) Theory-model coevolution; (2) Model-phenomena coevolution; and (3) The coevolution of both 1 and 2.

Agent-based computational experiments

Experiments are a continuing legacy of positivism and remain at the core of the new scientific realist aspect of philosophy of science (Bhaskar, 1975/1997). They are the standard against which other forms of truth-claims are compared (McKim & Turner, 1997), and they remain a cornerstone of modern philosophical debate (Curd & Cover, 1998). Experiments continue as the fundamental method of determining causal relations because they are the only method of clearly adding, deleting, or otherwise altering a variable to see if results change (Lalonde, 1986) - what Bhaskar (1975) calls a “contrived invariance” (see also Hooker, 1995).

The problem for organization scientists is that ‘real’ organizations can seldom if ever be recreated in a laboratory. Further, even when such attempts are made (Carley, 1996; Contractor, et al., 2000), the experiments are very thin replications of organizational complexity. Most importantly, it is very difficult, if not impossible, to delete with certainty potentially causal behavioral ‘rules’ that experimental subjects might be following. In addition, human organizational experiments are usually subject to time, size, number of rules actually ‘wiggled’, and organizational realism limitations. Finally, and perhaps most importantly, the study of emergent order in human experiments is very difficult: multiple replication conditions (such as environmental context effects and multiple rules held by subjects) have to be controlled, organizationally relevant path dependencies should be part of the experimental design, long enough duration for social structural emergence to occur needs to be allowed, a statistically relevant number of replications should be conducted, and so on.

Agent-based computational experiments offer organization scientists a virtual laboratory in which to test out theorized causal effects of organizational path dependencies, given varying environmental resources and constraints, artificial subjects (the agents) governed by known and only these rules, time periods long enough to allow agents and rules to change and emergent new structures (order) to appear, with a sufficient number of similar replications so as to allow a statistically relevant sampling. Needless to say, computational experiments have their own set of limitations. Not least of these have been the limitations of computers for handling large combinatorial spaces, agents with a sufficient number of governing rules, and lack of designed-in organizational complexities. Furthermore, much of the complexity of real organizations and current organizational theories does not show up in the models, especially earlier ones. One might reasonably conclude that most organization-relevant agent-based models have little bearing on organizational reality. On the other hand, one might also conclude that, appropriately, the models started with simpler, more stylized aspects of organizational functioning but that, studied over time, there is a progression toward improved replicational reality occurring. Math modeling has about a 300 year lead over agent-based computational modeling!

Agent-based modeling experiments are relatively new to organization studies, though some early examples exist (Cohen, et al., 1972; March, 1991). Computational models allow investigators to play out the nuances of theories over time. They also allow much clearer determinations of causal effects by allowing causal variables to be wiggled. Consequently, models offer a superb context for theory development. Ilgen and Hulin (2000: 7) go so far as to label computational modeling experiments as the “third discipline” - human experiments and correlation studies being the first two.
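What "wiggling" a causal variable looks like in a computational experiment can be sketched with a toy model. Nothing below comes from the studies cited: the two agent rules (local trial-and-error and imitation of a better performer), the parameter values, and the function names are all hypothetical. The design point is that one rule is switched on or off while everything else, including the random seeds, is held constant across a set of replications.

```python
from __future__ import annotations

import random
from statistics import mean


def run_replication(imitate: bool, seed: int, n_agents: int = 20, periods: int = 20) -> float:
    """Run one replication and return the agents' mean performance at the end."""
    rng = random.Random(seed)  # a fixed seed makes the replication repeatable
    performance = [rng.random() for _ in range(n_agents)]
    for _ in range(periods):
        for i in range(n_agents):
            # Rule 1 (always on): local trial-and-error, keep a change only if it helps.
            trial = min(1.0, performance[i] + rng.uniform(-0.05, 0.05))
            if trial > performance[i]:
                performance[i] = trial
            # Rule 2 (the wiggled rule): copy a randomly met agent that performs better.
            if imitate:
                other = rng.randrange(n_agents)
                if performance[other] > performance[i]:
                    performance[i] = performance[other]
    return mean(performance)


def run_experiment(replications: int = 30) -> dict[str, float]:
    """Compare the two conditions over the same set of seeds."""
    with_rule = [run_replication(True, seed) for seed in range(replications)]
    without_rule = [run_replication(False, seed) for seed in range(replications)]
    return {"with_imitation": mean(with_rule), "without_imitation": mean(without_rule)}


result = run_experiment()
print(result)
```

Because the agents follow these rules and no others, any difference between the two conditions can be attributed to the rule that was deleted, which is exactly what is hard to guarantee with human subjects.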

The use of agent-based models in social science has increased over the past decade11. Agents can be at any level of analysis: atomic particles, molecules, genes, species, people, firms, and so on. The distinguishing feature is that the agents are not uniform. Instead they are probabilistically idiosyncratic (McKelvey, 1997). Therefore, at the level of human behavior, they fit the postmodernists’ ontological assumptions. Using heterogeneous agent-based models is the best way to ‘marry’ postmodernist ontology with model-centered science and the current epistemological standards of assumptions of effective modern sciences - specifically complexity science (Henrickson & McKelvey, 2002; McKelvey, 2002, 2003). There are no homogeneity, equilibrium, or independence assumptions. Agents may change the nature of their attributes and capabilities along with other kinds of learning. They may also create network groupings or other higher-level structures, i.e., new order.

The study by Contractor, et al. (2000) (discussed later) is a good example demonstrating two of the elements that improve the justification-logic credentials of agent-based modeling. First, this paper is particularly notable because each of its ten agent rules is grounded in existing empirical research. The findings of each body of research, clouded as they are by errors and statistics, are reduced to idealized, stylized facts that then become agent rules. The second justification approach in this study is that the model parallels a real-world human experiment. Their results focus on the degree to which the composite model and each of the ten agent rules predict the outcome of the experiment - some do, some don’t. Another approach, with a much more sophisticated simulation model, is one by LeBaron (2000, 2001). In this study, LeBaron shows that the baseline model “... is capable of quantitatively replicating many features of actual financial markets” (p. 19). Here the emphasis is mostly on matching model outcome results to real-world data rather than basing agent rules on stylized facts. A more sophisticated match between agent model and human experiment is one designed by Carley (1996). In this study the agent model and people were given the same task. While the results do offer a test of model vs. real-world data, the comparison also suggests many analytical insights about organization design and employee training that only emerge from the juxtaposition of the two different experimental methods.

New ‘autonomous agent’ effects of models on the course of science

Models as autonomous agents12: There can be little doubt that mathematical models have dominated science since Newton. Further, mathematically constrained language (logical discourse), since the Vienna Circle circa 1907, has come to define good science in the image of classical physics. Indeed, mathematics is good for a variety of things in science, but especially, it plays two key roles. In logical positivism - which morphed into logical empiricism (Suppe, 1977) - math supplied the logical rigor aimed at assuring the truth integrity of analytical (theoretical) statements. As Read (1990) observes, the use of math for finding “numbers” actually is less important in science than its use in testing for rigorous thinking. But, as is wonderfully evident in the various chapters in the Morgan and Morrison (2000) anthology, math is also used as an efficient substitute for iconic models in building up a ‘working’ model valuable for understanding how an aspect of the phenomena under study behaves (the empirical roots of a model) and/or for better understanding the interrelation of the various elements comprising a transcendental realist explanatory theory (the theoretical roots).

Traditionally, a model has been treated as a more or less accurate “mirroring” of theory or phenomena (Cartwright, 1983) - as a billiard ball model might mirror atoms. In this role it is a sort of ‘catalyst’ that speeds up the course of science but without altering the chemistry of the ingredients. Morgan and Morrison (2000) take dead aim at this view, however, showing that models are autonomous agents that can, indeed, affect the chemistry. It is perhaps best illustrated in a figure supplied by Boumans (2000). He observes that Cartwright, in her classic 1983 book, “...conceive[s] models as instruments to bridge the gap between theory and data.” Boumans gives ample evidence that many ingredients influence the final nature of a model. Ingredients impacting models are metaphors, analogies, policy views, empirical data, math techniques, math concepts, stylized facts, and theoretical notions. Boumans’s analyses are based on business cycle models by Kalecki, Frisch and Tinbergen in the 1930s and Lucas (1972) that clearly illustrate the warping resulting from ‘mathematical molding’ for mostly tractability reasons and the influence of the various non-theory and non-data ingredients.

Models as autonomous agents, thus, become so both from (1) math molding and (2) influence by all the other ingredients. Since the other ingredients could reasonably influence agent-based models as well as math models - as formal, symbol-based models, and since math models dominate formal modeling in social science (mostly in economics) - I now focus only on the molding effects of math models rooted in classical physics. As is evident from the four previously mentioned business cycle models, Mirowski’s (1989) broad discussion (not included here), and Read’s (1990) analysis (below), the math molding effect is pervasive. Much of the molding effect of math as an autonomous model / agent, as developed in classical physics and economics, makes three heroic assumptions: (1) Mathematicians in classical physics made the ‘instrumentally convenient’ homogeneity assumption. This made the math more tractable; (2) Science in general, and in the social sciences especially econometricians (Greene, 2002), assume independence among agents (data points); and (3) Physicists principally studied phenomena under the governance of the 1st Law of Thermodynamics and, within this Law, made the equilibrium assumption. Here the math model accounted for the translation of order from one form to another and presumed all phenomena varied around equilibrium points13.

Math’s molding effects on sociocultural analysis: Read’s (1990) analysis of the applications of math modeling in archaeology illustrates how the classical physics roots of math modeling and the needs of tractability give rise to assumptions that are demonstrably antithetical to a correct understanding, modeling, and theorizing of human social behavior. Though his analysis is ostensibly about archaeology, it applies generally to sociocultural systems. Most telling are assumptions he identifies that combine to show just how much social phenomena have to be warped to fit the tractability constraints of the rate studies framed within the math molding process of calculus. They focus on universality, stability, equilibrium, external forces, determinism, and global dynamics at the expense of individual dynamics.

Given the molding effect of all these assumptions it is especially instructive to quote Read, the mathematician, worrying about equilibrium-based mathematical applications to archaeology and sociocultural systems:

- In linking “empirically defined relationships with mathematically defined relationships... [and] the symbolic with the empirical domain... a number of deep issues...arise... These issues relate, in particular, to the ability of human systems to change and modify themselves according to goals that change through time, on the one hand, and the common assumption of relative stability of the structure of ... [theoretical] models used to express formal properties of systems, on the other hand... A major challenge facing effective - mathematical - modeling of the human systems considered by archaeologists is to develop models that can take into account this capacity for self-modification according to internally constructed and defined goals.”

- “In part, the difficulty is conceptual and stems from reifying the society as an entity that responds to forces acting upon it, much as a physical object responds in its movements to forces acting upon it. For the physical object, the effects of forces on motion are well known and a particular situation can, in principle, be examined through the appropriate application of mathematical representation of these effects along with suitable information on boundary and initial conditions. It is far from evident that a similar framework applies to whole societies.”

- “Perhaps because culture, except in its material products, is not directly observable in archaeological data, and perhaps because the things observable are directly the result of individual behavior, there has been much emphasis on purported ‘laws’ of behavior as the foundation for the explanatory arguments that archaeologists are trying to develop. This is not likely to succeed. To the extent that there are ‘laws’ affecting human behavior, they must be due to properties of the mind that are consequences of selection acting on genetic information... ‘laws’ of behavior are inevitably of a different character than laws of physics such as F = ma. The latter, apparently, is fundamental to the universe itself; behavioral ‘laws’ such as ‘rational decision making’ are true only to the extent to which there has been selection for a mind that processes and acts upon information in this manner... Without virtually isomorphic mapping from genetic information to properties of the mind, searching for universal laws of behavior ... is a chimera.”

Common throughout these and similar statements are Read’s observations about “the ability of [reified] human systems to change and modify themselves,” be “self-reflective,” respond passively to “forces acting” from outside, “manipulation by subgroups,” “self-evaluation,” “self-reflection,” “affecting and defining how they are going to change,” and the “chimera” of searching for “behavioral laws” reflecting the effects of external forces.

Just as the social sciences lagged behind when math was the supreme modeling approach, they are also lagging in their transition to agent-based models. Though citation rates may have picked up more recently, in 1997 there were some 18,000 natural science cites to nonlinear computational modeling, but only around 180 in economics and near 40 in sociology (Henrickson, 2004). As Henrickson and McKelvey (2002: 7288) wonder:

“How can it be that sciences founded on the mathematical linear determinism of classical physics have moved more quickly toward the use of nonlinear computer models than economics and sociology - where those doing the science are no different from social actors - who are the Brownian Motion?”

Computational modeling in organization science14

Lichtenstein and McKelvey (2005) note that there are over 300 agent-based models having relevance to organization studies. Maguire, et al. (forthcoming) list some 15 applications of just Kauffman’s (1993) NK model to organizational phenomena. Just to give credibility to the idea of agent models being applied to organizations, I briefly describe some examples below. As Lichtenstein and McKelvey (2005) observe, most models generate emergent networks; some generate emergent groups and supervenience effects; even fewer generate hierarchies and only two stretch a bit beyond these minimal stages of organizational order.

Cellular automata search grids: The oldest agent-based model is referred to as a cellular automata (CA) model. Agents exist in a search space whose size depends on the number of agents and rules. The search space is typically depicted as consisting of hills and valleys with, for example, higher agent fitness or intelligence represented as a peak. Agents having highest fitness may be scattered randomly across the space and separated by numerous agents having lower fitness. A particular agent, thus, runs the risk of ending up on a suboptimal peak during the course of its search attempts over some number of time periods. Usually agent interactions are limited to their ‘nearest neighbors’ - those agents directly adjacent to a specified agent. Agents usually have one output decision, depending on a couple of input signals. Often they only have one governing rule. As agents and rules increase, the search space grows geometrically, as does computer processing time. One of the most interesting is Kauffman’s NK model.
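To make the nearest-neighbor idea concrete, the following is a minimal sketch of a one-dimensional cellular automaton, not a reproduction of any model cited here. It assumes agents sit on a ring, hold a binary state, and follow a single governing rule: adopt the majority state among oneself and one's two adjacent neighbors. The majority rule and the function names `step` and `run` are illustrative assumptions.

```python
import random


def step(states):
    """One synchronous update: each agent adopts the majority state among
    itself and its two nearest neighbors on a ring (assumed rule)."""
    n = len(states)
    return [
        1 if states[(i - 1) % n] + states[i] + states[(i + 1) % n] >= 2 else 0
        for i in range(n)
    ]


def run(states, periods):
    """Return the list of agent states over the requested number of periods."""
    history = [list(states)]
    for _ in range(periods):
        states = step(states)
        history.append(states)
    return history


if __name__ == "__main__":
    rng = random.Random(0)  # fixed seed so the run is repeatable
    start = [rng.randint(0, 1) for _ in range(30)]
    for row in run(start, 5):
        print("".join("#" if s else "." for s in row))
```

Each agent has one output decision that depends only on a couple of input signals from adjacent agents, yet contiguous blocks of like-minded agents can form at the level of the whole ring.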

Modeling tunable NK landscapes: Kauffman’s (1993) NK Fitness Landscape, a well-known application of CA models, simulates a co-evolutionary process in which both the individual agents and the degree of interdependency between them are modeled over time. N refers to the number of agents in the model, and K refers to the density of agent interactions. According to the model, an agent’s adaptive fitness depends on its ability to identify and ‘climb to’ the highest level of fitness of its neighbors. However, due to the nearest-neighbor search limitation, an agent surrounded by neighbors all having lower fitness levels gets trapped on a local optimum that may be well below the highest system-wide optimum. Note that the agents are not independent of each other.

As individual agents change, they affect all other agents, thus altering some aspects of the nearest-neighbor landscape itself. In this way, the level of complexity ‘tunes’ the agents’ search landscape by altering the number and height of peaks and depths of valleys they encounter. It turns out that the degree of order in the overall landscape crucially depends on the level of K, the degree of system-wide interdependence, that is, complexity (Kauffman, 1993). According to Kauffman, as complexity increases, the number of peaks vastly increases in the landscape, while the difference between peaks and valleys diminishes, such that even though the pressure of Darwinian selection persists, emergent order cannot be explained by selection effects. He terms it complexity catastrophe. Instead, a moderate amount of complexity creates optimal rugged landscapes, which lead to the highest system-wide fitness levels.
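The link between K and the number of peaks can be illustrated with a small computational experiment. The sketch below assumes the common textbook construction of an NK landscape (N binary elements, each drawing a random fitness contribution that depends on its own state and the states of K other randomly chosen elements) and a one-element-change definition of neighboring configurations. It is a simplified illustration, not Kauffman's own code, and the names `make_landscape` and `count_local_optima` are hypothetical.

```python
import itertools
import random


def make_landscape(n, k, seed=0):
    """Build a fitness function for an NK landscape (assumed standard form)."""
    rng = random.Random(seed)
    # Element i depends on itself plus k other randomly chosen elements.
    deps = [[i] + rng.sample([j for j in range(n) if j != i], k) for i in range(n)]
    # One random contribution per element and per local configuration.
    tables = [
        {key: rng.random() for key in itertools.product((0, 1), repeat=k + 1)}
        for _ in range(n)
    ]

    def fitness(config):
        # System fitness is the mean of the element-level contributions.
        return sum(tables[i][tuple(config[j] for j in deps[i])] for i in range(n)) / n

    return fitness


def count_local_optima(n, fitness):
    """Count configurations that no single-element change can improve."""
    count = 0
    for config in itertools.product((0, 1), repeat=n):
        f = fitness(config)
        neighbors = (
            config[:i] + (1 - config[i],) + config[i + 1:] for i in range(n)
        )
        if all(fitness(nb) <= f for nb in neighbors):
            count += 1
    return count


if __name__ == "__main__":
    n = 8
    for k in (0, 2, 4, 7):
        print("K =", k, "local optima:", count_local_optima(n, make_landscape(n, k)))
```

With K = 0 the elements are independent and the landscape has a single peak; as K approaches N - 1 the count of local optima rises, which is the ruggedness that traps nearest-neighbor searchers on suboptimal peaks.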

Researchers have applied the NK model to business settings by exploring ways connectedness will bring an entire system to a higher level of fitness without locking it into a ‘catastrophe’ of interdependence (Levinthal, 1997). Moderate levels of interconnection can be achieved through modularization of the production process (Levinthal & Warglien, 1999), by keeping internal value chain interdependencies to levels just below their opponents’ (McKelvey, 1999), or by adopting strategies based on the industry-wide level of firm interdependence (Baum, 1999). Rivkin (2000, 2001) shows that moderate complexity prevents spillover effects while at the same time fostering intrafirm sharing of new knowledge. In an empirical test of the NK application to innovation, Fleming and Sorenson (2001: 1025) show “invention can be maximized by working with a large number of components that interact to an intermediate degree.”

Design sequencing: Siggelkow and Levinthal (2003) use Kauffman’s NK model to tease out some of the dynamics arising when firms mix centralization-decentralization or exploration-exploitation designs. They model performance results stemming from three structural designs: unchanging centralization, unchanging decentralization, and “temporary decentralization with subsequent reintegration” - the latter termed “reintegrator firms.” Some of their key findings are:

- When environments are significantly changing, reintegrator firms outperform centralized firms;

- As firms become more complex - more degrees of freedom and cross-departmental interdependencies - reintegrators offer performance advantages;

- The length of the time of decentralization before reintegration has a strong effect - in the model, performance peaks when decentralization extends to 40 periods and then flattens out;

- Temporary creation of cross-divisional interdependencies - termed “scrambling” in the article - produces the highest performance. The authors don’t mention it, but this seems like temporary creation of weak ties (Granovetter, 1973, 1985), which fosters novelty in the short term, while allowing firms to reinstall efficiency objectives once the period of exploration is completed.

In contrast to static “balance” approaches, they conclude: “...exploration and stability are not achieved simultaneously through distinct organizational features... but sequentially by adopting different organizational structures.” This model supports the Thomas, et al. (2005) case study.

Modeling learning rates: Yuan and McKelvey (2004) first dock15 their model against results from Kauffman’s prior work, replicating Kauffman’s original results to correlations of 0.976. They use his NK model to test the hypotheses that communication interactivity is nonlinearly related to both amount and rate of group learning over time. Kauffman’s complexity catastrophe effect applies here as well. They find that amount of group learning is a direct function of size, N, but is curvilinearly related to K - highest in the middle of an inverted U-shaped curve. They find that rate of learning is slowest in the middle of a U-shaped curve. However, density in communication interactivity is not independent of group size. Once they adjust for this effect via standardization of K by N-1, they find that the curvilinear effect disappears, but the catastrophe effect continues as a function of two linear variables: Rate of group learning remains a positive linear function of communication interactivity, but amount of learning becomes a negative linear function of interactivity density. Among other things, they also find that altering the distribution of communication by creating isolates and stars in groups has a statistically significant effect on the coevolutionary development of group-level learning over time.

Holland’s genetic algorithms: An important advance in modeling emergence occurs through the use of genetic algorithms (GAs), invented by Holland (1975, 1995). GAs allow agents to learn and change over time by changing the rules governing their behavior: “Agents adapt by changing their rules as experience accumulates” (Holland, 1995: 10). Axelrod and Cohen (2000: 8) broaden the implications of GAs, asserting that:

“...each change of strategy by a worker alters the context in which the next change will be tried and evaluated. When multiple populations of agents are adapting to each other, the result is a coevolutionary process.”

In biological GAs, agents appear to ‘mate’ and produce ‘offspring’ that have different ‘rule-strings’ (genetic codes, blueprints, routines, competencies) as compared with their parents. In organizational applications, agents’ rule-strings change over time (i.e., across artificial generations) without agent replacement and without having ‘children’. The upward causal effects of agents are defined by these rules. Whereas CA models typically are limited to a relatively few rules and agents - because the landscape grows geometrically each time each is added - GAs allow many agents to have many rules (Macy & Skvoretz, 1998). New rule-strings can have varying numbers of rules retained or recombined from prior agents’ rules, thus allowing the increased evolutionary fitness of complex processes such as decision-making and learning, along with recombinations of diverse skills. Two organizational GA applications are described next.
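Before turning to those applications, the organizational variant of a GA can be sketched in a few lines. The sketch assumes rule-strings are lists of binary rules, that an agent recombines its own rule-string with that of a better-performing agent and keeps the result only if it performs no worse, and that fitness is simply the number of rules switched on. All three are illustrative assumptions rather than features of Holland's work or of the cited models, and the function names are hypothetical.

```python
import random


def fitness(rules):
    """Placeholder fitness (assumption): the number of rules switched on."""
    return sum(rules)


def crossover(own, other, rng):
    """One-point recombination: keep the head of own, take the tail of other."""
    point = rng.randrange(1, len(own))
    return own[:point] + other[point:]


def mutate(rules, rate, rng):
    """Flip each rule independently with probability `rate`."""
    return [1 - r if rng.random() < rate else r for r in rules]


def evolve(n_agents=20, n_rules=16, generations=40, rate=0.02, seed=0):
    """Agents persist across generations; only their rule-strings change."""
    rng = random.Random(seed)
    agents = [[rng.randint(0, 1) for _ in range(n_rules)] for _ in range(n_agents)]
    history = [max(fitness(a) for a in agents)]
    for _ in range(generations):
        # The better-performing half of the population serves as role models.
        top = sorted(agents, key=fitness, reverse=True)[: n_agents // 2]
        updated = []
        for own in agents:
            candidate = mutate(crossover(own, rng.choice(top), rng), rate, rng)
            # An agent adopts the new rule-string only if it is no worse.
            updated.append(candidate if fitness(candidate) >= fitness(own) else own)
        agents = updated
        history.append(max(fitness(a) for a in agents))
    return history


if __name__ == "__main__":
    print(evolve())
```

Because no agent is replaced and no agent accepts a worse rule-string, the best fitness in the population never declines from one artificial generation to the next.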

Simulated coordination models: Paul, et al. (1996) model adaptations to organizational structure by examining the adaptation of financial trading firms (groups). Their firms / groups survive in a financial market environment; they solidify out of networks to consist of from 1 to 9 constituent agents, and each agent has a different rule-set for buying and selling financial instruments or doing nothing. Firms may activate or deactivate their agents, or form networks of seemingly better performing agents from prior periods. In an efficient market performance climate with a 50% probability of success, their model firms beat the market 60% of the time. In this model the behavior of agents (components) can be altered by firm-level goals, thus allowing firms to better perform in the market environment. Further, due to the coevolution of up- and downward causality, results are not deducible from the initial agent configurations and rules.

Another model examines the classic proposition that coordination, while necessary to accomplish interdependent tasks, is costly. Crowston’s (1996) GA model tests this hypothesis by simulating organizations consisting of agents, in subgroups, in a market, with variable task interdependency. This results in upward, downward, and horizontal causalities, i.e., causal intricacy. Bottom-level agents have to perform their tasks in a specific length of time; agents who coordinate may expedite their tasks, but the cost of coordination means a lessening of their time allotment according to the following rule: if an agent ‘talks’ to all the other agents all the time, there is no time left to accomplish its tasks. Results show that organizations and / or their employee agents do in fact minimize coordination costs through organizing in particular ways. His study is an example of a GA model being used to test a classic normative statement by setting up a computational experiment that allows groups to emerge as appropriate. It also includes causal intricacy and coevolutionary causality (for comparison, see Thomas, et al., 2004).

Multi-level models: Quite possibly the most famous example of agent-based modeling is Epstein and Axtell’s Growing Artificial Societies (1996). Their model is called “Sugarscape.” They boil an agent’s behavior down to one simple rule: “Look around as far as your vision permits, find the spot with the most sugar, go there and eat the sugar” (p. 6). Agents search on a CA landscape but they sort of have sex, reproduce offspring, and begin to hold genetic, identity, and culture identification tags according to a genetic algorithm. This model not only builds social networks, but also higher-level groups emerge. These groups develop cultural properties; once cultures form they can supervene and alter the behavior and groupings of agents. Epstein and Axtell’s simulation includes four distinct levels: agents, groupings, cultures, and the overall Sugarscape environment. The Sugarscape elements include agents, emergent groups, higher-level groupings, emergent culture, multiple causalities, and environmental resources and constraints. Though theirs is ostensibly a model of an economy, it easily translates into the intraorganizational market economy highlighted in Halal and Taylor (1999).
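The single movement rule quoted above is simple enough to sketch directly. The version below is a bare-bones illustration, not Epstein and Axtell's implementation: it assumes a bounded rectangular grid, a lone agent that looks along the four lattice directions up to its vision, and no sugar regrowth, reproduction, or tags. The function names `best_spot` and `move_and_eat` are hypothetical.

```python
def best_spot(sugar, pos, vision):
    """Return the visible cell with the most sugar (the agent's own cell wins ties)."""
    rows, cols = len(sugar), len(sugar[0])
    r, c = pos
    best = pos
    for dr, dc in ((0, 1), (0, -1), (1, 0), (-1, 0)):
        for d in range(1, vision + 1):
            rr, cc = r + dr * d, c + dc * d
            if not (0 <= rr < rows and 0 <= cc < cols):
                break  # the edge of the grid blocks the line of sight
            if sugar[rr][cc] > sugar[best[0]][best[1]]:
                best = (rr, cc)
    return best


def move_and_eat(sugar, pos, vision):
    """Move to the best visible cell and harvest all of its sugar."""
    target = best_spot(sugar, pos, vision)
    eaten = sugar[target[0]][target[1]]
    sugar[target[0]][target[1]] = 0
    return target, eaten


if __name__ == "__main__":
    grid = [[0, 1, 0],
            [0, 0, 4],
            [2, 0, 0]]
    print(move_and_eat(grid, (1, 0), vision=2))
```

Vision matters even in this toy grid: an agent at row 1, column 0 with a vision of 1 can only see the 2 units of sugar below it, whereas a vision of 2 lets it reach the richer cell at the end of its row.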

Carley and colleagues have produced some of the most sophisticated computational models to date. They have been validated against experimental lab studies (Carley, 1996) and archival data on actual organizations (Carley & Lin, 1995). The most distinctive feature of the Carley models is that agents have cognitive processing ability - individual agents and the organization as a whole can remember past choices, learn from them, and anticipate and project plans into the future. These models combine elements of CA, GA, and neural networks (for the latter, see Haykin, 1998), as does LeBaron’s (2000) stock market model.

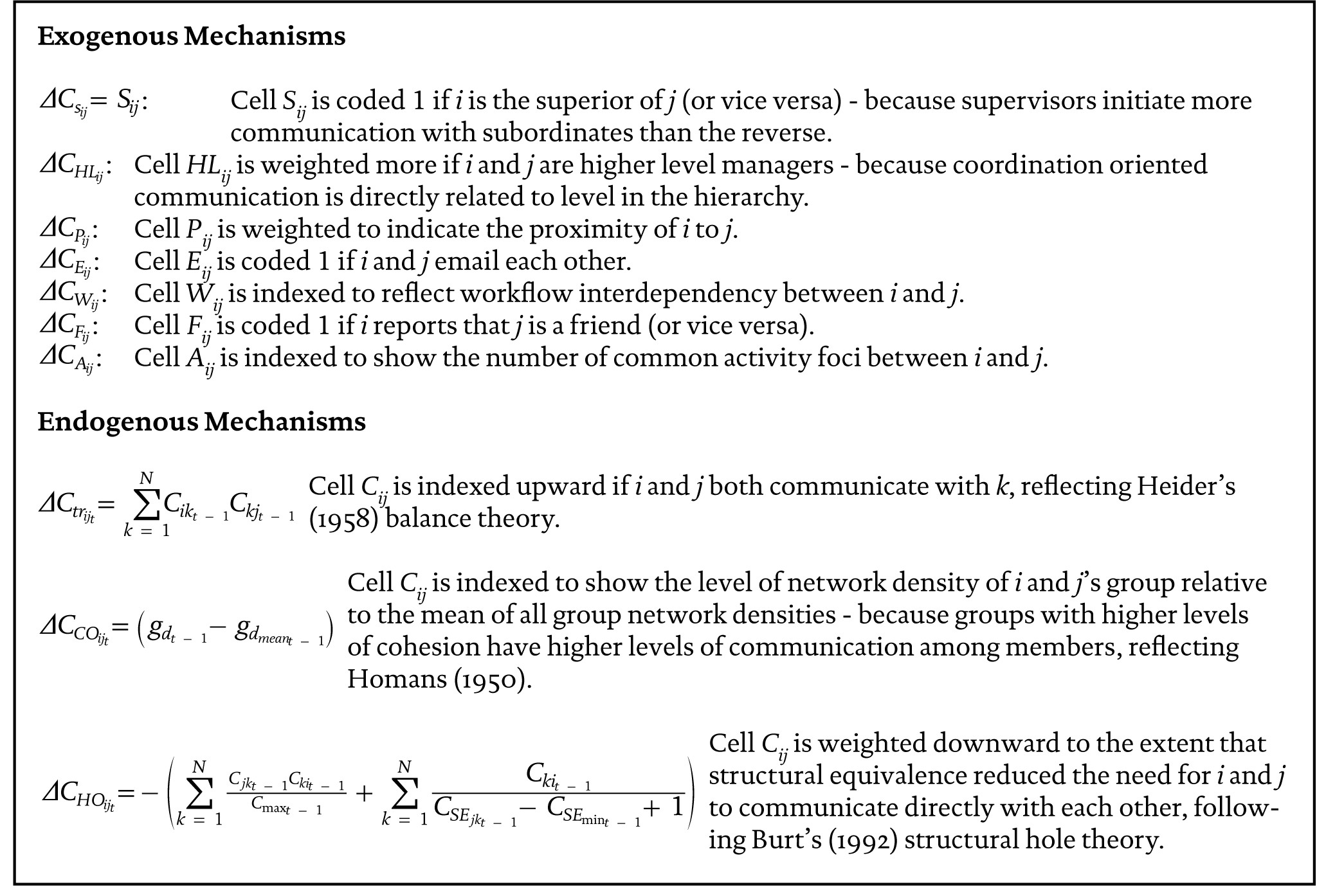

Table 2 Model substructures defined (after Contractor et al., 2000)

In Carley’s CONSTRUCT (1991) and CONSTRUCT-O (Carley & Hill, 2001) models, simulated agents have a position or role in a social network and a mental model consisting of knowledge about other agents. Agents communicate and learn from others with similar types of knowledge. CONSTRUCT-O allows for the rapid formation of subgroups and the emergence of culture, which, when it crystallizes, supervenes to alter agent coevolution and search for improved performance. These models show the emergence of communication networks, the formation of stable hierarchical groups and the supervenience of group effects on component agent behaviors - network driven, groups solidify, and downward causality emerges.